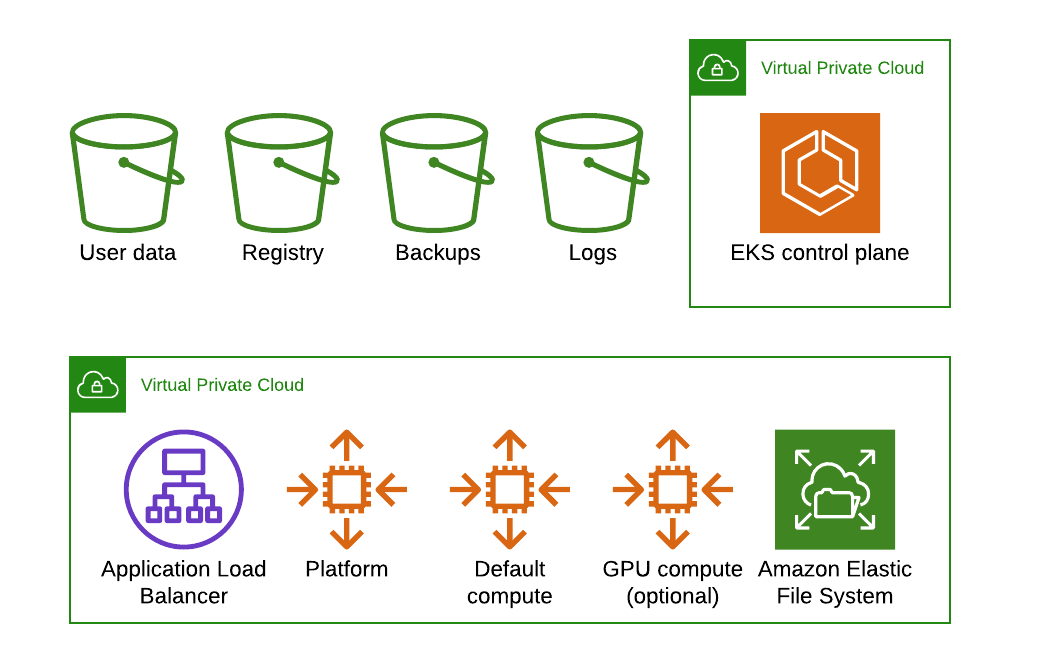

Domino can run on a Kubernetes cluster provided by AWS Elastic Kubernetes Service (EKS). When running on EKS, the Domino architecture uses AWS resources to fulfill the Domino cluster requirements as follows:

- EKS features a fully managed Kubernetes control plane.
- Domino uses a dedicated Auto Scaling Group (ASG) of EKS workers to host the Domino platform.
- ASGs of EKS workers host elastic compute for Domino executions.
- AWS S3 stores user data, the internal Docker registry, backups, and logs.
- Elastic Container Registry (ECR) can be configured as an external Docker registry.
- AWS EFS stores Domino Datasets.
- The `ebs.csi.aws.com` provisioner creates persistent volumes for Domino executions.
- Calico is a network plugin that supports Kubernetes network policies.
- Domino cannot be installed on EKS Fargate, because Fargate does not support stateful workloads with persistent volumes.
- Domino recommends provisioning via our Terraform modules.
- Domino recommends that nodes have private IPs fronted by a load balancer with proper security controls. Nodes in the cluster can egress to the Internet through a NAT gateway.

Note that the use of GPU compute instances is optional.

Your annual Domino license fee will not include any charges incurred from using AWS services. You can find detailed pricing information for the Amazon services listed above at https://aws.amazon.com/pricing.

This section describes how to configure an Amazon EKS cluster for use with Domino. You should be familiar with the following AWS services:

- Elastic Kubernetes Service (EKS)
- Identity and Access Management (IAM)
- Virtual Private Cloud (VPC) Networking
- Elastic Block Store (EBS)
- Elastic File System (EFS)
- S3 Object Storage

Additionally, a basic understanding of Kubernetes concepts such as node pools, CNI network plugins, storage classes, and autoscaling, as well as Docker, is useful when deploying the cluster.

Security considerations

You must create IAM policies in the AWS console to provision an EKS cluster. Domino recommends that you follow the principle of least privilege when you create IAM policies, and grant elevated privileges only when necessary. See the AWS IAM documentation on granting least privilege.

Service quotas

Amazon maintains default service quotas for each of the services listed previously. Log in to the AWS Service Quotas console to check the default service quotas and manage your quotas.

VPC networking

If you plan to do VPC peering or set up a site-to-site VPN connection to connect your cluster to other resources like data sources or authentication services, configure your cluster VPC accordingly to avoid address space collisions.
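To check for address space collisions before you peer the VPC or set up a VPN, you can compare the cluster VPC CIDR against the ranges on the other side of the connection. The following is a minimal Python sketch using only the standard `ipaddress` module; the function name `find_collisions` and the example CIDRs are hypothetical.

```python
import ipaddress


def find_collisions(cluster_vpc_cidr: str, peer_cidrs: list[str]) -> list[str]:
    """Return the peer CIDRs whose address space overlaps the cluster VPC CIDR."""
    cluster = ipaddress.ip_network(cluster_vpc_cidr)
    return [cidr for cidr in peer_cidrs if cluster.overlaps(ipaddress.ip_network(cidr))]


if __name__ == "__main__":
    # Hypothetical ranges: the cluster VPC and networks reachable over peering or VPN.
    print(find_collisions("10.0.0.0/16", ["10.0.128.0/20", "172.16.0.0/12", "192.168.0.0/16"]))
    # ['10.0.128.0/20']
```

Any CIDR returned here would need to be renumbered on one side before the connection can route traffic unambiguously.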

Namespaces

You do not have to configure namespaces prior to installation. Domino creates the following namespaces in the cluster during installation:

| Namespace | Contains |
|---|---|
| | Persistent Domino platform services and metadata required for platform operation (control plane) |
| | Ephemeral Domino execution pods launched by user actions in the application (workspaces, model APIs, apps, etc.) |
| | Domino installation metadata and secrets |

Node pools

The EKS cluster must have at least two ASGs that produce worker nodes with the following specifications and distinct node labels, and it can optionally include a GPU pool:

| Pool | Min-Max | Instance | Disk | Labels |
|---|---|---|---|---|
| platform | 4-6 | m5.4xlarge | 128G | |
| default | 1-20 | m5.2xlarge | 400G | |
| Optional: default-gpu | 0-5 | p3.2xlarge | 400G | |
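Because EC2 On-Demand instance quotas are expressed in vCPUs and tracked separately for standard families (such as M) and for P instances, it can help to total the vCPUs these pools may reach before you review your service quotas. The following Python sketch uses the vCPU counts of the instance types in the table (16 for m5.4xlarge, 8 for m5.2xlarge and p3.2xlarge); the pool names follow those used elsewhere on this page, and the function names are hypothetical. Grouping by the first letter of the instance type is a simplification that happens to separate the two quota groups used here.

```python
# vCPUs per instance type, from the Amazon EC2 instance type specifications.
VCPUS = {"m5.4xlarge": 16, "m5.2xlarge": 8, "p3.2xlarge": 8}

# (pool, min nodes, max nodes, instance type), taken from the node pool table.
POOLS = [
    ("platform", 4, 6, "m5.4xlarge"),
    ("default", 1, 20, "m5.2xlarge"),
    ("default-gpu", 0, 5, "p3.2xlarge"),
]


def vcpu_range(pools):
    """Return (min, max) total vCPUs across all pools."""
    low = sum(min_nodes * VCPUS[itype] for _, min_nodes, _, itype in pools)
    high = sum(max_nodes * VCPUS[itype] for _, _, max_nodes, itype in pools)
    return low, high


def max_vcpus_by_family(pools):
    """Sum the maximum vCPUs per instance family letter ("m" for m5, "p" for p3)."""
    totals = {}
    for _, _, max_nodes, itype in pools:
        family = itype[0]
        totals[family] = totals.get(family, 0) + max_nodes * VCPUS[itype]
    return totals


if __name__ == "__main__":
    print(vcpu_range(POOLS))           # (72, 296)
    print(max_vcpus_by_family(POOLS))  # {'m': 256, 'p': 40}
```

With the table's maximums, the standard-family quota needs room for 256 vCPUs and the P-instance quota for 40 vCPUs if you use the optional GPU pool.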

The platform ASG can run in one availability zone or across three availability zones.

If you want Domino to run with some components deployed as highly available ReplicaSets, you must use three availability zones.

Using two zones is not supported, as it results in an even number of nodes in a single failure domain.

All compute node pools you use must have corresponding ASGs in any AZ used by other node pools.

If you set up an isolated node pool in one zone, you might encounter volume affinity issues.

To run the default and default-gpu pools across multiple availability zones, you must duplicate ASGs in each zone with the same configuration, including the same labels, to ensure pods are delivered to the zone where the required ephemeral volumes are available.
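The zone rule above can be checked against an inventory of your ASGs. The following Python sketch assumes you have listed each ASG as a (pool label, availability zone) pair, for example from your Terraform outputs; the function name, input shape, and zone names are hypothetical. It reports every compute pool that lacks an ASG in a zone used by any other pool.

```python
def missing_zones(asgs, compute_pools):
    """asgs: list of (pool label, availability zone) pairs, one per ASG.
    Return {pool: [zones]} for each compute pool that lacks an ASG in a
    zone used by any other pool."""
    all_zones = {zone for _, zone in asgs}
    gaps = {}
    for pool in compute_pools:
        pool_zones = {zone for p, zone in asgs if p == pool}
        missing = sorted(all_zones - pool_zones)
        if missing:
            gaps[pool] = missing
    return gaps


if __name__ == "__main__":
    # Hypothetical inventory: the platform spans three zones, the compute pools do not.
    asgs = [
        ("platform", "us-east-1a"), ("platform", "us-east-1b"), ("platform", "us-east-1c"),
        ("default", "us-east-1a"), ("default", "us-east-1b"),
        ("default-gpu", "us-east-1a"),
    ]
    print(missing_zones(asgs, ["default", "default-gpu"]))
    # {'default': ['us-east-1c'], 'default-gpu': ['us-east-1b', 'us-east-1c']}
```

An empty result means every compute pool has an ASG in each zone in use, so pods can be scheduled wherever their volumes live.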

To get suitable drivers onto GPU nodes, use the EKS-optimized accelerated AMI distributed by Amazon as the machine image for the GPU node pool.

You can add ASGs with distinct dominodatalab.com/node-pool labels to make other instance types available for Domino executions.

See Manage Compute Resources to learn how these different node types are referenced by labels from the Domino application.

Network plugin

Domino relies on Kubernetes network policies to manage secure communication between pods in the cluster.

The network plugin implements network policies, so your cluster must use a networking solution that supports NetworkPolicy, such as Calico.

See the AWS documentation about installing Calico for your EKS cluster.

If you use the Amazon VPC CNI for networking, with only the NetworkPolicy enforcement components of Calico, ensure that the subnets you use for your cluster have sufficiently large CIDR ranges: the VPC CNI assigns every pod in the cluster an IP address from the subnet, using secondary addresses on the nodes' elastic network interfaces. Domino recommends at least a /23 CIDR for the cluster.
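As a rough sizing aid, the following Python sketch counts the addresses available in a set of subnets, assuming the standard AWS reservation of five addresses per subnet (the first four and the last). The function name and example CIDRs are hypothetical, and the real headroom for pods is lower, because node primary addresses and the addresses the VPC CNI pre-allocates as a warm pool also come from these subnets.

```python
import ipaddress

# AWS reserves the first four addresses and the last address in every subnet.
AWS_RESERVED_PER_SUBNET = 5


def usable_addresses(subnet_cidrs: list[str]) -> int:
    """Total assignable addresses across the given subnets."""
    return sum(
        ipaddress.ip_network(cidr).num_addresses - AWS_RESERVED_PER_SUBNET
        for cidr in subnet_cidrs
    )


if __name__ == "__main__":
    print(usable_addresses(["10.0.0.0/23"]))                 # 507
    print(usable_addresses(["10.0.0.0/24", "10.0.1.0/24"]))  # 502
```

Splitting one /23 into two /24 subnets (for example, one per availability zone) yields 502 rather than 507 addresses, since each subnet pays the five-address reservation.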

Docker bridge (Kubernetes 1.23 or lower)

Note: Docker bridge is not supported in Kubernetes 1.24 and above.

By default, AWS AMIs do not have bridge networking enabled for Docker containers. Domino requires bridge networking for environment builds, so add `--enable-docker-bridge true` to the user data of the launch configuration used by all Domino ASG nodes:

- Create a copy of the launch configuration used by each Domino ASG.
- In the copied launch configuration, open User data and add `--enable-docker-bridge true`.
- Switch the Domino ASGs to use the new launch configuration.
- Drain any existing nodes in the ASG.
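If you edit many launch configurations, a small helper can make the user-data step repeatable. The following Python sketch is an illustration under stated assumptions, not a Domino tool: it operates on the decoded user-data text, assumes that text invokes the EKS-optimized AMI bootstrap script `/etc/eks/bootstrap.sh` on a single line, and assumes the flag belongs on that command line. The function name is hypothetical.

```python
BOOTSTRAP = "/etc/eks/bootstrap.sh"
FLAG = "--enable-docker-bridge true"


def enable_docker_bridge(user_data: str) -> str:
    """Append the Docker bridge flag to the bootstrap.sh invocation.
    Assumes the invocation sits on one line; leaves text unchanged if the flag is already present."""
    lines = user_data.splitlines()
    for i, line in enumerate(lines):
        if BOOTSTRAP in line and "--enable-docker-bridge" not in line:
            lines[i] = line.rstrip() + " " + FLAG
    # Preserve a trailing newline if the original text had one.
    return "\n".join(lines) + ("\n" if user_data.endswith("\n") else "")


if __name__ == "__main__":
    # Hypothetical user data for a cluster named my-cluster.
    original = "#!/bin/bash\nset -o xtrace\n/etc/eks/bootstrap.sh my-cluster\n"
    print(enable_docker_bridge(original))
```

Because the helper is idempotent, running it twice on the same user data does not duplicate the flag.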

Dynamic block storage

The EKS cluster must be equipped with an EBS-backed storage class that Domino will use to provision ephemeral volumes for user execution. See the following for an example storage class specification:

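```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: domino-ebs  # placeholder name; choose your own and record it for the Domino install
provisioner: ebs.csi.aws.com  # EBS CSI driver; the in-tree kubernetes.io/aws-ebs provisioner is deprecated
parameters:
  type: gp2
```

Datasets storage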

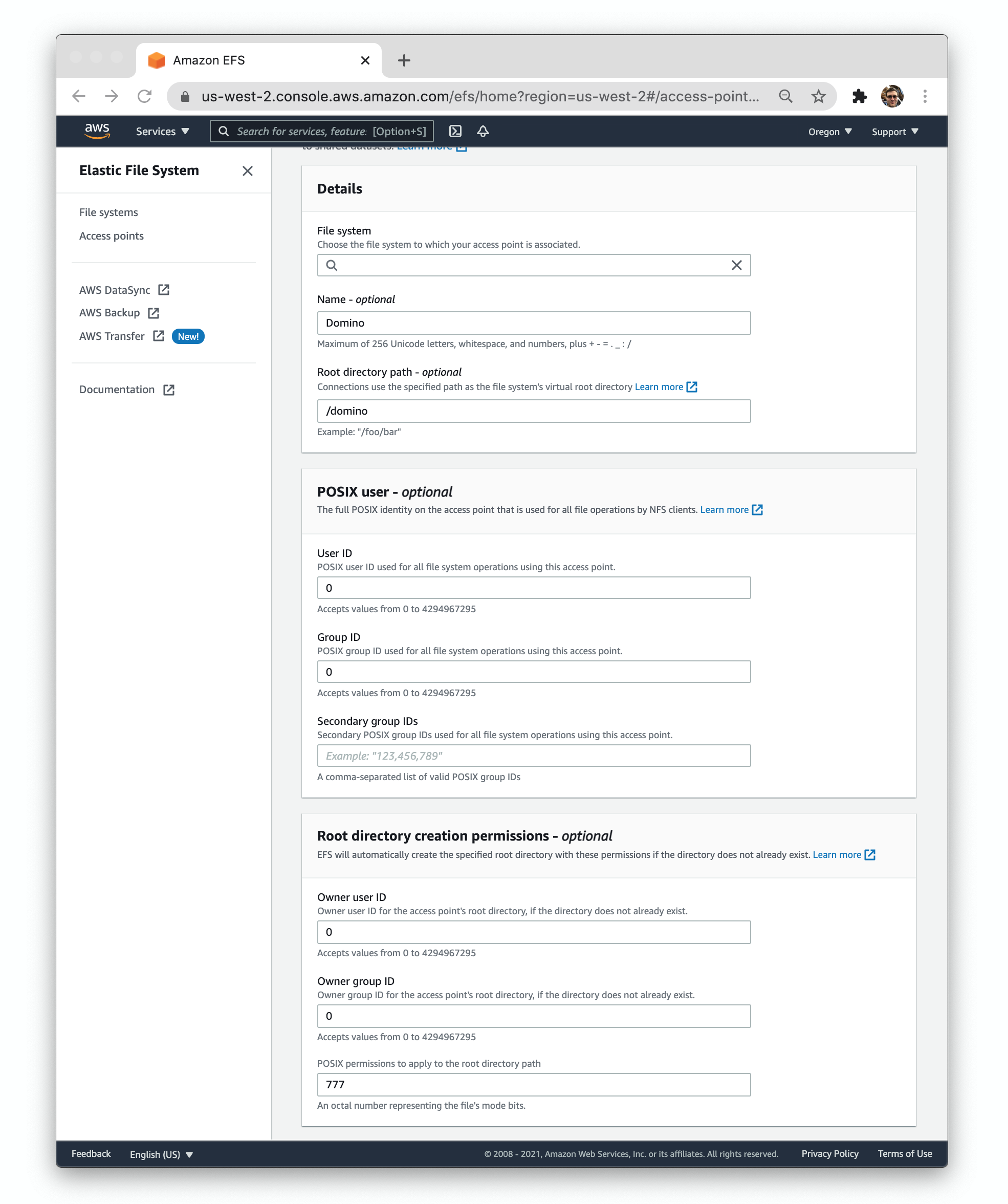

To store Datasets in Domino, you must configure Amazon EFS (Elastic File System). Provision an EFS file system and configure an access point that allows access from the EKS cluster.

Configure the access point with the following key parameters, also shown in the image:

- Root directory path: /domino
- User ID: 0
- Group ID: 0
- Owner user ID: 0
- Owner group ID: 0
- Root permissions: 777

Record the file system and access point IDs for use when you install Domino.
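If you create the access point programmatically rather than in the console, the settings above map onto the EFS `CreateAccessPoint` API: the user and group IDs go in `PosixUser`, and the root path, owner IDs, and permissions go in `RootDirectory`. The following Python sketch only builds the request arguments; the helper name and the file system ID are hypothetical, and the boto3 call is shown in a comment rather than executed.

```python
def access_point_request(file_system_id: str) -> dict:
    """Build keyword arguments for EFS CreateAccessPoint using the settings listed above."""
    return {
        "FileSystemId": file_system_id,
        # User ID and Group ID that the access point enforces for all file operations.
        "PosixUser": {"Uid": 0, "Gid": 0},
        "RootDirectory": {
            "Path": "/domino",
            # Owner IDs and permissions applied if /domino does not exist yet.
            "CreationInfo": {"OwnerUid": 0, "OwnerGid": 0, "Permissions": "777"},
        },
    }


# Usage with boto3 (not executed here; the file system ID is hypothetical):
#   import boto3
#   efs = boto3.client("efs")
#   response = efs.create_access_point(**access_point_request("fs-0123456789abcdef0"))
#   print(response["AccessPointId"])
```

The `AccessPointId` in the response, together with the file system ID, are the two values to record.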

Blob storage

When running on EKS, Domino can use Amazon S3 for durable object storage.

Create the following S3 buckets:

- One bucket for user data
- One bucket for the internal Docker registry
- One bucket for logs
- One bucket for backups

Configure each bucket to permit read and write access from the EKS cluster. To do so, apply an IAM policy like the following to the nodes in the cluster:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:ListBucket",
        "s3:GetBucketLocation",
        "s3:ListBucketMultipartUploads"
      ],
      "Resource": [
        "arn:aws:s3:::$your-logs-bucket-name",
        "arn:aws:s3:::$your-backups-bucket-name",
        "arn:aws:s3:::$your-user-data-bucket-name",
        "arn:aws:s3:::$your-registry-bucket-name"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:GetObject",
        "s3:DeleteObject",
        "s3:ListMultipartUploadParts",
        "s3:AbortMultipartUpload"
      ],
      "Resource": [
        "arn:aws:s3:::$your-logs-bucket-name/*",
        "arn:aws:s3:::$your-backups-bucket-name/*",
        "arn:aws:s3:::$your-user-data-bucket-name/*",
        "arn:aws:s3:::$your-registry-bucket-name/*"
      ]
    }
  ]
}
```

Record the names of these buckets for use when you install Domino.
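Rather than editing the bucket ARNs by hand, you can generate the same policy from your bucket names. This Python sketch reproduces the two statements of the example policy: bucket-level actions such as `s3:ListBucket` apply to the bucket ARN, while object-level actions such as `s3:GetObject` apply to `bucket/*`. The function name and bucket names are hypothetical.

```python
import json


def domino_bucket_policy(buckets: list[str]) -> dict:
    """Build the node IAM policy granting read/write access to the given S3 buckets."""
    bucket_arns = [f"arn:aws:s3:::{name}" for name in buckets]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Bucket-level actions target the bucket ARN itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation", "s3:ListBucketMultipartUploads"],
                "Resource": bucket_arns,
            },
            {
                # Object-level actions target every object key in each bucket.
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject",
                    "s3:GetObject",
                    "s3:DeleteObject",
                    "s3:ListMultipartUploadParts",
                    "s3:AbortMultipartUpload",
                ],
                "Resource": [f"{arn}/*" for arn in bucket_arns],
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket names for logs, backups, user data, and the registry.
    names = ["acme-domino-logs", "acme-domino-backups", "acme-domino-data", "acme-domino-registry"]
    print(json.dumps(domino_bucket_policy(names), indent=2))
```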

Autoscaler access

If you intend to deploy the Kubernetes Cluster Autoscaler in your cluster, the instance profile used by your platform nodes must have the necessary AWS Auto Scaling permissions.

See the following example policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "autoscaling:DescribeAutoScalingGroups",
        "autoscaling:DescribeAutoScalingInstances",
        "autoscaling:DescribeLaunchConfigurations",
        "autoscaling:DescribeTags",
        "autoscaling:SetDesiredCapacity",
        "autoscaling:TerminateInstanceInAutoScalingGroup",
        "ec2:DescribeLaunchTemplateVersions",
        "ec2:DescribeInstanceTypes"
      ],
      "Resource": "*",
      "Effect": "Allow"
    }
  ]
}
```

Domain

You must configure Domino to serve from a specific FQDN. To serve Domino securely over HTTPS, you also need an SSL certificate that covers the chosen name. Record the FQDN for use when you install Domino.

Important: A Domino install can't be hosted on a subdomain of another Domino install. For example, if you have Domino deployed at data-science.example.com, you can't deploy another instance of Domino at acme.data-science.example.com.
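To catch a conflicting hostname before installation, you can test whether either FQDN is a subdomain of the other. The following is a minimal Python sketch; the function names are hypothetical, and the check compares names textually (case-insensitive, ignoring a trailing dot) rather than querying DNS.

```python
def is_subdomain(name: str, parent: str) -> bool:
    """Return True if name is a strict subdomain of parent (a.example.com of example.com)."""
    name = name.lower().rstrip(".")
    parent = parent.lower().rstrip(".")
    # Requiring the leading dot avoids false matches such as other-example.com.
    return name.endswith("." + parent)


def violates_subdomain_rule(new_fqdn: str, existing_fqdn: str) -> bool:
    """True if one Domino install would be hosted on a subdomain of the other."""
    return is_subdomain(new_fqdn, existing_fqdn) or is_subdomain(existing_fqdn, new_fqdn)


if __name__ == "__main__":
    print(violates_subdomain_rule("acme.data-science.example.com", "data-science.example.com"))  # True
    print(violates_subdomain_rule("ml.example.com", "data-science.example.com"))  # False
```

The check runs in both directions, because a new install at a parent domain of an existing install would leave the existing one on a subdomain of the new one.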