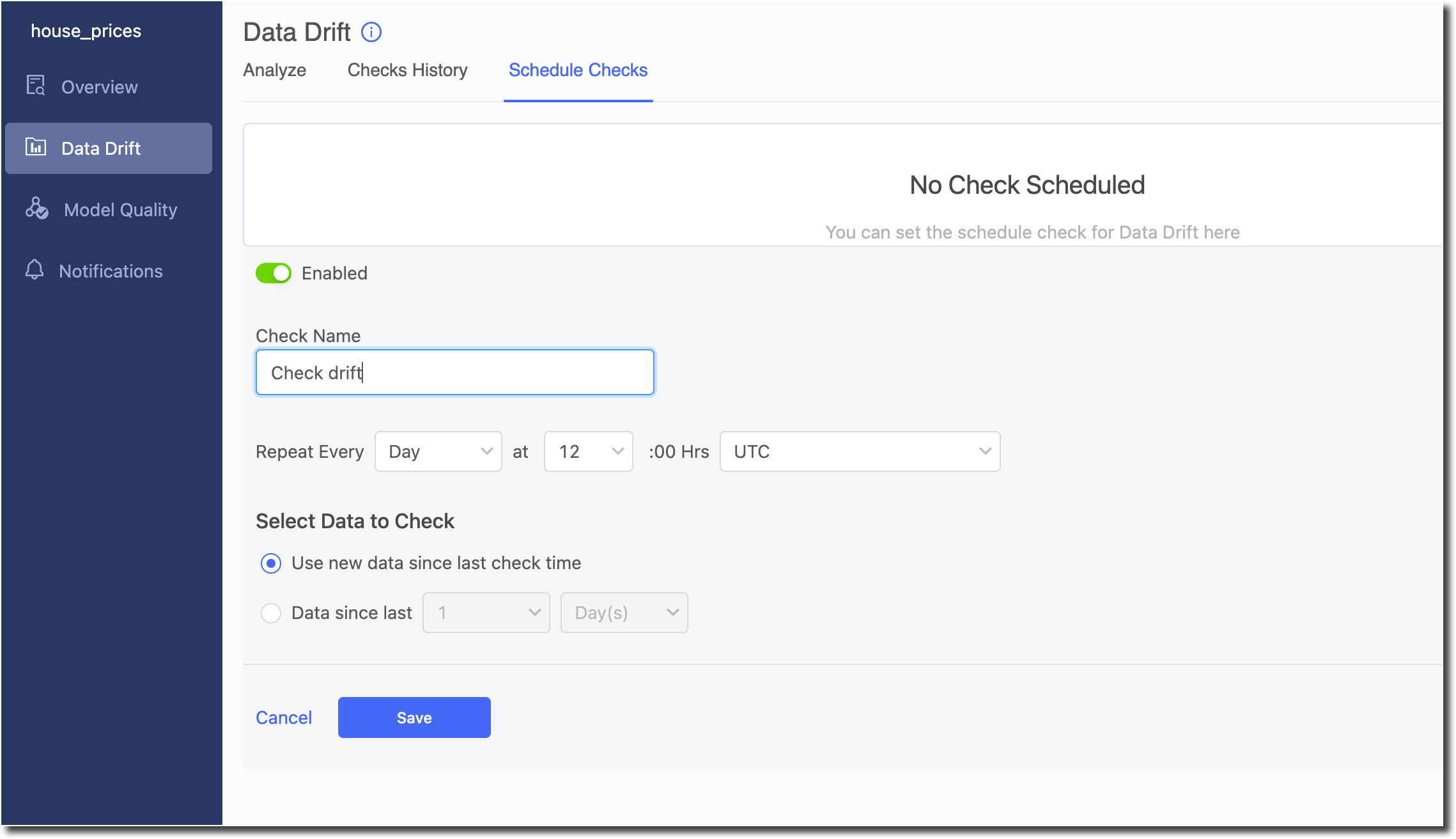

In a production setting, prediction and ground truth data would normally be pushed into the Model Monitor using APIs. To be notified automatically when data drift or model quality metrics degrade beyond their thresholds in any time period, set up Scheduled Checks for the model.

You can specify how often the checks repeat and what time range of data each check uses for its calculations. When a check fails, notification emails are sent to the email addresses configured in the Notification section.

The Select Data to Check setting offers two options for the time range of data used:

- Use data since last check time

  - For data drift, this option ensures that only predictions with timestamps after the last run of the scheduled check are considered for the check.

  - For model quality, this option ensures that only ground truth labels ingested into the Model Monitor after the last run of the scheduled check are matched with historical predictions made by the model and considered for the check.

- Data since last x time period

  - For data drift, this option considers only predictions with timestamps that fall within the last specified x time period (e.g., the last 3 days).

  - For model quality, this option takes only ground truth labels ingested within the last specified x time period (e.g., the last 3 days), matches them with historical predictions made by the model, and considers them for the check.
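The difference between the two options can be illustrated with a minimal Python sketch. This is not the Model Monitor implementation or its API: the names `Record`, `window_start`, and `select_records` are hypothetical, and treating the start of the window as exclusive is an assumption made only for this illustration. The `timestamp` field stands for the prediction timestamp in a data drift check, or the ground truth ingestion time in a model quality check.

```python
from datetime import datetime, timedelta
from typing import List, NamedTuple, Optional


class Record(NamedTuple):
    """Hypothetical record: a prediction (drift) or a ground truth label (quality)."""
    record_id: str
    timestamp: datetime  # prediction timestamp, or ground truth ingestion time


def window_start(now: datetime, last_check: datetime,
                 lookback: Optional[timedelta] = None) -> datetime:
    """Return the start of the data window for one run of a scheduled check.

    lookback=None models "Use data since last check time";
    a timedelta models "Data since last x time period".
    """
    if lookback is not None:
        return now - lookback
    return last_check


def select_records(records: List[Record], now: datetime, last_check: datetime,
                   lookback: Optional[timedelta] = None) -> List[Record]:
    """Keep records whose timestamp falls in (window start, now]."""
    start = window_start(now, last_check, lookback)
    return [r for r in records if start < r.timestamp <= now]


# Illustrative data: the check runs now and last ran one day ago.
now = datetime(2024, 1, 10, 12, 0)
last_check = datetime(2024, 1, 9, 12, 0)
records = [
    Record("a", datetime(2024, 1, 6, 0, 0)),
    Record("b", datetime(2024, 1, 8, 0, 0)),
    Record("c", datetime(2024, 1, 9, 18, 0)),
    Record("d", datetime(2024, 1, 10, 6, 0)),
]

since_last_check = select_records(records, now, last_check)
last_three_days = select_records(records, now, last_check,
                                 lookback=timedelta(days=3))
```

With this sample data, the first option picks up only records `c` and `d`, which arrived after the previous run, while a 3-day lookback also includes `b`. When the lookback period is longer than the check frequency, consecutive runs of the second option evaluate overlapping data; the first option never re-evaluates a record.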