To scale the performance of Model APIs in Domino, you can scale up hardware with Model API hardware tiers or increase the degree of parallelism.

Use Model API hardware tiers to scale your models deployed as Domino Model APIs.

Because Model APIs often have different requirements from Workspaces and Jobs, Domino lets you designate specific hardware tiers for Model APIs. This lets you tailor hardware to the demands of machine learning model deployment.

Note: Model API tiers and regular hardware tiers are not interchangeable.

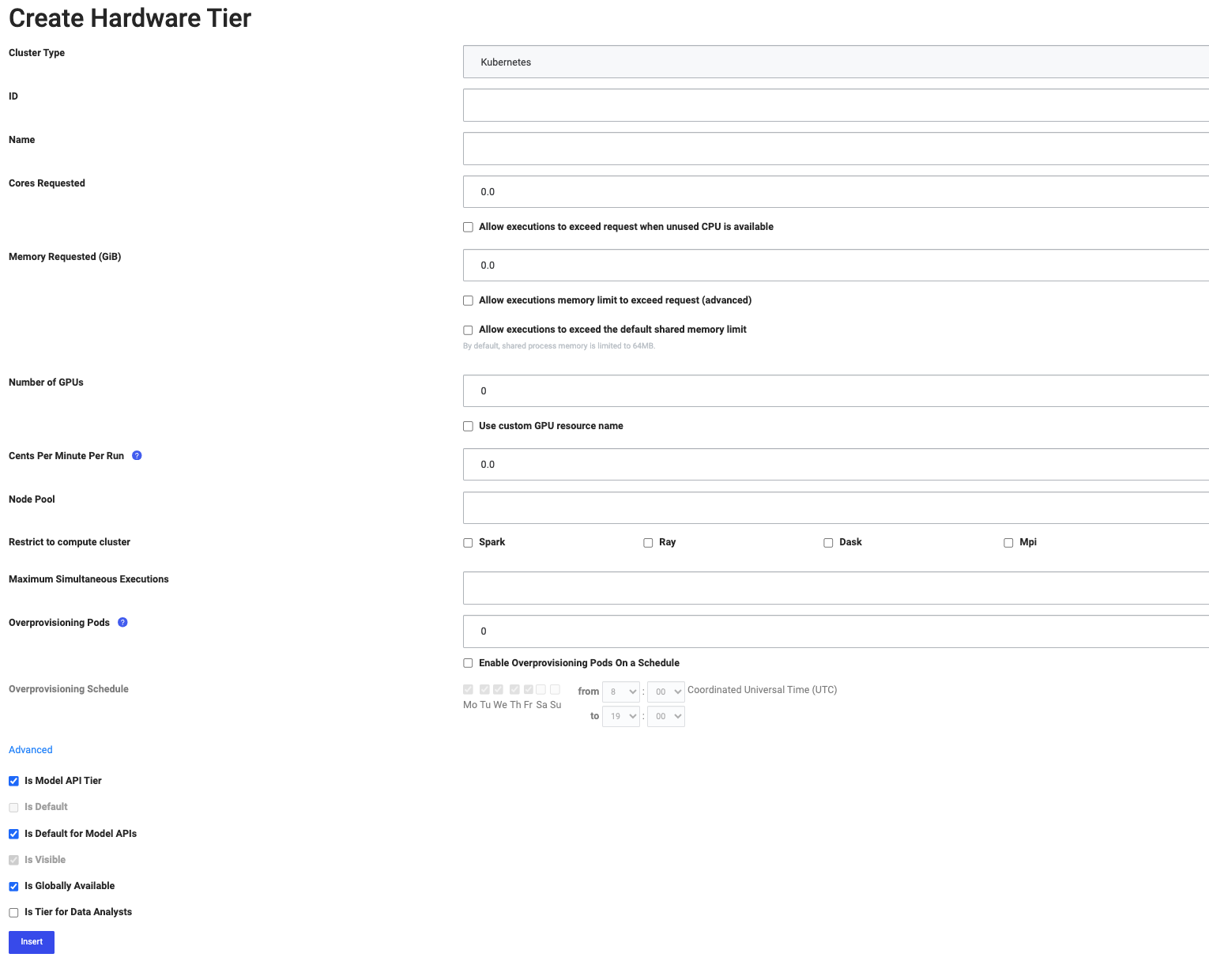

To create a new Model API hardware tier:

- From the admin home page, go to Advanced > Hardware Tiers.
- Click New to create a hardware tier, or click Edit to modify an existing hardware tier or set a default hardware tier.
- Set the hardware tier values you need. For details, see Create hardware tier.
- Select Is Model API Tier.
- Optionally, specify whether this Model API tier should be the default for all Model APIs.
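The rule in the note above (Model API tiers and regular tiers are not interchangeable) can be pictured as a simple filter over tier records. This is a minimal, hypothetical model for illustration only, not Domino's implementation or API: `HardwareTier`, its fields, and the helper functions are invented names, and the sketch assumes that a tier flagged with Is Model API Tier is offered only to Model APIs, while unflagged tiers are offered only to Workspaces and Jobs.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class HardwareTier:
    """Hypothetical, simplified hardware tier record (not Domino's API)."""
    name: str
    cores: float
    memory_gib: float
    is_model_api_tier: bool = False          # mirrors the "Is Model API Tier" checkbox
    is_default_model_api_tier: bool = False  # mirrors the "default for all Model APIs" option


def tiers_for_model_apis(tiers: List[HardwareTier]) -> List[HardwareTier]:
    """Assumption: only tiers flagged as Model API tiers are offered to Model APIs."""
    return [t for t in tiers if t.is_model_api_tier]


def tiers_for_workspaces_and_jobs(tiers: List[HardwareTier]) -> List[HardwareTier]:
    """Assumption: Model API tiers are never offered to Workspaces and Jobs."""
    return [t for t in tiers if not t.is_model_api_tier]


def default_model_api_tier(tiers: List[HardwareTier]) -> Optional[HardwareTier]:
    """Return the Model API tier marked as the default, or None if none is marked."""
    for tier in tiers_for_model_apis(tiers):
        if tier.is_default_model_api_tier:
            return tier
    return None


# Hypothetical tiers: one regular tier and two Model API tiers.
tiers = [
    HardwareTier("small", cores=1, memory_gib=4),
    HardwareTier("model-api-small", cores=1, memory_gib=2,
                 is_model_api_tier=True, is_default_model_api_tier=True),
    HardwareTier("model-api-large", cores=4, memory_gib=16, is_model_api_tier=True),
]

print([t.name for t in tiers_for_model_apis(tiers)])
print([t.name for t in tiers_for_workspaces_and_jobs(tiers)])
print(default_model_api_tier(tiers).name)
```

Keeping the two pools disjoint means sizing a tier for model serving never changes what users see when they start a Workspace or Job.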

To scale all Python Model APIs, increase the degree of parallelism, that is, the number of uWSGI worker processes that serve requests.

Note: Only synchronous models support this setting.

- Go to Admin > Advanced > Central Config.
- Set `com.cerebro.domino.modelmanager.uWsgi.workerCount` to a value greater than its default of `1` to increase the uWSGI worker count. See the uWSGI documentation for more information.
- The system displays the following message: "Changes here do not take effect until services are restarted. Click here to restart services."
- Click the here link in the message to restart the services.
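To pick a worker count, a back-of-envelope capacity estimate can help. The sketch below assumes that each synchronous uWSGI worker serves one request at a time and that the hardware tier's CPU and memory are not the bottleneck; under those assumptions, one Model API instance can serve at most about `workerCount / latency` requests per second. The function names are hypothetical, and the numbers are illustrative inputs, not measurements from Domino.

```python
import math


def max_throughput_rps(worker_count: int, latency_seconds: float) -> float:
    """Rough upper bound on requests per second for one instance.

    Assumes each worker handles exactly one request at a time and that
    hardware resources are not the limiting factor.
    """
    if worker_count < 1:
        raise ValueError("worker_count must be at least 1")
    if latency_seconds <= 0:
        raise ValueError("latency_seconds must be positive")
    return worker_count / latency_seconds


def workers_needed(target_rps: float, latency_seconds: float) -> int:
    """Smallest worker count whose estimated capacity meets target_rps."""
    if target_rps <= 0 or latency_seconds <= 0:
        raise ValueError("target_rps and latency_seconds must be positive")
    return max(1, math.ceil(target_rps * latency_seconds))


# Illustrative model with 250 ms per prediction.
print(max_throughput_rps(1, 0.25))  # 4.0 (the default of one worker)
print(max_throughput_rps(4, 0.25))  # 16.0
print(workers_needed(30, 0.25))     # 8
```

Each uWSGI worker is a separate process, so raising the worker count also raises CPU and memory use; pair a higher worker count with a Model API hardware tier large enough to hold every worker.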

Learn more about Domino hardware tiers.