To scale the performance of Model APIs in Domino, you can configure model resource quotas or increase the degree of parallelism.

Resource quotas set the hardware specifications of the pods that host Model APIs. A resource quota determines the CPU and memory resources available to the model that uses it. Resource quotas are different from the hardware tiers that Domino Runs use.

- From the admin home, go to Advanced > Resource Quotas.

- Click New to create a resource quota, or click Edit to modify an existing resource quota or set a default resource quota. Resource quotas cannot be permanently deleted.

- When you create or edit resource quotas, set the following properties:

  - Visible: Leave this checkbox selected to list the resource quota in the menu for users publishing models. Clear the checkbox to make the resource quota unavailable for use.

  - Memory (GB) - Request: The amount of RAM reserved for a model with this quota.

  - Memory (GB) - Limit: If the hosting node has RAM available, a model running with this quota can use additional memory up to this limit.

  - CPU (# of Cores) - Request: The number of cores reserved for a model with this quota.

  - CPU (# of Cores) - Limit: If the hosting node has idle cores available, a model running with this quota can use additional cores up to this limit.

  - Default: The resource quota with this set to `true` is used by default for all newly published models.

  - GPU: Select this checkbox to request GPU resources. When the checkbox is selected, you must enter the GPU Resource Name and the Number of requested GPUs.

The Request values for CPU Cores, Memory, and GPUs are thresholds used to determine whether a node has the capacity to host an execution pod. These requested resources are effectively reserved for the pod.

The Limit values control the amount of resources a pod can use beyond the amount requested. If there’s additional headroom on the node, the pod can use resources up to this limit.

Caution: If a pod uses more resources than it requested and this causes excess demand on a node, Kubernetes might evict the pod and terminate the associated Domino Run. To prevent this, Domino recommends setting the requests and limits to the same values.

  - Node Pool: Select the type of the node pool for the deployment.

    - If you select Default, the node pool defaults to the value that your administrator set in the `com.cerebro.domino.modelManager.nodeSelectorLabelValue` or `com.cerebro.domino.modelManager.nodeSelectorLabelValue.gpu` key, depending on whether you are using GPUs.

    - If you select Custom, enter the name of the node pool.

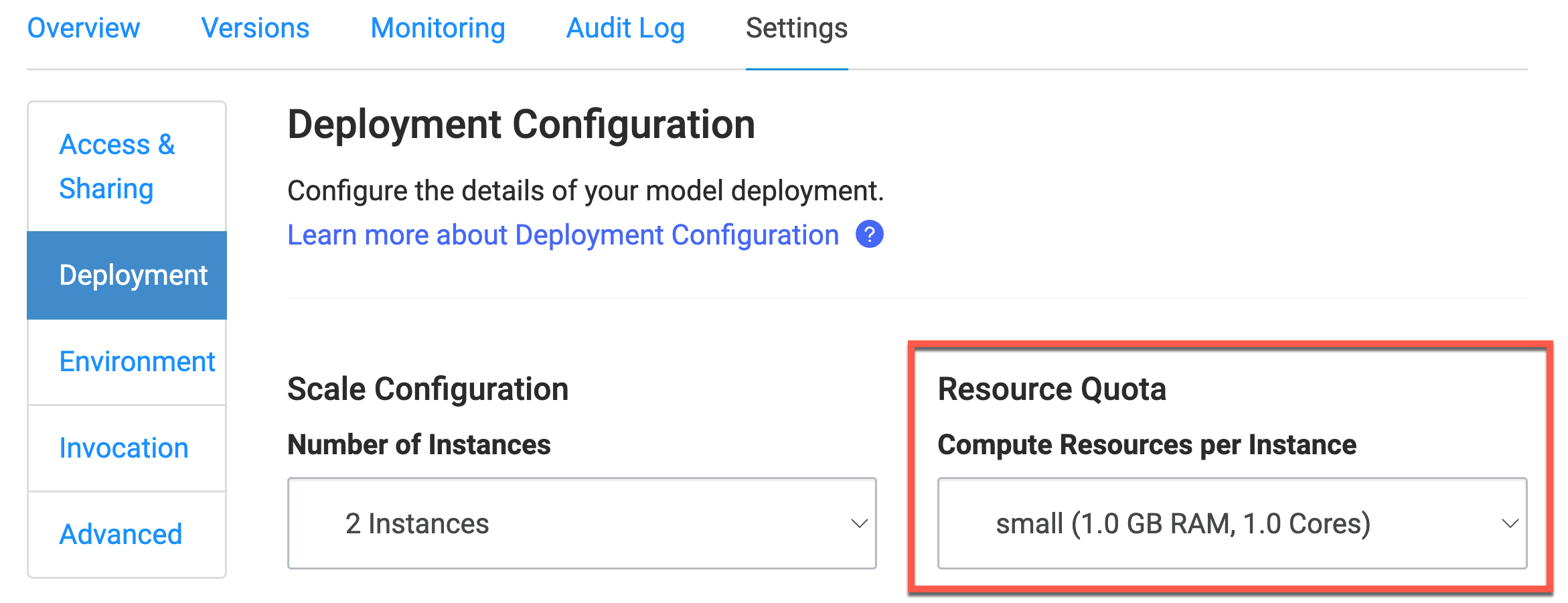

Your users can now set the resource quotas for their deployments. To do this, they go to the model’s deployment page and choose a resource quota from the Compute resources per instance menu in the Resource Quota section.
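The difference between the Request and Limit values can be sketched in a few lines of Python. This is an illustrative model only, not Domino or Kubernetes code: the `ResourceQuota` class and both helper functions are hypothetical names introduced here, and the numbers are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResourceQuota:
    """Hypothetical stand-in for the fields on the resource quota form."""
    memory_request_gb: float
    memory_limit_gb: float
    cpu_request_cores: float
    cpu_limit_cores: float


def fits_on_node(quota: ResourceQuota, free_memory_gb: float, free_cores: float) -> bool:
    """Only the Request values decide whether a node has capacity for the pod."""
    return (quota.memory_request_gb <= free_memory_gb
            and quota.cpu_request_cores <= free_cores)


def requests_match_limits(quota: ResourceQuota) -> bool:
    """True when the quota follows the recommendation that requests equal limits."""
    return (quota.memory_request_gb == quota.memory_limit_gb
            and quota.cpu_request_cores == quota.cpu_limit_cores)


# Example quotas (illustrative values).
burstable = ResourceQuota(memory_request_gb=4, memory_limit_gb=8,
                          cpu_request_cores=1, cpu_limit_cores=2)
fixed = ResourceQuota(memory_request_gb=4, memory_limit_gb=4,
                      cpu_request_cores=1, cpu_limit_cores=1)

print(fits_on_node(burstable, free_memory_gb=6, free_cores=2))  # True
print(requests_match_limits(burstable))  # False
print(requests_match_limits(fixed))  # True
```

In this sketch, the `burstable` quota can be scheduled on a node with 6 GB free even though its limit is 8 GB, which is the situation where a pod can later exceed what the node can spare. The `fixed` quota avoids that by never using more than it reserved.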

- Click Create.
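The Node Pool choice described in the steps above amounts to a small lookup. The following sketch assumes the Central Config values are available as a plain dictionary; the function `resolve_node_pool` and the pool names are hypothetical and only illustrate the described behavior, not how Domino implements it.

```python
# Central Config keys named in the Node Pool description.
CPU_KEY = "com.cerebro.domino.modelManager.nodeSelectorLabelValue"
GPU_KEY = "com.cerebro.domino.modelManager.nodeSelectorLabelValue.gpu"


def resolve_node_pool(selection, uses_gpu, central_config, custom_name=None):
    """Return the node pool name for a quota (hypothetical helper)."""
    if selection == "Custom":
        if not custom_name:
            raise ValueError("Custom node pool selected but no name entered")
        return custom_name
    if selection == "Default":
        # The GPU quota reads the .gpu key; all other quotas read the base key.
        key = GPU_KEY if uses_gpu else CPU_KEY
        return central_config[key]
    raise ValueError(f"unknown node pool selection: {selection!r}")


# Pool names below are made up for illustration.
config = {CPU_KEY: "default-pool", GPU_KEY: "gpu-pool"}

print(resolve_node_pool("Default", uses_gpu=False, central_config=config))
print(resolve_node_pool("Default", uses_gpu=True, central_config=config))
print(resolve_node_pool("Custom", uses_gpu=False, central_config=config,
                        custom_name="model-pool"))
```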

To scale all Python Model APIs, set the degree of parallelism.

Note: Only synchronous models support this.

- Go to Admin > Advanced > Central Config.

- Set `com.cerebro.domino.modelmanager.uWsgi.workerCount` to a value greater than its default value of `1` to increase the uWSGI worker count. See the uWSGI documentation for more information.

- The system shows the following message:

  Changes here do not take effect until services are restarted. Click here to restart services.

- Click here to restart the services.
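To get a rough feel for what a higher worker count buys, the following back-of-the-envelope estimate may help. It assumes that each uWSGI worker handles one synchronous request at a time and that request latency stays constant as workers are added; real throughput also depends on available CPU, memory, and the model itself. The function name and the latency figure are illustrative assumptions, not Domino settings.

```python
def max_requests_per_second(worker_count: int, avg_latency_seconds: float,
                            instances: int = 1) -> float:
    """Upper-bound throughput when each worker serves one request at a time."""
    if worker_count < 1 or instances < 1:
        raise ValueError("worker_count and instances must be at least 1")
    if avg_latency_seconds <= 0:
        raise ValueError("avg_latency_seconds must be positive")
    return instances * worker_count / avg_latency_seconds


# Assume a model that takes 0.25 seconds per prediction (illustrative value).
for workers in (1, 2, 4):
    print(workers, max_requests_per_second(workers, avg_latency_seconds=0.25))
```

Because each additional worker is a separate process, a larger worker count may also raise the memory and CPU that a model instance needs, so it can be worth revisiting the resource quota when you change this setting.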

Learn more about Domino hardware tiers.