Domino supports AWS Trainium and AWS Inferentia, cost-efficient and energy-efficient AWS-designed processors that accelerate deep-learning model training and AI inference workloads. With the AWS Neuron SDK, you can reuse your existing code on these accelerators. Learn how to set up Trainium and Inferentia accelerators in your Domino deployment.

Setup involves the following:

- Node group creation: Create a new node group for Trainium and Inferentia instances.
- Device plugin configuration: Deploy the Neuron device plugin, which advertises the accelerators to Kubernetes as schedulable resources.
- Hardware tier setup: Enable Domino users to run their workloads on Trainium and Inferentia instances.
- Environment configuration: Set up the necessary development tools and software libraries.

To use AWS accelerators, create a new node group that:

- Uses one of the instance types in the following table
- Uses the GPU-enabled AMI for your EKS version
- Has a unique node pool label identifying its accelerator type

| Instance type | vCPU | Memory (GiB) | Neuron devices (aws.amazon.com/neuron) | Total Neuron memory (GiB) |
|---|---|---|---|---|
| inf1.xlarge | 4 | 8 | 1 | 8 |
| inf1.2xlarge | 8 | 16 | 1 | 8 |
| inf1.6xlarge | 24 | 48 | 4 | 32 |
| inf1.24xlarge | 96 | 192 | 16 | 128 |
| inf2.xlarge | 4 | 16 | 1 | 32 |
| inf2.8xlarge | 32 | 128 | 1 | 32 |
| inf2.24xlarge | 96 | 384 | 6 | 192 |
| inf2.48xlarge | 192 | 768 | 12 | 384 |
| trn1.2xlarge | 8 | 32 | 1 | 32 |
| trn1.32xlarge | 128 | 512 | 16 | 512 |
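When choosing an instance type, it can help to treat the table as data. The following is a minimal sketch, assuming you want the smallest instance (by vCPU count) in one family that meets a minimum Neuron memory or host memory requirement. `NeuronInstance` and `smallest_instance` are hypothetical names; the values are copied from the table.

```python
from typing import NamedTuple, Optional


class NeuronInstance(NamedTuple):
    name: str
    vcpu: int
    memory_gib: int
    neuron_devices: int     # value advertised as aws.amazon.com/neuron
    neuron_memory_gib: int  # total accelerator memory


# Values transcribed from the instance type table.
INSTANCES = [
    NeuronInstance("inf1.xlarge", 4, 8, 1, 8),
    NeuronInstance("inf1.2xlarge", 8, 16, 1, 8),
    NeuronInstance("inf1.6xlarge", 24, 48, 4, 32),
    NeuronInstance("inf1.24xlarge", 96, 192, 16, 128),
    NeuronInstance("inf2.xlarge", 4, 16, 1, 32),
    NeuronInstance("inf2.8xlarge", 32, 128, 1, 32),
    NeuronInstance("inf2.24xlarge", 96, 384, 6, 192),
    NeuronInstance("inf2.48xlarge", 192, 768, 12, 384),
    NeuronInstance("trn1.2xlarge", 8, 32, 1, 32),
    NeuronInstance("trn1.32xlarge", 128, 512, 16, 512),
]


def smallest_instance(family: str, min_neuron_memory_gib: int = 0,
                      min_memory_gib: int = 0) -> Optional[NeuronInstance]:
    """Return the instance in `family` with the fewest vCPUs that meets both minimums."""
    candidates = [
        i for i in INSTANCES
        if i.name.split(".")[0] == family
        and i.neuron_memory_gib >= min_neuron_memory_gib
        and i.memory_gib >= min_memory_gib
    ]
    # None when no instance in the family is large enough.
    return min(candidates, key=lambda i: i.vcpu, default=None)


print(smallest_instance("inf2", min_neuron_memory_gib=64).name)  # inf2.24xlarge
```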

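For the cluster autoscaler to scale your Neuron-based node group successfully, you must tag its Auto Scaling groups with the Neuron device resource template, such as the `k8s.io/cluster-autoscaler/node-template/resources/aws.amazon.com/neuron` tag in the following table. When a node group has scaled down to zero nodes, these node-template tags are how the autoscaler learns which resources and labels a new node would provide.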

| Key | Value | Tag new instances |
|---|---|---|
| Name | inferentia-test-domino-trn1-Node | Yes |
| alpha.eksctl.io/cluster-name | inferentia-test | Yes |
| alpha.eksctl.io/eksctl-version | 0.155.0 | Yes |
| alpha.eksctl.io/nodegroup-name | domino-trn1 | Yes |
| alpha.eksctl.io/nodegroup-type | unmanaged | Yes |
| aws:cloudformation:logical-id | NodeGroup | Yes |
| aws:cloudformation:stack-id | arn:aws:cloudformation:us-west-2:873872646799:stack/eksctl-inferentia-test-n… | Yes |
| aws:cloudformation:stack-name | eksctl-inferentia-test-nodegroup-domino-trn1 | Yes |
| eksctl.cluster.k8s.io/v1alpha1/cluster-name | inferentia-test | Yes |
| eksctl.io/v1alpha2/nodegroup-name | domino-trn1 | Yes |
| k8s.io/cluster-autoscaler/enabled | true | Yes |
| k8s.io/cluster-autoscaler/inferentia-test | owned | Yes |
| k8s.io/cluster-autoscaler/node-template/label/dominodatalab.com/node-pool | trainium | Yes |
| k8s.io/cluster-autoscaler/node-template/resources/aws.amazon.com/neuron | 1 | Yes |
| kubernetes.io/cluster/inferentia-test | owned | Yes |
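The autoscaler-related tags in the table follow a fixed pattern, so they can be derived from the cluster name, the instance type, and the node pool label. The following is a minimal sketch that reproduces those tags; the device counts come from the instance type table, and `autoscaler_tags` and `NEURON_DEVICES` are hypothetical names. The remaining tags (`alpha.eksctl.io/*`, `aws:cloudformation:*`, and so on) appear to be added by eksctl and CloudFormation and are not generated here.

```python
# aws.amazon.com/neuron device counts per instance type, from the instance table.
NEURON_DEVICES = {
    "inf1.xlarge": 1, "inf1.2xlarge": 1, "inf1.6xlarge": 4, "inf1.24xlarge": 16,
    "inf2.xlarge": 1, "inf2.8xlarge": 1, "inf2.24xlarge": 6, "inf2.48xlarge": 12,
    "trn1.2xlarge": 1, "trn1.32xlarge": 16,
}

TEMPLATE_PREFIX = "k8s.io/cluster-autoscaler/node-template"


def autoscaler_tags(cluster_name: str, instance_type: str, node_pool: str) -> dict:
    """Build the cluster-autoscaler ASG tags for a Neuron-based node group."""
    if instance_type not in NEURON_DEVICES:
        raise ValueError(f"unsupported Neuron instance type: {instance_type}")
    return {
        "k8s.io/cluster-autoscaler/enabled": "true",
        f"k8s.io/cluster-autoscaler/{cluster_name}": "owned",
        # Node label a new node will carry; lets pods targeting the node pool trigger scale-up.
        f"{TEMPLATE_PREFIX}/label/dominodatalab.com/node-pool": node_pool,
        # Neuron devices a new node will advertise; ASG tag values are strings.
        f"{TEMPLATE_PREFIX}/resources/aws.amazon.com/neuron": str(NEURON_DEVICES[instance_type]),
    }


for key, value in autoscaler_tags("inferentia-test", "trn1.2xlarge", "trainium").items():
    print(f"{key} = {value}")
```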

Example eksctl node group config

Here’s an example eksctl node group config for Neuron-based node groups:

```yaml
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: inferentia-test
  region: us-west-2

nodeGroups:
  - name: domino-trn1
    instanceType: trn1.2xlarge
    minSize: 0
    maxSize: 3
    desiredCapacity: 1
    volumeSize: 200
    volumeType: gp3
    availabilityZones: ["us-west-2a"]
    labels:
      "dominodatalab.com/node-pool": "trainium"
    tags:
      k8s.io/cluster-autoscaler/node-template/label/dominodatalab.com/node-pool: trainium
      k8s.io/cluster-autoscaler/node-template/resources/aws.amazon.com/neuron: "1"
    iam:
      withAddonPolicies:
        ebs: true
        efs: true

  - name: domino-inf1
    instanceType: inf1.2xlarge
    minSize: 0
    maxSize: 3
    desiredCapacity: 1
    volumeSize: 200
    volumeType: gp3
    availabilityZones: ["us-west-2a"]
    labels:
      "dominodatalab.com/node-pool": "inferentia"
    tags:
      k8s.io/cluster-autoscaler/node-template/label/dominodatalab.com/node-pool: inferentia
      k8s.io/cluster-autoscaler/node-template/resources/aws.amazon.com/neuron: "1"
    iam:
      withAddonPolicies:
        ebs: true
        efs: true

  - name: domino-inf2
    instanceType: inf2.xlarge
    minSize: 0
    maxSize: 3
    desiredCapacity: 1
    volumeSize: 200
    volumeType: gp3
    availabilityZones: ["us-west-2a"]
    labels:
      "dominodatalab.com/node-pool": "inferentia2"
    tags:
      k8s.io/cluster-autoscaler/node-template/label/dominodatalab.com/node-pool: inferentia2
      k8s.io/cluster-autoscaler/node-template/resources/aws.amazon.com/neuron: "1"
    iam:
      withAddonPolicies:
        ebs: true
        efs: true
```

Once your nodes have joined the cluster, deploy the Neuron device plugin DaemonSet using the following specification. You must use device plugin version 2.17.3.0 or later so that the plugin processes Domino workloads correctly.

To deploy this DaemonSet:

- Save the following specification to a file (such as `neuron-device-plugin-ds.yaml`).
- Apply the specification with `kubectl apply -f neuron-device-plugin-ds.yaml`.

```yaml
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: neuron-device-plugin
rules:
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - update
      - patch
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
      - update
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: neuron-device-plugin
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: neuron-device-plugin
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: neuron-device-plugin
subjects:
  - kind: ServiceAccount
    name: neuron-device-plugin
    namespace: kube-system
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: neuron-device-plugin-daemonset
  namespace: kube-system
spec:
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      name: neuron-device-plugin-ds
  template:
    metadata:
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ""
      creationTimestamp: null
      labels:
        name: neuron-device-plugin-ds
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: node.kubernetes.io/instance-type
                    operator: In
                    values:
                      - inf1.xlarge
                      - inf1.2xlarge
                      - inf1.6xlarge
                      - inf1.24xlarge
                      - inf2.xlarge
                      - inf2.8xlarge
                      - inf2.24xlarge
                      - inf2.48xlarge
                      - trn1.2xlarge
                      - trn1.32xlarge
      containers:
        - env:
            - name: KUBECONFIG
              value: /etc/kubernetes/kubelet.conf
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: spec.nodeName
          image: public.ecr.aws/neuron/neuron-device-plugin:2.17.3.0
          imagePullPolicy: Always
          name: k8s-neuron-device-plugin-ctr
          resources: {}
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              drop:
                - ALL
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /var/lib/kubelet/device-plugins
              name: device-plugin
            - mountPath: /run
              name: infa-map
      dnsPolicy: ClusterFirst
      priorityClassName: system-node-critical
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      serviceAccount: neuron-device-plugin
      serviceAccountName: neuron-device-plugin
      terminationGracePeriodSeconds: 30
      tolerations:
        - key: CriticalAddonsOnly
          operator: Exists
        - effect: NoSchedule
          key: aws.amazon.com/neuron
          operator: Exists
      volumes:
        - hostPath:
            path: /var/lib/kubelet/device-plugins
            type: ""
          name: device-plugin
        - hostPath:
            path: /run
            type: ""
          name: infa-map
  updateStrategy:
    rollingUpdate:
      maxSurge: 0
      maxUnavailable: 1
    type: RollingUpdate
```
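Because the device plugin must be at version 2.17.3.0 or later, a quick check of the image tag can catch an outdated manifest before it reaches the cluster. The following is a minimal sketch, assuming the image reference ends in a plain dotted numeric tag (as `public.ecr.aws/neuron/neuron-device-plugin:2.17.3.0` does) and carries no digest; `plugin_version_ok` is a hypothetical helper.

```python
MIN_PLUGIN_VERSION = (2, 17, 3, 0)


def plugin_version_ok(image, minimum=MIN_PLUGIN_VERSION):
    """True if the image tag is a dotted numeric version at or above `minimum`."""
    _, sep, tag = image.rpartition(":")
    # No tag at all, or the last ':' belongs to a registry port (e.g. host:5000/repo).
    if not sep or "/" in tag:
        return False
    try:
        version = tuple(int(part) for part in tag.split("."))
    except ValueError:
        return False  # non-numeric tags such as "latest" cannot be compared
    # Pad with zeros so that 2.17.3 compares equal to 2.17.3.0.
    width = max(len(version), len(minimum))
    padded = version + (0,) * (width - len(version))
    floor = tuple(minimum) + (0,) * (width - len(minimum))
    return padded >= floor


print(plugin_version_ok("public.ecr.aws/neuron/neuron-device-plugin:2.17.3.0"))  # True
```

To check a running cluster instead of the file, you could pass it the image reported by `kubectl get daemonset neuron-device-plugin-daemonset -n kube-system -o jsonpath='{.spec.template.spec.containers[0].image}'`.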

Once the device plugin DaemonSet is deployed, run `kubectl describe node` to confirm that you see device plugin daemons running on your Neuron-based instances and that they advertise `aws.amazon.com/neuron` resources to Kubernetes.
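`kubectl describe node` is convenient for inspecting one node. To check every node at once, the following minimal sketch parses the JSON from `kubectl get nodes -o json` and lists the nodes whose allocatable resources include `aws.amazon.com/neuron`, together with their Domino node pool label. `neuron_nodes` and `fetch_nodes` are hypothetical helpers, and `fetch_nodes` assumes `kubectl` is on `PATH` and pointed at your cluster.

```python
import json
import subprocess

NEURON_RESOURCE = "aws.amazon.com/neuron"
NODE_POOL_LABEL = "dominodatalab.com/node-pool"


def neuron_nodes(nodes_json: dict) -> list:
    """Return (name, node pool, Neuron device count) for nodes advertising Neuron devices."""
    found = []
    for node in nodes_json.get("items", []):
        # Allocatable quantities are strings in the Kubernetes API, e.g. "1".
        allocatable = node.get("status", {}).get("allocatable", {})
        count = int(allocatable.get(NEURON_RESOURCE, "0"))
        if count == 0:
            continue  # not a Neuron node, or the device plugin is not running on it yet
        labels = node["metadata"].get("labels", {})
        found.append((node["metadata"]["name"], labels.get(NODE_POOL_LABEL), count))
    return found


def fetch_nodes() -> dict:
    """Run `kubectl get nodes -o json` against the current kube context."""
    out = subprocess.run(["kubectl", "get", "nodes", "-o", "json"],
                         check=True, capture_output=True, text=True).stdout
    return json.loads(out)
```

A typical call is `for name, pool, count in neuron_nodes(fetch_nodes()): print(name, pool, count)`. A Neuron node missing from the result usually means the device plugin pod is not running on it.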

The following output is an example of a correctly configured Neuron-based node. Note the Neuron device plugin daemon present on the node, the advertised aws.amazon.com/neuron resource, and the Domino node pool label identifying the node as Trainium.

```text
Name:               ip-192-168-42-179.us-west-2.compute.internal
Roles:              <none>
Labels:             alpha.eksctl.io/cluster-name=inferentia-test
                    alpha.eksctl.io/instance-id=i-00549360c9911f4f1
                    alpha.eksctl.io/nodegroup-name=domino-trn1
                    beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/instance-type=trn1.2xlarge
                    beta.kubernetes.io/os=linux
                    dominodatalab.com/node-pool=trainium
                    failure-domain.beta.kubernetes.io/region=us-west-2
                    failure-domain.beta.kubernetes.io/zone=us-west-2b
                    k8s.io/cloud-provider-aws=7c4bfb478ecbb2400bead13fc878a3a1
                    kubernetes.io/arch=amd64
                    kubernetes.io/hostname=ip-192-168-42-179.us-west-2.compute.internal
                    kubernetes.io/os=linux
                    node-lifecycle=on-demand
                    node.kubernetes.io/instance-type=trn1.2xlarge
                    topology.ebs.csi.aws.com/zone=us-west-2b
                    topology.kubernetes.io/region=us-west-2
                    topology.kubernetes.io/zone=us-west-2b
... <snip> ...
Capacity:
  aws.amazon.com/neuron:        1
  aws.amazon.com/neuroncore:    2
  aws.amazon.com/neurondevice:  1
  cpu:                          8
  ephemeral-storage:            209702892Ki
  hugepages-1Gi:                0
  hugepages-2Mi:                0
  memory:                       32338380Ki
  pods:                         58
  smarter-devices/fuse:         20
Allocatable:
  aws.amazon.com/neuron:        1
  aws.amazon.com/neuroncore:    2
  aws.amazon.com/neurondevice:  1
  cpu:                          7910m
  ephemeral-storage:            192188443124
  hugepages-1Gi:                0
  hugepages-2Mi:                0
  memory:                       31321548Ki
  pods:                         58
  smarter-devices/fuse:         20
System Info:
  Machine ID:                 ec240d0453aef36a07d5248a753946c5
  System UUID:                ec240d04-53ae-f36a-07d5-248a753946c5
  Boot ID:                    9d066b81-273b-406d-ad0f-6375adebca5d
  Kernel Version:             5.4.253-167.359.amzn2.x86_64
  OS Image:                   Amazon Linux 2
  Operating System:           linux
  Architecture:               amd64
  Container Runtime Version:  containerd://1.6.19
  Kubelet Version:            v1.26.7-eks-8ccc7ba
  Kube-Proxy Version:         v1.26.7-eks-8ccc7ba
ProviderID:                   aws:///us-west-2b/i-00549360c9911f4f1
Non-terminated Pods:          (11 in total)
  Namespace        Name                                  CPU Requests  CPU Limits   Memory Requests  Memory Limits  Age
  ---------        ----                                  ------------  ----------   ---------------  -------------  ---
  domino-compute   run-650b32d5eb80df0d60cec514-kgcc4    5610m (70%)   6600m (83%)  28174Mi (92%)    28174Mi (92%)  3h17m
  domino-platform  aws-ebs-csi-driver-node-lwp9g         30m (0%)      200m (2%)    80Mi (0%)        456Mi (1%)     3h10m
  domino-platform  aws-efs-csi-driver-node-kk7mb         20m (0%)      200m (2%)    40Mi (0%)        200Mi (0%)     3h10m
  domino-platform  docker-registry-cert-mgr-k67bb        0 (0%)        0 (0%)       0 (0%)           0 (0%)         3h10m
  domino-platform  fluentd-ljkhk                         200m (2%)     1 (12%)      600Mi (1%)       2Gi (6%)       3h10m
  domino-platform  image-cache-agent-7ztv6               0 (0%)        0 (0%)       0 (0%)           0 (0%)         3h10m
  domino-platform  prometheus-node-exporter-vr9bc        0 (0%)        0 (0%)       0 (0%)           0 (0%)         3h10m
  domino-platform  smarter-device-manager-ncsr6          10m (0%)      100m (1%)    15Mi (0%)        15Mi (0%)      3h10m
  kube-system      aws-node-q89xl                        25m (0%)      0 (0%)       0 (0%)           0 (0%)         3h10m
  kube-system      kube-proxy-b2qpv                      100m (1%)     0 (0%)       0 (0%)           0 (0%)         3h10m
  kube-system      neuron-device-plugin-daemonset-h8d7k  0 (0%)        0 (0%)       0 (0%)           0 (0%)         3h10m
```

Next, you need to make the node group accessible to your users by creating a Domino hardware tier that does the following:

- Targets the node pool label you've given to your Neuron-based nodes
- Requests a suitable amount of the node's vCPU and memory, leaving room for system overhead
- Requests a custom GPU resource named `aws.amazon.com/neuron`

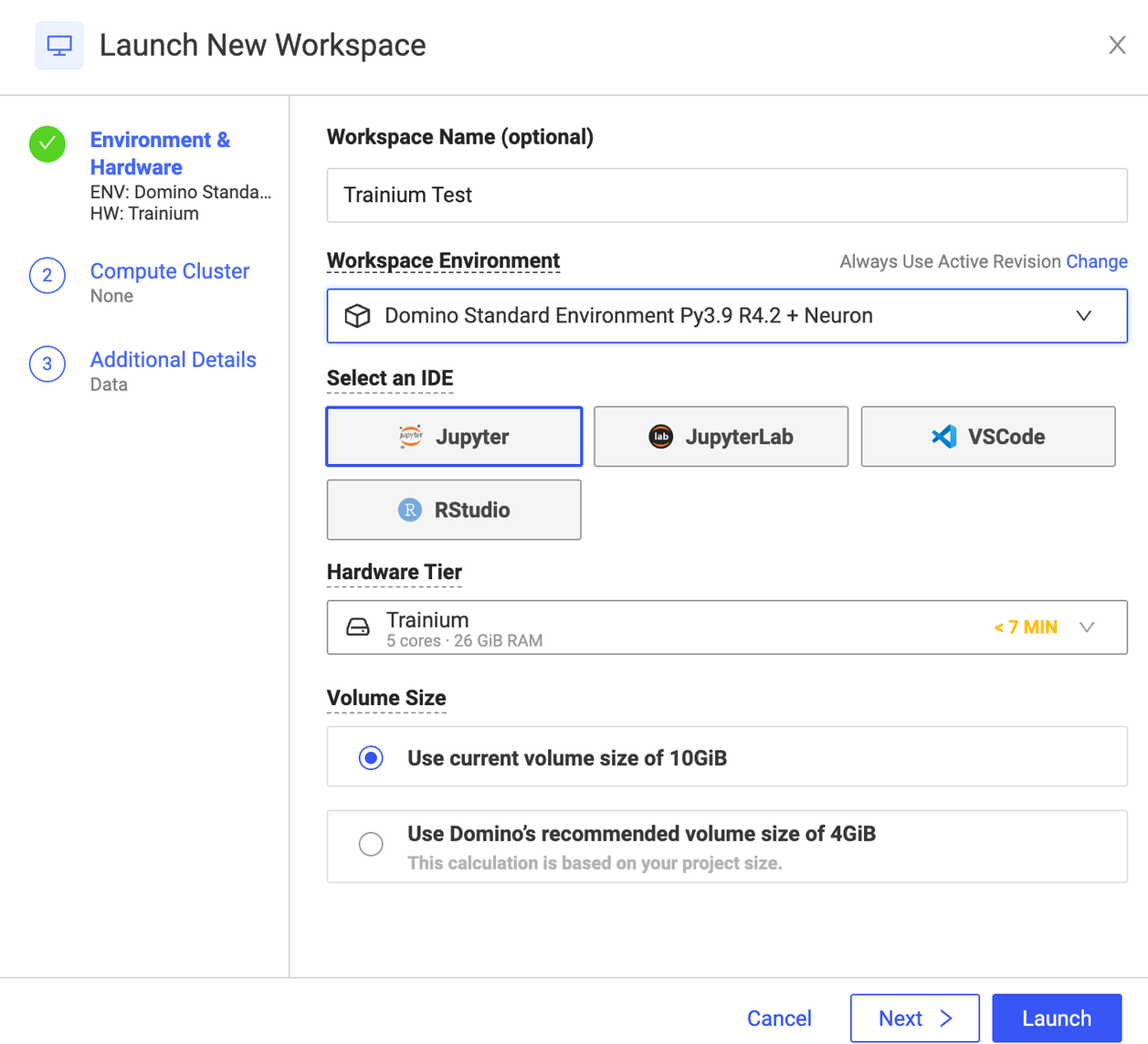

See the following example:

| Setting | Value |
|---|---|
| Cluster Type | Kubernetes |
| ID | trainium |
| Name | Trainium |
| Cores Requested | 5.0 |
| Memory Requested (GiB) | 26.0 |
| Number of GPUs | 1 |
| Use custom GPU name | Yes |
| GPU Resource Name | aws.amazon.com/neuron |
| Cents Per Minute Per Run | 0.0 |
| Node Pool | trainium |
| Restrict to compute cluster | Options: Spark, Ray, Dask, Mpi |
| Maximum Simultaneous Executions | |
| Overprovisioning Pods | 0 |
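To see why 5.0 cores and 26 GiB is a reasonable request on a trn1.2xlarge, you can subtract the requests of the pods already running on the node from its allocatable CPU and memory. The following minimal sketch uses the numbers from the earlier `kubectl describe node` output. One assumption is worth stating loudly: in that output, the run pod requests 5610m CPU and 28174Mi memory for a tier of 5.0 cores and 26 GiB, and the sketch treats the difference (610m, 1550Mi) as fixed per-execution overhead. That figure is inferred from a single example, not a documented Domino constant. `tier_budget` and `tier_fits` are hypothetical helpers.

```python
def tier_budget(allocatable_cpu_m, allocatable_mem_ki, node_pod_requests, run_overhead=(0, 0)):
    """Return (millicores, MiB) that a single execution's tier request can still use.

    node_pod_requests: (cpu_m, mem_mi) requests of the other pods on the node.
    run_overhead: assumed extra (cpu_m, mem_mi) added to each execution on top of
        the hardware tier request.
    """
    cpu_m = allocatable_cpu_m - sum(cpu for cpu, _ in node_pod_requests) - run_overhead[0]
    mem_mi = allocatable_mem_ki // 1024 - sum(mem for _, mem in node_pod_requests) - run_overhead[1]
    return cpu_m, mem_mi


def tier_fits(cores, memory_gib, budget):
    """True if a tier requesting `cores` and `memory_gib` fits within `budget`."""
    cpu_m, mem_mi = budget
    return cores * 1000 <= cpu_m and memory_gib * 1024 <= mem_mi


# Requests of the non-execution pods in the trn1.2xlarge example output.
EXAMPLE_POD_REQUESTS = [
    (30, 80),    # aws-ebs-csi-driver-node
    (20, 40),    # aws-efs-csi-driver-node
    (0, 0),      # docker-registry-cert-mgr
    (200, 600),  # fluentd
    (0, 0),      # image-cache-agent
    (0, 0),      # prometheus-node-exporter
    (10, 15),    # smarter-device-manager
    (25, 0),     # aws-node
    (100, 0),    # kube-proxy
    (0, 0),      # neuron-device-plugin-daemonset
]

# Allocatable cpu 7910m and memory 31321548Ki from the same output.
budget = tier_budget(7910, 31321548, EXAMPLE_POD_REQUESTS, run_overhead=(610, 1550))
print(budget)                      # (6915, 28302)
print(tier_fits(5.0, 26, budget))  # True
```

Under these assumptions, the example tier leaves roughly 1.9 cores and 1.6 GiB of slack, which absorbs small changes in DaemonSet requests without making the node unschedulable.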

The AWS Neuron SDK is designed to work with integrated frameworks such as PyTorch and TensorFlow. When setting up a Domino environment for a new version of Neuron or of an integrated framework, read the AWS Neuron documentation for that release first.

As an example, and to facilitate testing, here’s an environment definition for adding PyTorch Neuron to the Domino 5.7 Standard Environment (quay.io/domino/compute-environment-images:ubuntu20-py3.9-r4.3-domino5.7-standard):

# Configure Linux for Neuron repository updates

RUN sudo touch /etc/apt/sources.list.d/neuron.list

RUN echo "deb https://apt.repos.neuron.amazonaws.com focal main" | sudo tee -a /etc/apt/sources.list.d/neuron.list

RUN sudo wget -qO - https://apt.repos.neuron.amazonaws.com/GPG-PUB-KEY-AMAZON-AWS-NEURON.PUB | sudo apt-key add -

# Update OS packages

RUN sudo apt-get update -y

# Install Neuron Driver

RUN sudo apt-get install aws-neuronx-dkms=2.* -y

# Install Neuron Runtime

RUN sudo apt-get install aws-neuronx-collectives=2.* -y

RUN sudo apt-get install aws-neuronx-runtime-lib=2.* -y

# Install Neuron Tools

RUN sudo apt-get install aws-neuronx-tools=2.* -y

# Add PATH

RUN export PATH=/opt/aws/neuron/bin:$PATH

# pip installs

RUN python -m pip config set global.extra-index-url https://pip.repos.neuron.amazonaws.com

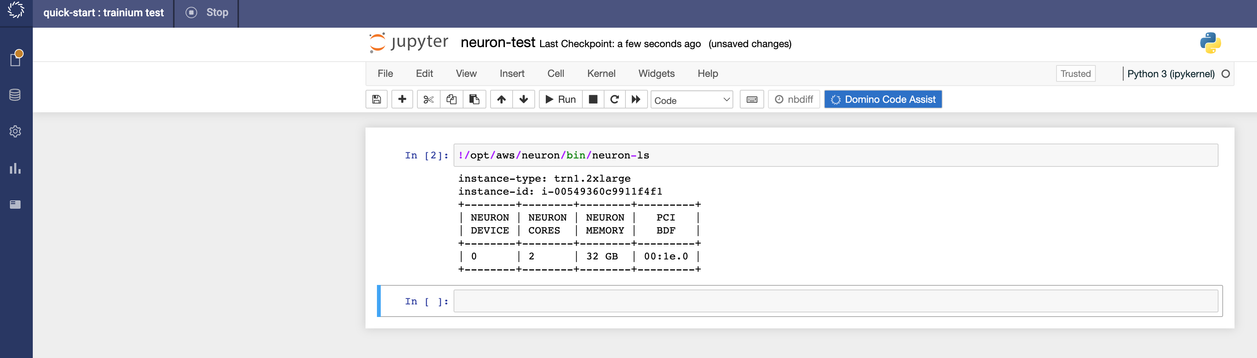

RUN python -m pip install --user neuronx-cc==2.* torch-neuronx torchvisionTo test your setup, start a Jupyter workspace using a Neuron-based hardware tier and Neuron-enabled Workspace Environment.

Once your workspace has started, open a Python notebook and execute a cell with the command `!/opt/aws/neuron/bin/neuron-ls` to see the mounted Neuron devices.
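If you prefer to run the same check from Python code rather than a shell escape, the following is a minimal sketch. It assumes the Neuron driver exposes devices inside the container as `/dev/neuron0`, `/dev/neuron1`, and so on; `neuron_device_names`, `list_neuron_devices`, and `run_neuron_ls` are hypothetical helpers.

```python
import re
import subprocess
from pathlib import Path

_DEVICE_NAME = re.compile(r"neuron(\d+)")


def neuron_device_names(names):
    """Keep names like 'neuron0', 'neuron1' and sort them by device index."""
    indexed = []
    for name in names:
        match = _DEVICE_NAME.fullmatch(name)
        if match:
            indexed.append((int(match.group(1)), name))
    return [name for _, name in sorted(indexed)]


def list_neuron_devices(dev_dir="/dev"):
    """Return the Neuron device files visible in this container."""
    return neuron_device_names(p.name for p in Path(dev_dir).iterdir())


def run_neuron_ls(path="/opt/aws/neuron/bin/neuron-ls"):
    """Return the text output of neuron-ls, the same command as the notebook cell."""
    return subprocess.run([path], check=True, capture_output=True, text=True).stdout
```

On a hardware tier that requests one `aws.amazon.com/neuron` device, you would expect `list_neuron_devices()` to return a single entry; an empty list suggests the device was not mounted into the workspace.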

You can now use the Neuron framework you’ve installed to invoke the mounted accelerator.
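Before invoking the accelerator, it can help to confirm that the packages from the environment definition are installed for the workspace's Python interpreter. The following minimal sketch uses the standard library's `importlib.metadata`; the package list mirrors the `pip install` line in the example environment, and `installed_versions` is a hypothetical helper.

```python
from importlib import metadata

# Distribution names installed by the example environment definition.
NEURON_PACKAGES = ("neuronx-cc", "torch-neuronx", "torchvision")


def installed_versions(packages=NEURON_PACKAGES):
    """Map each distribution name to its installed version, or None if it is missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for name, version in installed_versions().items():
    print(f"{name}: {version or 'NOT INSTALLED'}")
```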

For next steps, see the Getting Started with Neuron guide for your chosen framework.