
Note: This article only applies to Domino Cloud deployments.

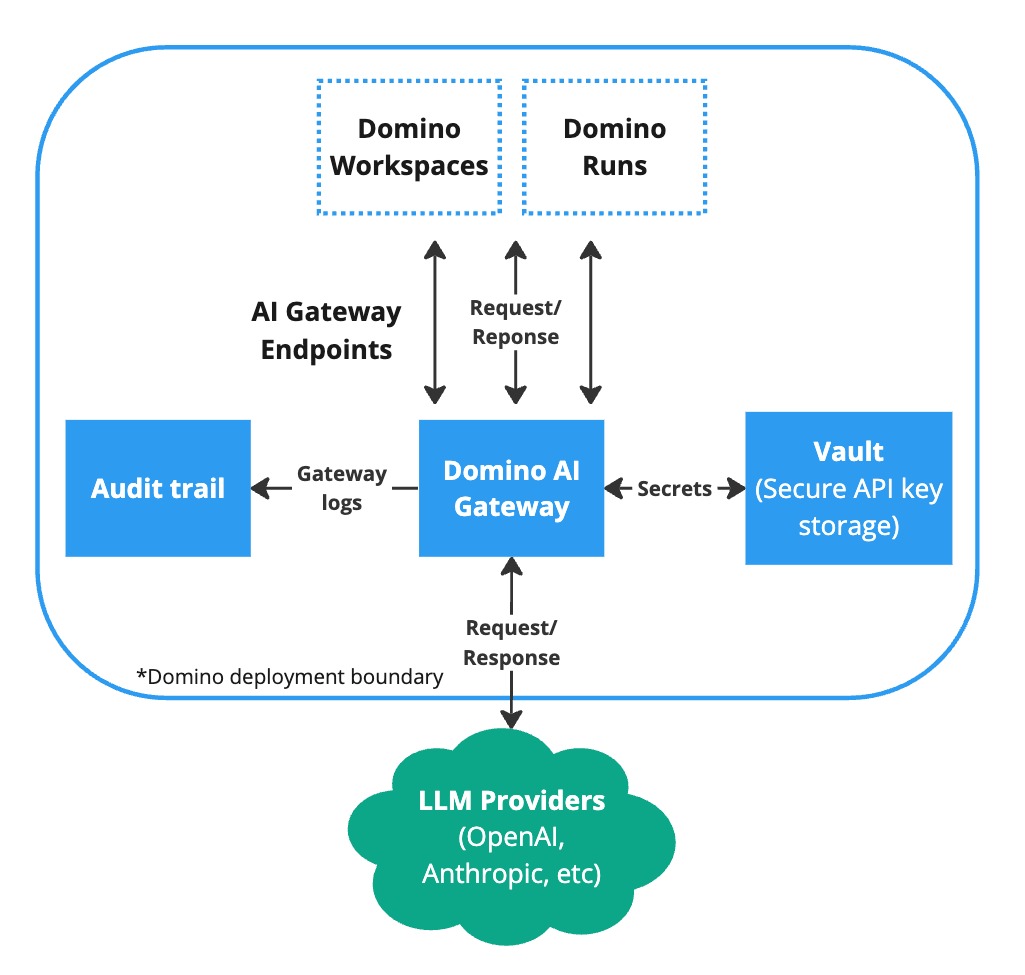

Admins can set up AI Gateway to give Domino users a secure, streamlined way to access external Large Language Models (LLMs) hosted by providers such as OpenAI or AWS Bedrock. Users get the benefits of provider-hosted models, and access follows security best practices.

AI Gateway provides the following benefits:

- Securely manage API keys to prevent leaks.
- Control user access to LLMs.
- Log LLM activity for auditing.
- Provide data scientists with a consistent, streamlined interface to multiple LLM providers.
- Integrate easily with existing MLflow projects, because AI Gateway is built on MLflow Deployments Server.

Endpoints are the core of AI Gateway. Each endpoint acts as a proxy for users: it forwards their requests to the specific model defined in the endpoint's configuration. This gives users a managed, secure way to connect to model providers.

Create an endpoint

To create an AI Gateway endpoint in Domino, use the `aigateway` endpoint of the Domino Platform API.

For example, the following curl command creates an AI Gateway endpoint named `completions` that uses OpenAI's `gpt-4` model and is available to all users in the Domino deployment:

```shell
curl -X POST "https://<deployment_url>/api/aigateway/v1/endpoints" \
  -H "X-Domino-Api-Key: $DOMINO_USER_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "endpointName": "completions",
    "endpointType": "llm/v1/completions",
    "endpointPermissions": {"isEveryoneAllowed": true, "userIds": []},
    "modelProvider": "openai",
    "modelName": "gpt-4",
    "modelConfig": {"openai_api_key": "<OpenAI_API_Key>"}
  }'
```
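For admins who script endpoint setup, the same create request can be sketched in Python using only the standard library. This is a minimal sketch, not an official client: `build_create_request` and `send` are hypothetical helper names, and the payload simply mirrors the curl example above. Pass the keys in from the environment rather than hardcoding them.

```python
import json
import urllib.request


def build_create_request(deployment_url: str, domino_api_key: str,
                         openai_api_key: str) -> urllib.request.Request:
    """Build (but do not send) the POST request that creates the endpoint."""
    # Payload mirrors the curl example; endpointName must be unique.
    payload = {
        "endpointName": "completions",
        "endpointType": "llm/v1/completions",
        "endpointPermissions": {"isEveryoneAllowed": True, "userIds": []},
        "modelProvider": "openai",
        "modelName": "gpt-4",
        "modelConfig": {"openai_api_key": openai_api_key},
    }
    return urllib.request.Request(
        url=f"{deployment_url.rstrip('/')}/api/aigateway/v1/endpoints",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "X-Domino-Api-Key": domino_api_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send a prepared request and return the raw response body as text."""
    with urllib.request.urlopen(req) as resp:
        return resp.read().decode("utf-8")
```

For example, `send(build_create_request("https://<deployment_url>", os.environ["DOMINO_USER_API_KEY"], os.environ["OPENAI_API_KEY"]))` would submit the request; `OPENAI_API_KEY` is an assumed variable name. Building the `Request` separately from sending it keeps the payload easy to inspect before anything reaches the API.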

Important: The `endpointName` must be unique.

See MLflow’s Deployments Server documentation for the list of supported LLM providers and their provider-specific configuration parameters.

Once an endpoint is created, authorized users can query it from any Workspace or Run using the standard MLflow Deployments client API. For details, see Use Gateway LLMs.
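A query from a Workspace or Run might look like the following minimal sketch. It assumes the endpoint uses the `llm/v1/completions` type from the example above and that the Deployments Server URI is available in an environment variable named `DOMINO_MLFLOW_DEPLOYMENTS` (an assumption; confirm the exact name in Use Gateway LLMs). `get_deploy_client` and `predict` belong to MLflow's Deployments client API; `completion_inputs`, `first_completion_text`, and `query_endpoint` are hypothetical helpers.

```python
import os


def completion_inputs(prompt: str, max_tokens: int = 100,
                      temperature: float = 0.0) -> dict:
    """Build the request body for an llm/v1/completions endpoint."""
    return {"prompt": prompt, "max_tokens": max_tokens, "temperature": temperature}


def first_completion_text(response: dict) -> str:
    """Return the generated text from a completions-style response."""
    return response["choices"][0]["text"]


def query_endpoint(endpoint_name: str, prompt: str) -> str:
    # Imported lazily so the helpers above work without MLflow installed.
    from mlflow.deployments import get_deploy_client

    # Assumption: the gateway URI is exposed in this environment variable.
    client = get_deploy_client(os.environ["DOMINO_MLFLOW_DEPLOYMENTS"])
    response = client.predict(endpoint=endpoint_name,
                              inputs=completion_inputs(prompt))
    return first_completion_text(response)
```

For example, `query_endpoint("completions", "Summarize this ticket in one sentence.")` returns the model's text. The provider API key never appears in the user's code, because the endpoint holds it.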

Endpoint permission management

AI Gateway endpoints can be made accessible to everyone or only to a specific set of users or organizations. In the API, you set this through the `endpointPermissions` field of the endpoint creation and update requests. For example, the curl request above sets `isEveryoneAllowed` to `true`, which makes the endpoint available to all users.
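The two permission modes can be captured in a small helper. This is a sketch under assumptions: `endpoint_permissions` is a hypothetical name, and it assumes that setting `isEveryoneAllowed` to `false` limits access to the listed `userIds`. It covers users only, because the field used for organizations is not shown here.

```python
from typing import Iterable, Optional


def endpoint_permissions(user_ids: Optional[Iterable[str]] = None) -> dict:
    """Build an endpointPermissions object.

    With no argument, the endpoint is open to everyone (as in the curl example).
    With user IDs, only those users are allowed (assumed semantics).
    """
    if user_ids is None:
        return {"isEveryoneAllowed": True, "userIds": []}
    ids = list(user_ids)
    if not ids:
        # Guard against a restricted endpoint that no one could call.
        raise ValueError("a restricted endpoint needs at least one user ID")
    return {"isEveryoneAllowed": False, "userIds": ids}
```

The resulting object goes under `endpointPermissions` in a creation or update request body. Rejecting an empty user list is a design choice, not an API rule.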

Secure credential storage

When creating an endpoint, you will usually need to pass a provider-specific API key (such as OpenAI’s `openai_api_key`) or secret access key (such as AWS Bedrock’s `aws_secret_access_key`). Domino automatically stores these keys in its central vault service, and they are never exposed to users who interact with AI Gateway endpoints.

The secure credential store helps prevent API key leaks and lets you manage API keys centrally, rather than distributing plain-text keys to users.

Update an endpoint

To update an AI Gateway endpoint, use the `aigateway` endpoint of the Domino Platform API. For example, after creating the endpoint above, you can change its model with the following command:

```shell
curl -X PATCH "https://<deployment_url>/api/aigateway/v1/endpoints/completions" \
  -H "X-Domino-Api-Key: <Domino_API_Key>" \
  -H "Content-Type: application/json" \
  -d '{"modelName": "gpt-3.5-turbo"}'
```

To update permissions, you need the relevant user IDs, which you can retrieve from the Endpoint API.
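The PATCH request can also be built in Python. In this minimal sketch, `build_update_request` is a hypothetical helper, and `changes` holds only the fields to modify, assuming the API applies partial updates as the curl example suggests.

```python
import json
import urllib.parse
import urllib.request


def build_update_request(deployment_url: str, domino_api_key: str,
                         endpoint_name: str, changes: dict) -> urllib.request.Request:
    """Build (but do not send) a PATCH request that updates one endpoint."""
    # Quote the name so unusual characters cannot break the URL path.
    name = urllib.parse.quote(endpoint_name, safe="")
    return urllib.request.Request(
        url=f"{deployment_url.rstrip('/')}/api/aigateway/v1/endpoints/{name}",
        data=json.dumps(changes).encode("utf-8"),
        headers={
            "X-Domino-Api-Key": domino_api_key,
            "Content-Type": "application/json",
        },
        method="PATCH",
    )
```

Send the request with `urllib.request.urlopen(req)`. The same helper can update permissions by passing a body such as `{"endpointPermissions": {"isEveryoneAllowed": False, "userIds": ["<user_id>"]}}`.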

Audit endpoint activity

Domino logs all AI Gateway endpoint activity to its central audit system. To view this activity, go to Admin > Advanced > MongoDB and run the following query:

```javascript
db.audit_trail.find({ kind: "AccessGatewayEndpoint" })
```

You can refine this query to filter records by fields such as user or endpoint name:

```javascript
db.audit_trail.find({ kind: "AccessGatewayEndpoint", "metadata.accessedByUsername": "johndoe" })
db.audit_trail.find({ kind: "AccessGatewayEndpoint", "metadata.endpointName": "openai_completions" })
```

Learn how to use AI Gateway endpoints as a Domino user.
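The two audit refinements can be combined. MongoDB matches only documents that satisfy every field in a filter, so including both keys returns one user's calls to one endpoint. The hypothetical `audit_filter` helper below is a sketch for building such filters; passing its result through `json.dumps` produces a document you can paste into `db.audit_trail.find(...)`.

```python
from typing import Optional


def audit_filter(username: Optional[str] = None,
                 endpoint_name: Optional[str] = None) -> dict:
    """Build a MongoDB filter for AI Gateway audit records."""
    query = {"kind": "AccessGatewayEndpoint"}
    # Each optional key narrows the match; MongoDB ANDs all fields.
    if username is not None:
        query["metadata.accessedByUsername"] = username
    if endpoint_name is not None:
        query["metadata.endpointName"] = endpoint_name
    return query
```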