Note: These specifications are minimum requirements. See Sizing infrastructure for Domino for more information. Your Domino representative can give you a more accurate estimate of your organization’s needs.

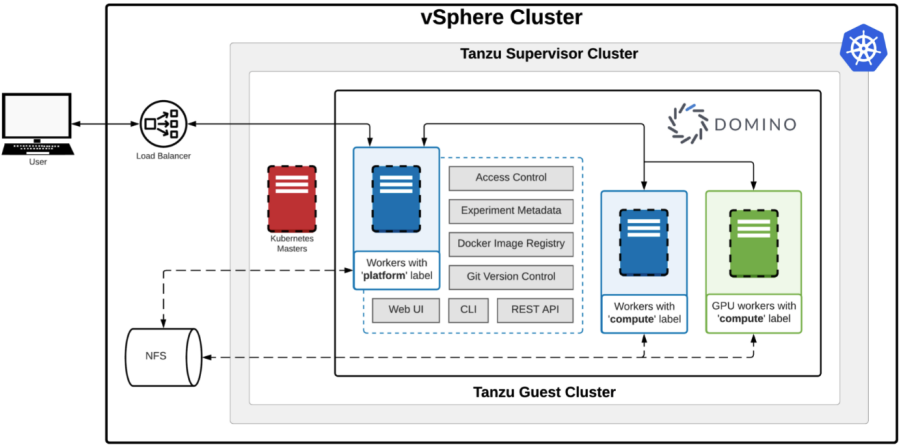

These topics describe how to install Domino on VMware vSphere with Tanzu. Tanzu is a highly abstracted Kubernetes platform that can be deployed on a variety of infrastructure, or even across hosts on different kinds of infrastructure.

When you deploy Domino on Tanzu, you must have the following services and components:

There must be enough total cluster capacity for vSphere to provision the virtual machines (VMs) that meet Domino’s Node Requirements.

The solution is designed to run on NVIDIA NGC-Ready and NVIDIA-Certified Systems. The reference architecture for this guide was deployed on the following certified hardware configuration:

- 3 x Dell EMC PowerEdge R750 NVIDIA-Certified server
- 2 x Intel Xeon Gold 6338 CPU @ 2.00GHz (32C/64T)
- 8 x 64 GB RAM (512 GB)
- 1 x Dell Ent NVMe P5600 MU U.2 1.6TB as vSAN Cache Disk
- 1 x Dell Ent NVMe CM6 RI 7.68TB as vSAN Capacity Disk
- 1 x Broadcom NetXtreme E-Series Advanced Dual-port 25Gb
- 1 x BOSS-S2 (Embedded) AHCI controller with 240GB SATA as ESXi booting device
- 2 x NVIDIA Ampere A100 PCIE 40GB
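As a rough capacity check, the aggregate resources of this reference hardware can be tallied with shell arithmetic. This is only a sketch: it assumes the CPU, memory, disk, and GPU entries in the list above are per-server quantities, the variable names are illustrative and not part of any Domino or VMware tooling, and no hypervisor or vSAN overhead is subtracted.

```shell
#!/bin/sh
# Reference hardware from the list above.
# Assumption: every entry after the server line is a per-server quantity.
servers=3
cpus_per_server=2
cores_per_cpu=32
threads_per_cpu=64
ram_gb_per_server=512          # 8 x 64 GB
gpus_per_server=2
capacity_gb_per_server=7680    # one 7.68 TB vSAN capacity disk, in decimal GB

# Aggregate raw totals across the cluster (no overhead subtracted).
total_cores=$((servers * cpus_per_server * cores_per_cpu))
total_threads=$((servers * cpus_per_server * threads_per_cpu))
total_ram_gb=$((servers * ram_gb_per_server))
total_gpus=$((servers * gpus_per_server))
total_capacity_gb=$((servers * capacity_gb_per_server))

echo "Physical cores:    $total_cores"
echo "Hardware threads:  $total_threads"
echo "RAM (GB):          $total_ram_gb"
echo "GPUs:              $total_gpus"
echo "Raw vSAN capacity: $total_capacity_gb GB"
```

Under those assumptions the cluster offers 192 physical cores, 1536 GB of RAM, and 6 GPUs before overhead. Compare these raw totals with the sum of the node sizes you plan to provision.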

Virtualization and the Kubernetes cluster are provided by the VMware vSphere with Tanzu Kubernetes Grid Service.

Our reference solution required vSphere Hypervisor (ESXi) version 7.0U3c or later and vCenter version 7.0.3 or later.

For details on preparing vSphere for Tanzu, please refer to the Deploy an AI-Ready Enterprise Platform on VMware vSphere 7 with VMware Tanzu Kubernetes Grid Service document.

The Domino Enterprise MLOps Platform requires access to persistent storage that is attached to the Kubernetes cluster. In general, automatic provisioning of persistent volumes must be configured. To import, export, and use Domino Datasets, the Domino platform also requires access to a persistent shared file system or object store. The recommended storage option when deploying to VMware vSphere is VMware vSAN, preferably running on vSAN Ready Nodes.
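One way to confirm that automatic provisioning works is to create a small test PersistentVolumeClaim and check that it binds. The sketch below only writes the manifest to a file. The claim name `domino-storage-check`, the file name, and the storage class `vsan-default-storage-policy` are placeholders chosen for illustration; substitute a storage class that actually exists in your cluster (`kubectl get storageclass` lists them).

```shell
#!/bin/sh
# Write a minimal test PVC manifest.
# All names below are hypothetical placeholders, not values required by Domino.
cat > domino-storage-check.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: domino-storage-check
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: vsan-default-storage-policy
  resources:
    requests:
      storage: 1Gi
EOF

# With cluster access, apply the manifest, inspect the claim, then clean up:
#   kubectl apply -f domino-storage-check.yaml
#   kubectl get pvc domino-storage-check
#   kubectl delete -f domino-storage-check.yaml
```

If the storage class uses `WaitForFirstConsumer` volume binding, the claim stays `Pending` until a pod mounts it, so a pending claim alone does not prove that provisioning is broken.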

Other storage options include:

- NFS
- vCloud Director / Cloudian
- NetApp ONTAP
- Ceph

If you use NVIDIA GPUs, the following are also required:

Before you add a physical node to the vSphere cluster, install the NVIDIA Virtual GPU (vGPU) software on that ESXi host. The NVIDIA vGPU Host Driver is available as a vSphere Installation Bundle (VIB) and can be downloaded from your NVIDIA AI Enterprise NGC Registry.

NVIDIA vGPU VIB version: NVIDIA-AIE_ESXi_7.0.2_Driver_470.82-1

For installation instructions, see Installing and configuring the NVIDIA VIB on ESXi.

The GPU Operator allows administrators of Kubernetes clusters to manage GPU nodes just like CPU nodes in the cluster. Instead of provisioning a special operating system (OS) image for GPU nodes, administrators can rely on a standard OS image for both CPU and GPU nodes and then rely on the GPU Operator to provision the required software components for GPUs.

The NVIDIA GPU Operator version 1.9.0-beta or later is required; v1.9.1 or greater is preferred.
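The version requirement above can be checked in a script. The following is a minimal sketch, not part of the GPU Operator tooling: `version_ge` is a hypothetical helper that strips a leading `v` and any pre-release suffix, then compares the numeric parts with `sort -V` (available in GNU coreutils). Because the suffix is dropped, `1.9.0-beta` is treated as `1.9.0` and `1.9.1-rc.3` as `1.9.1`.

```shell
#!/bin/sh
# Succeed when version $1 is greater than or equal to version $2.
# A leading "v" and any pre-release suffix (for example "-rc.3") are removed,
# so only the numeric MAJOR.MINOR.PATCH part is compared.
version_ge() {
  have="${1#v}"
  want="${2#v}"
  have="${have%%-*}"
  want="${want%%-*}"
  # sort -V orders version strings; if $want sorts first, then $have >= $want.
  lowest=$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n 1)
  [ "$lowest" = "$want" ]
}

# Example value only: the chart version named in this guide.
installed="v1.9.1-rc.3"

if version_ge "$installed" "1.9.1"; then
  echo "GPU Operator $installed meets the preferred version"
elif version_ge "$installed" "1.9.0"; then
  echo "GPU Operator $installed meets the minimum version"
else
  echo "GPU Operator $installed is too old"
fi
```

In practice the installed chart version would come from your own deployment, for example from the output of `helm list`, rather than a hard-coded string.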

To install the NVIDIA GPU Operator, first download its Helm chart with the following command:

helm fetch https://helm.ngc.nvidia.com/ea-cnt/ext_operator_vgpu/charts/gpu-operator-v1.9.1-rc.3.tgz --username='$oauthtoken' --password=<YOUR API KEY>

Complete installation instructions are available in Install NVIDIA GPU Operator.

If you use an NVIDIA AI Enterprise Software license, the required software should be accessible from your NGC Private Registry. You can access the software online at https://ngc.nvidia.com once your organization has obtained a valid license. Contact your solution provider for more details.