This guide outlines the security considerations to address when configuring ingress for control plane services in Domino Nexus. The exact ingress configuration can vary significantly with the customer’s requirements and the particular Domino configuration. This guide therefore discusses these security principles in the abstract, without describing solutions for a particular infrastructure vendor.

Domino Nexus control planes host several important services which must be accessible from data plane clusters:

Domino API - The API used by the Domino CLI, the Domino UI, and now data planes. Ingress is already configured on the main endpoint through which users access Domino, and this endpoint should be routable from data plane clusters. Because this ingress already exists, this guide does not discuss it further.

Vault - Data plane clusters use HashiCorp Vault to authenticate with the control plane, to encrypt secrets in transit, and to authenticate with RabbitMQ. Communication with Vault uses HTTPS on port 8200.

RabbitMQ - The primary communication channel between the Domino control plane and the data plane is RPC over RabbitMQ. Data planes also publish execution state back to the control plane over RabbitMQ. This traffic uses the AMQP protocol on port 5672 and the RabbitMQ Stream protocol on port 5552.

Docker Registry - If you are using Domino’s internal Docker Registry, the kubelet on each data plane node must be able to reach the registry over HTTPS on port 443 to pull environment images for user executions.

Vault and RabbitMQ in particular are sensitive services, and authentication and authorization for them are tightly controlled. They should not be exposed to the public Internet; this reduces the risk posed by compromised credentials and by vulnerabilities in the services themselves.

Load balancers are typically used for Kubernetes ingress to route traffic from a stable DNS host to the changing set of cluster nodes that host a service. Most infrastructure providers can configure Layer 4 (network) and/or Layer 7 (application) load balancers. Vault and the Docker Registry use HTTPS, so an application load balancer can serve them. RabbitMQ uses AMQP and the RabbitMQ Stream protocol rather than HTTP, so it requires a network load balancer.
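To make the service inventory and the load balancer choice concrete, the following Python sketch records each control plane endpoint with its protocol and port, and derives which load balancer layers can carry it. The `ControlPlaneEndpoint` class, the service names, and the `allowed_lb_layers` helper are hypothetical illustrations, not Domino identifiers; the ports are the ones listed above.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class ControlPlaneEndpoint:
    """A control plane service that data planes reach through a load balancer.

    Hypothetical type used only for illustration.
    """
    service: str
    protocol: str  # application protocol carried over TLS
    port: int


# Ports as described in this guide. The Domino API is left out because it is
# served by the existing main Domino endpoint.
ENDPOINTS = [
    ControlPlaneEndpoint("vault", "https", 8200),
    ControlPlaneEndpoint("rabbitmq-amqp", "amqp", 5672),
    ControlPlaneEndpoint("rabbitmq-stream", "rabbitmq-stream", 5552),
    # Only needed when Domino's internal Docker Registry is in use.
    ControlPlaneEndpoint("docker-registry", "https", 443),
]


def allowed_lb_layers(endpoint: ControlPlaneEndpoint) -> FrozenSet[str]:
    """Return the load balancer layers that can carry this endpoint's traffic.

    A Layer 4 (network) load balancer forwards TCP streams regardless of the
    application protocol. A Layer 7 (application) load balancer only
    understands HTTP(S), so it is an option for HTTPS endpoints alone.
    """
    if endpoint.protocol == "https":
        return frozenset({"L4", "L7"})
    return frozenset({"L4"})


if __name__ == "__main__":
    for ep in ENDPOINTS:
        layers = ", ".join(sorted(allowed_lb_layers(ep)))
        print(f"{ep.service:<16} port {ep.port:<5} load balancer: {layers}")
```

Keeping the inventory in one place makes it easy to review that every listener, firewall rule, and DNS record covers exactly these ports.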

TLS termination

Data planes must communicate with the control plane services over TLS. These services do not serve TLS connections themselves (unless Istio is in use, see below), so the load balancers must perform TLS termination. If your company issues non-public certificates, you can use them here; however, see Domino’s administrator documentation for guidance on creating data planes when non-public certificates are in use.
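To confirm from a data plane that a load balancer terminates TLS with a certificate the data plane trusts, you can attempt a verified TLS handshake. This Python sketch uses only the standard `ssl` and `socket` modules; the helper names are hypothetical, and it only checks the TLS layer, not Vault or RabbitMQ authentication.

```python
import socket
import ssl
from typing import Optional


def make_client_context(ca_bundle: Optional[str] = None) -> ssl.SSLContext:
    """Build a TLS client context that verifies the load balancer certificate.

    With ca_bundle=None the system trust store is used (public certificates).
    For non-public certificates, pass the path to your company's CA bundle.
    """
    # create_default_context enables certificate and hostname verification.
    context = ssl.create_default_context(cafile=ca_bundle)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def tls_handshake_ok(host: str, port: int, context: ssl.SSLContext,
                     timeout: float = 5.0) -> bool:
    """Return True if a TCP connection and a verified TLS handshake succeed."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            # wrap_socket performs the handshake and verifies the certificate
            # chain and that it matches `host`.
            with context.wrap_socket(sock, server_hostname=host):
                return True
    except OSError:
        # Covers refused or timed-out connections and, because ssl.SSLError
        # subclasses OSError, failed handshakes and certificate errors.
        return False
```

For example, running `tls_handshake_ok("vault.nexus.example.com", 8200, make_client_context())` from a pod in a data plane cluster (the host name is a placeholder) should return `True` when the load balancer presents a trusted certificate. With non-public certificates, pass your company's CA bundle path to `make_client_context`.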

Network access

Load balancers should not be accessible from the public Internet. This can be achieved in two ways:

- The recommended way is to configure internal load balancers, which sit in a private subnet, have no public IP address, and are reachable only over private networking such as VPN or peering. Even with internal load balancers, you should limit source IP ranges to the subnets you expect to host data plane services.

- If your company does not have private networking between Nexus clusters, you must restrict the network CIDRs that can access your load balancers. This involves identifying the IP addresses of the NAT gateways used by data plane services and limiting load balancer access to those source ranges, either through load balancer configuration or through network security groups.
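Whichever option you choose, the allowed source ranges end up as a CIDR allowlist. The actual enforcement happens in the load balancer or security group; this Python sketch only illustrates the matching logic, which can be useful when auditing rules. It uses the standard `ipaddress` module; the helper names, the example ranges, and the /16 review threshold are hypothetical.

```python
import ipaddress
from typing import Iterable, List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def parse_allowlist(cidrs: Iterable[str]) -> List[Network]:
    """Parse allowed source ranges, such as data plane subnets or NAT gateway /32s."""
    # strict=True rejects entries like "10.0.0.5/16" that have host bits set,
    # which usually indicate a typo in the allowlist.
    return [ipaddress.ip_network(cidr, strict=True) for cidr in cidrs]


def source_allowed(source_ip: str, allowlist: Iterable[Network]) -> bool:
    """Return True if source_ip falls inside any allowed range."""
    address = ipaddress.ip_address(source_ip)
    # Membership is always False across IP versions, so mixed lists are safe.
    return any(address in network for network in allowlist)


def overly_broad(allowlist: Iterable[Network], min_prefixlen: int = 16) -> List[Network]:
    """Flag ranges wider than min_prefixlen (for example 0.0.0.0/0) for review.

    The default threshold of /16 is an arbitrary IPv4 example, not a Domino rule.
    """
    return [network for network in allowlist if network.prefixlen < min_prefixlen]


if __name__ == "__main__":
    # Hypothetical values: a peered data plane subnet and one NAT gateway IP.
    allowlist = parse_allowlist(["10.20.0.0/16", "203.0.113.10/32"])
    print(source_allowed("10.20.5.7", allowlist))     # True
    print(source_allowed("198.51.100.1", allowlist))  # False
    print(overly_broad(allowlist))                    # []
```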

DNS

Data plane services must be able to reach load balancers using a stable DNS host.

Most cloud providers will publish public DNS records for both public and private load balancers. This allows you to use public DNS to resolve private load balancers.

If you are hosting your own load balancers (such as on-premises), you must configure a DNS record (either public or private) to resolve to the load balancer’s IP. If the IP changes over time, you must keep the DNS record updated. If you are using private DNS, ensure that pods in data plane clusters are able to resolve such hosts.
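A quick way to check name resolution is to resolve the load balancer host from a pod in a data plane cluster, so that the pod's own resolver is used. This Python sketch resolves a host with `socket.getaddrinfo`, checks whether the results are private addresses (as expected for internal load balancers), and compares them to the load balancer IPs you expect, which helps catch stale records. The helper names are hypothetical.

```python
import ipaddress
import socket
from typing import Iterable, List


def resolve(host: str, port: int) -> List[str]:
    """Return the sorted, de-duplicated IP addresses that host resolves to."""
    infos = socket.getaddrinfo(host, port, proto=socket.IPPROTO_TCP)
    # Each result is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def all_private(addresses: Iterable[str]) -> bool:
    """True if there is at least one address and every address is non-public."""
    parsed = [ipaddress.ip_address(a) for a in addresses]
    return bool(parsed) and all(a.is_private for a in parsed)


def matches_expected(addresses: Iterable[str], expected: Iterable[str]) -> bool:
    """True if DNS returns exactly the load balancer IPs you expect."""
    return set(addresses) == set(expected)
```

For example, `all_private(resolve("rabbitmq.nexus.example.com", 5672))` (a placeholder host) should be `True` for an internal load balancer. If a lookup raises `socket.gaierror` inside the pod but succeeds elsewhere, the private DNS zone is likely not visible to the data plane cluster.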

In traditional Domino deployments, access to RabbitMQ and Vault from services inside the cluster is tightly controlled. Allowing ingress to these services from outside the cluster while still limiting access from services inside the cluster is nontrivial, because many types of access control operate at the network level and are not aware of application-level semantics within the cluster.

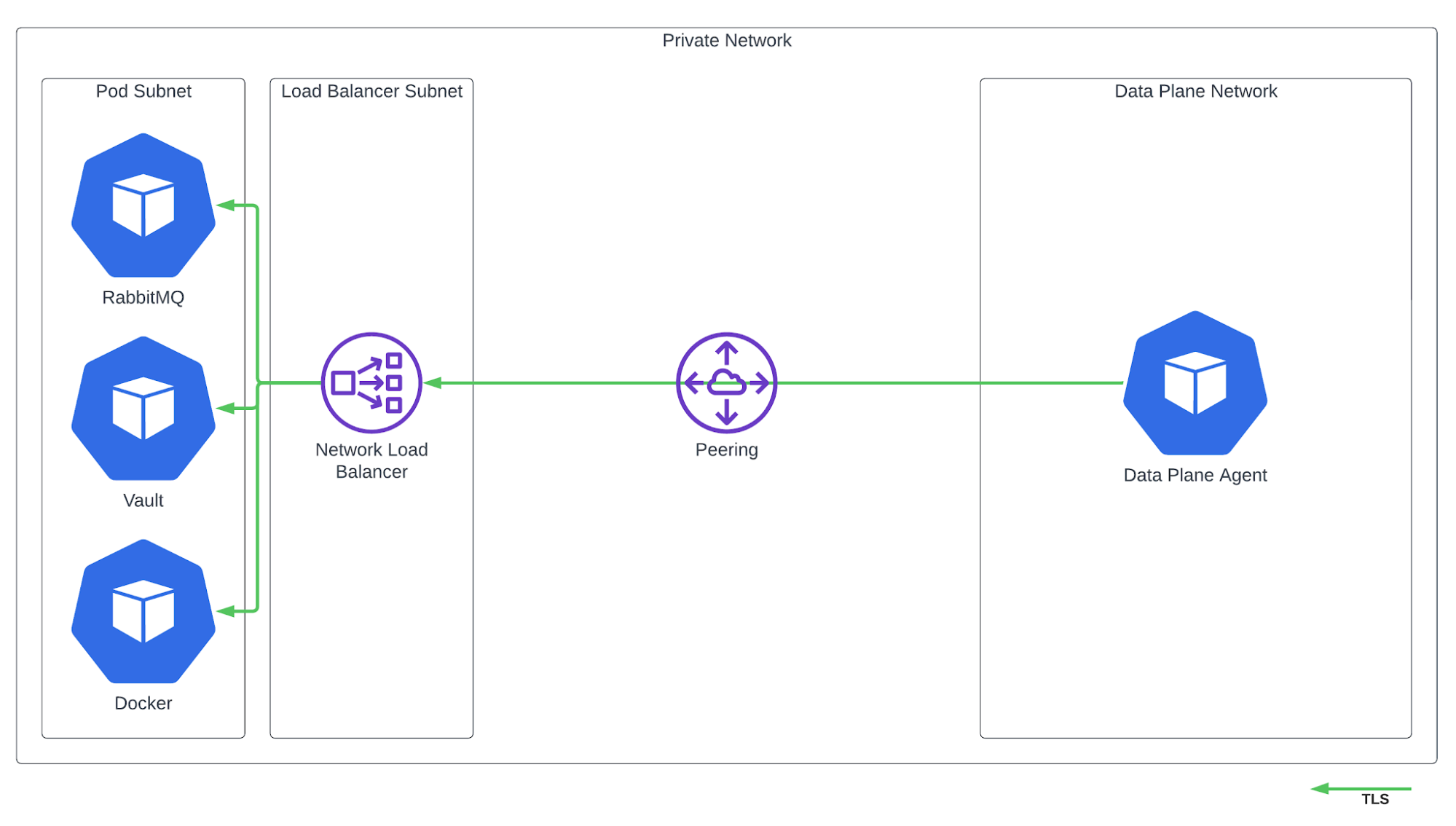

In the architecture pictured above, we recommend placing the load balancers in a subnet separate from the cluster nodes and pods. This lets you define network policies that allow traffic only from this subnet, and not from other pods in the cluster. Some load balancer types preserve the client IP address, so network policies evaluate the original client address rather than the load balancer's. In that case, you can allow only the address space known to be used by data plane sources.
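As an illustration, the following Python sketch builds a Kubernetes `NetworkPolicy` (API group `networking.k8s.io/v1`) that admits TCP traffic on the RabbitMQ ports only from a given set of CIDRs, either the load balancer subnet or, when client IPs are preserved, the data plane ranges. The policy name, namespace, pod labels, subnet, and the assumption that the pods listen on 5672 and 5552 are hypothetical; check them against your deployment. Network policies are only enforced when the cluster's network plugin supports them.

```python
import json
from typing import Dict, List


def lb_subnet_ingress_policy(
    name: str,
    namespace: str,
    pod_labels: Dict[str, str],
    source_cidrs: List[str],
    ports: List[int],
) -> dict:
    """Build a NetworkPolicy admitting TCP on `ports` from `source_cidrs` only.

    NetworkPolicies are additive: this policy adds an allowance for the given
    CIDRs and does not open these ports to other pods. Once any Ingress policy
    selects a pod, traffic that no policy allows is dropped, so legitimate
    in-cluster clients need their own allowing policy.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": pod_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"ipBlock": {"cidr": cidr}} for cidr in source_cidrs],
                    # Ports are the pod's container ports, not the load balancer's.
                    "ports": [{"protocol": "TCP", "port": port} for port in ports],
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical namespace, labels, and load balancer subnet.
    policy = lb_subnet_ingress_policy(
        name="rabbitmq-allow-lb-subnet",
        namespace="domino-platform",
        pod_labels={"app.kubernetes.io/name": "rabbitmq"},
        source_cidrs=["10.0.64.0/24"],
        ports=[5672, 5552],
    )
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(policy, indent=2))
```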

In addition to network policies, configure security groups between the load balancers and the cluster nodes in accordance with best practice.

In standard Kubernetes configurations, traffic between load balancers and backend services is often unencrypted. If you are not using Istio in your cluster, traffic between local cluster pods and these services is not encrypted either. To encrypt traffic between load balancers and pods, you must have Istio enabled in your deployment.

If your company requires encryption in transit and you are running Istio in your Domino deployment, you must allow the load balancer to connect to these services without mTLS while still using TLS. The load balancer sits outside the service mesh and has no mesh-issued workload certificate, so it cannot complete an mTLS handshake with a sidecar.

One way to accomplish this is with an Istio Gateway. In this architecture, load balancers route traffic to Istio’s Gateway service instead, which originates mTLS and allows traffic to enter the service mesh. If you take this route, ensure that the load balancer configured by Istio conforms to the guidance above and uses TLS to communicate with the Istio Gateway service backend.
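A minimal sketch of the Gateway approach, assuming Istio's `networking.istio.io/v1beta1` API: a `Gateway` that terminates TLS from the load balancer (mode `SIMPLE`, certificate read from a Kubernetes secret named by `credentialName`, which must live in the ingress gateway's namespace), plus a `VirtualService` that forwards decrypted AMQP as TCP to the in-mesh service. All names, hosts, and the `istio: ingressgateway` selector are assumptions to adapt; `hosts` are matched against the SNI the load balancer sends.

```python
from typing import List, Tuple


def tls_gateway(name: str, namespace: str, credential_name: str, host: str,
                ports: List[Tuple[int, str, str]]) -> dict:
    """Gateway terminating TLS on each (number, port_name, protocol) entry.

    Use protocol "HTTPS" for HTTP services such as Vault and "TLS" for
    TCP protocols such as AMQP.
    """
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "Gateway",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Default label of Istio's ingress gateway; adjust if yours differs.
            "selector": {"istio": "ingressgateway"},
            "servers": [
                {
                    "port": {"number": number, "name": port_name, "protocol": protocol},
                    "hosts": [host],
                    "tls": {"mode": "SIMPLE", "credentialName": credential_name},
                }
                for number, port_name, protocol in ports
            ],
        },
    }


def tcp_route(name: str, namespace: str, gateway_ref: str, host: str,
              gateway_port: int, service_host: str, service_port: int) -> dict:
    """VirtualService sending decrypted TCP from the gateway to a mesh service.

    With Istio's default automatic mTLS, the gateway uses mTLS toward
    backends that have sidecars.
    """
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "hosts": [host],
            "gateways": [gateway_ref],  # "namespace/name" or "name"
            "tcp": [
                {
                    "match": [{"port": gateway_port}],
                    "route": [{"destination": {"host": service_host,
                                               "port": {"number": service_port}}}],
                }
            ],
        },
    }
```

A similar `VirtualService` with `http` routes would cover Vault's HTTPS port; it is omitted here to keep the sketch short.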

Another option is to configure sidecar TLS termination. With this method, mutual TLS is disabled for the services and ports you wish to expose, and the sidecar is configured to serve custom TLS certificates to the load balancer, providing encryption in transit between the load balancer and the service. Domino relies on Istio only for encryption in transit, not for service identity, so mutual TLS is not strictly required on these ports.
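The following Python sketch shows the two Istio resources this method typically involves, for Vault's HTTPS port only: a `PeerAuthentication` that disables mTLS on a single port of the selected workload, and a `Sidecar` whose ingress listener terminates TLS with custom certificates and forwards plain traffic to the application. Resource names, labels, ports, and certificate paths are hypothetical, and the certificate files must also be mounted into the `istio-proxy` container, which this sketch does not show.

```python
from typing import Dict


def disable_mtls_on_port(name: str, namespace: str, labels: Dict[str, str],
                         port: int) -> dict:
    """PeerAuthentication turning off mTLS on one port of the selected workload.

    The top-level `mtls` mode is left unset, so other ports inherit the
    namespace or mesh-wide setting.
    """
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "selector": {"matchLabels": labels},
            # JSON map keys must be strings; Istio reads them as port numbers.
            "portLevelMtls": {str(port): {"mode": "DISABLE"}},
        },
    }


def sidecar_tls_termination(name: str, namespace: str, labels: Dict[str, str],
                            listen_port: int, app_port: int,
                            cert_path: str, key_path: str) -> dict:
    """Sidecar resource whose ingress listener terminates TLS on listen_port."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "Sidecar",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "workloadSelector": {"labels": labels},
            "ingress": [
                {
                    "port": {"number": listen_port, "protocol": "HTTPS",
                             "name": "external-https"},
                    # After TLS termination, traffic goes to the app on localhost.
                    "defaultEndpoint": f"127.0.0.1:{app_port}",
                    "tls": {"mode": "SIMPLE", "serverCertificate": cert_path,
                            "privateKey": key_path},
                }
            ],
        },
    }
```

Pass the port the load balancer targets as both the `port` of `disable_mtls_on_port` and the `listen_port` of `sidecar_tls_termination`, so that the port without mTLS is exactly the one serving the custom certificate.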

In either case, you can choose to have the Istio Gateway or sidecar serve the external certificates for the control plane, or to leverage TLS termination in the load balancer.