Domino lets you register large language models (LLMs) and deploy them as hosted endpoints with optimized inference. These endpoints provide OpenAI-compatible APIs that your applications and agentic systems can call.

You can register models from Hugging Face or from your experiment runs, then deploy them as endpoints. You’ll need Project Collaborator permissions to register models and create endpoints.

Before creating an endpoint, consider these key factors to ensure optimal performance and cost-efficiency:

Understand your model’s requirements

- Check your model’s documentation for minimum memory and compute requirements.
- Choose appropriate resource sizes based on those requirements and your expected usage patterns.
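You can compare a model's documented requirements against a resource size with a common rule of thumb: the weights alone need about (number of parameters) × (bytes per parameter) of GPU memory. The helper below is a hypothetical sketch and is not part of Domino or vLLM. It ignores the KV cache, activations, and runtime overhead, so treat its result as a lower bound.

```python
# Hypothetical sizing helper (not a Domino or vLLM API): estimates the GPU
# memory needed just to hold a model's weights. It ignores KV cache,
# activations, and runtime overhead, so the result is a lower bound.

BYTES_PER_PARAM = {
    "fp32": 4,
    "fp16": 2,
    "bf16": 2,
    "int8": 1,
    "int4": 0.5,  # approximate; quantization scales add a little on top
}


def weight_memory_gb(num_params: float, dtype: str = "bf16") -> float:
    """Return the approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    if dtype not in BYTES_PER_PARAM:
        raise ValueError(f"unknown dtype: {dtype}")
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Example: a 7-billion-parameter model stored in bf16.
    print(f"{weight_memory_gb(7e9, 'bf16'):.1f} GB")  # 14.0 GB
```

If the estimate is already close to a GPU's total memory, that resource size leaves little room for serving requests.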

Size resources appropriately for expected usage

- Account for concurrent users. If you expect high throughput or many simultaneous requests, the smallest GPU sizes may cause slowdowns. Scale up the hardware tier or consider deploying multiple endpoints.
- Balance performance against cost. Start with a tier that meets your requirements, and monitor performance before scaling up.
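Concurrency is tied to memory. Inference runtimes such as vLLM keep a key/value (KV) cache in GPU memory for every in-flight request. The memory left over after loading the weights therefore caps how many requests can run at once, and additional requests generally wait. The sketch below is a hypothetical back-of-the-envelope calculation, not how vLLM actually allocates memory. The model shape and the 8 GiB budget are illustrative assumptions.

```python
# Hypothetical back-of-the-envelope helper: how many sequences of a given length
# fit in the GPU memory left over for the KV cache after loading the weights.
# Real runtimes (vLLM included) manage memory differently; this is only a sketch.


def kv_cache_bytes_per_token(num_layers: int, num_kv_heads: int,
                             head_dim: int, bytes_per_elem: int = 2) -> int:
    """Bytes of key + value cache stored per token across all layers."""
    # The factor 2 accounts for storing both a key and a value vector.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem


def max_concurrent_sequences(kv_budget_bytes: int, tokens_per_seq: int,
                             per_token_bytes: int) -> int:
    """Sequences that fit if each one uses tokens_per_seq tokens of context."""
    return kv_budget_bytes // (tokens_per_seq * per_token_bytes)


if __name__ == "__main__":
    # Illustrative shape, roughly a 7B model without grouped-query attention:
    # 32 layers, 32 KV heads, head_dim 128, 16-bit cache (2 bytes per element).
    per_token = kv_cache_bytes_per_token(32, 32, 128)
    print(per_token)  # 524288 bytes (0.5 MiB) per token

    # Assume 8 GiB of GPU memory remains for the KV cache.
    budget = 8 * 1024**3
    print(max_concurrent_sequences(budget, 4096, per_token))  # 4
```

With these illustrative numbers, halving the context length per request roughly doubles how many requests fit. This is why both concurrency and context length matter when you pick a hardware tier.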

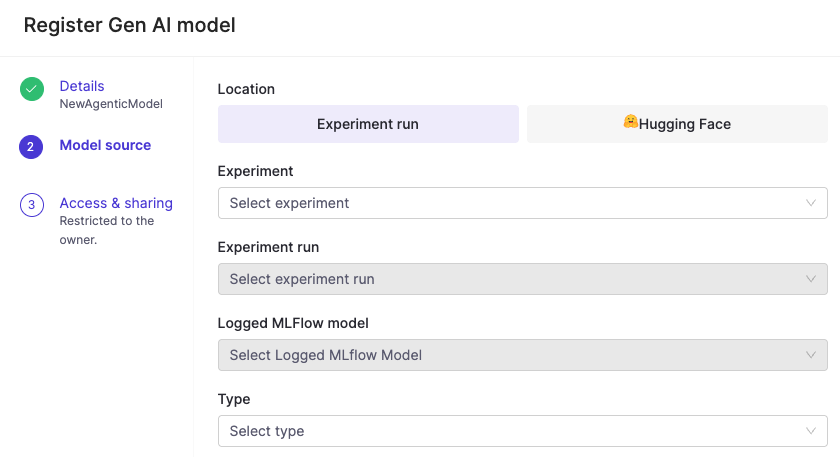

Register a model to make it available for deployment as an endpoint. Go to Models > Register to get started.

- Choose your model source:
  - Hugging Face models that you have access to, or
  - Experiment runs that include a logged MLflow model.
- Complete the required fields.

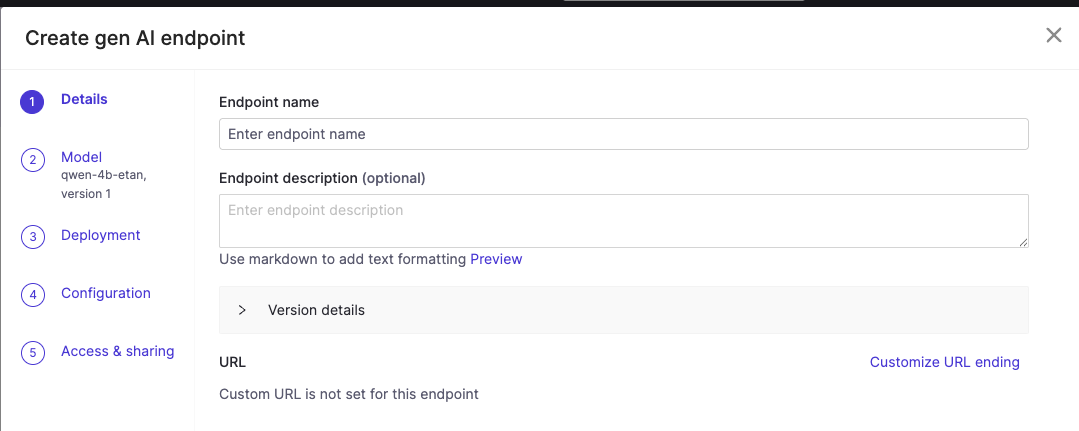

After registering a model, you can deploy it as an endpoint. From your registered model’s Endpoints tab, click Create endpoint.

- Complete the endpoint configuration details, and choose a model source environment and resource size.
- Configure access controls by adding the users or organizations that should have access to this endpoint.
- Click Create endpoint.

The endpoint deploys with the vLLM runtime, which provides optimized inference performance and OpenAI-compatible APIs.
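Because the endpoint exposes an OpenAI-compatible API, any HTTP client that speaks the OpenAI chat completions format should be able to call it. The sketch below uses only the Python standard library. The endpoint URL, the model name, the token, and the Bearer-token authorization scheme are all placeholders and assumptions, not values documented here. Copy the real ones from your endpoint in Domino.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with the real endpoint URL, model name,
# and credential from your Domino endpoint. The Bearer-token scheme is an
# assumption; check how your deployment expects callers to authenticate.
ENDPOINT_URL = "https://your-domino-host/path/to/endpoint/v1/chat/completions"
MODEL_NAME = "my-registered-model"
API_TOKEN = "YOUR_TOKEN"


def build_chat_request(prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build an OpenAI-style chat completions request without sending it."""
    body = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        ENDPOINT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_TOKEN}",
        },
        method="POST",
    )


def chat(prompt: str, timeout: float = 60.0) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

With real values filled in, `chat("Summarize what an inference endpoint is.")` sends one request and returns the reply text. Keeping `build_chat_request` separate from `chat` lets you inspect the exact payload before anything goes over the network.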

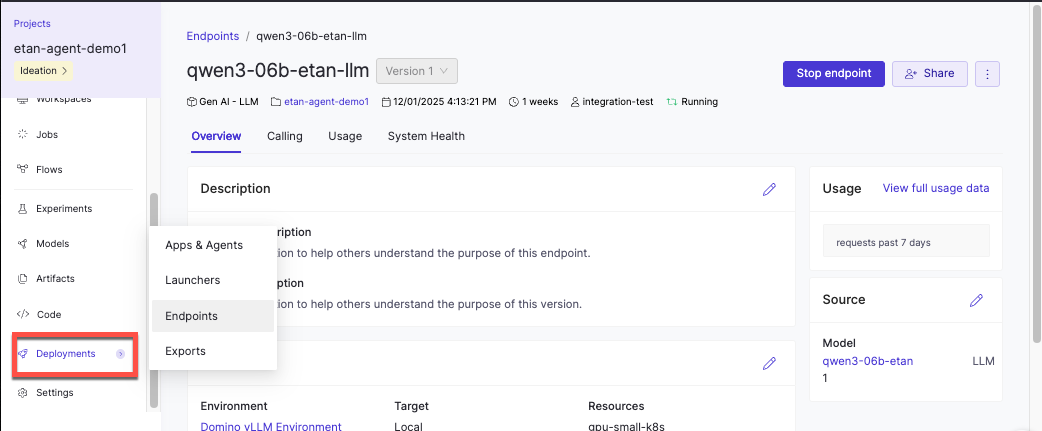

After deploying your endpoint, you can monitor its performance and usage from the endpoint’s tabs:

- Overview: Configuration details and deployment status
- Performance: Token usage and latency metrics over time
- Usage: Endpoint invocation frequency

For more detail on using monitoring capabilities during model development and after deployment, so that your models perform efficiently and reliably in production, see Monitor model endpoint performance.
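The Performance and Usage tabs are the primary view of endpoint behavior. You may also want client-side numbers, for example to compare what your application experiences with what the Performance tab reports. The hypothetical sketch below records per-call latency and the `usage` block that OpenAI-compatible responses typically include (`prompt_tokens`, `completion_tokens`). It then summarizes them with a median and a nearest-rank 95th percentile.

```python
import math
import statistics
from dataclasses import dataclass


@dataclass
class CallRecord:
    """One endpoint call as seen from the client (hypothetical structure)."""
    latency_s: float
    prompt_tokens: int
    completion_tokens: int


def record_from_response(latency_s: float, response: dict) -> CallRecord:
    """Pull token counts from an OpenAI-style response's "usage" field, if present."""
    usage = response.get("usage") or {}
    return CallRecord(
        latency_s=latency_s,
        prompt_tokens=usage.get("prompt_tokens", 0),
        completion_tokens=usage.get("completion_tokens", 0),
    )


def percentile(sorted_values: list[float], pct: float) -> float:
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def summarize(records: list[CallRecord]) -> dict:
    """Summarize latency and token usage across a non-empty batch of calls."""
    latencies = sorted(r.latency_s for r in records)
    return {
        "calls": len(records),
        "p50_latency_s": statistics.median(latencies),
        "p95_latency_s": percentile(latencies, 95),
        "prompt_tokens": sum(r.prompt_tokens for r in records),
        "completion_tokens": sum(r.completion_tokens for r in records),
    }
```

In practice, you would read `time.perf_counter()` before and after each call, then pass the elapsed time and the parsed JSON response to `record_from_response`.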

- Hugging Face model not appearing in the list: Verify you have access to the model. Some models require accepting license agreements on Hugging Face before they’re available in Domino.
- Endpoint stuck in "Starting" status: Check that the model size is compatible with your selected resource configuration. Large models may require hardware tiers with more GPU memory. Review endpoint logs for specific error messages.
- Slow response times or timeouts: Monitor the Performance tab to identify latency patterns. If concurrent requests exceed your resource capacity, consider scaling to a larger hardware tier or deploying additional endpoints.
- Users can’t access the endpoint: Verify that users or their organizations were added to the endpoint’s access controls and that users have the required project permissions.
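For transient problems, such as an endpoint that is still starting or an occasional timeout, a client that retries with backoff is often more robust than one that fails on the first error. Retries don't fix undersized hardware, so if timeouts persist, scale the hardware tier or add endpoints as described in the list above. The helper below is a hypothetical sketch. Which exceptions count as transient depends on your HTTP client, which is why `retry_on` is a parameter. With `urllib`, for example, you might also need `urllib.error.URLError`, but note that it covers non-transient HTTP errors too.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    fn: Callable[[], T],
    retry_on: tuple[type[BaseException], ...] = (TimeoutError, ConnectionError),
    max_attempts: int = 5,
    base_delay_s: float = 1.0,
    max_delay_s: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying the given exceptions with capped exponential backoff and jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # With the defaults, delays grow 1s, 2s, 4s, ... capped at max_delay_s.
            delay = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
            # Random jitter keeps many clients from retrying in lockstep.
            sleep(delay * random.uniform(0.5, 1.0))
    raise AssertionError("unreachable")
```

Usage would look like `call_with_retries(lambda: send_request(prompt))`, where `send_request` is a hypothetical stand-in for your own client call. Injecting `sleep` makes the backoff easy to test without waiting.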

- Set up agentic system tracing: Instrument your agent code to capture traces during development and attach evaluations to assess quality.
- Track and monitor experiments: View, compare, and export experiment results for both ML and agentic systems.
- Deploy and monitor production agents: Launch your best configuration to production and continue monitoring performance with real user interactions.