See also the fleetcommand-agent Release Notes.

Changes

-

Improved logging in the Image Cache Agent.

-

Fixed an issue that prevented file uploads through the Domino CLI.

-

Fixed an issue where the Scheduled Jobs page failed to load if a job could not find a hardware tier.

-

Fixed an issue that caused Workspace & App links in the Compute & Spend report to return errors, preventing access to the associated job.

-

Fixed an issue that caused the /health endpoint to return a 404 incorrectly.

-

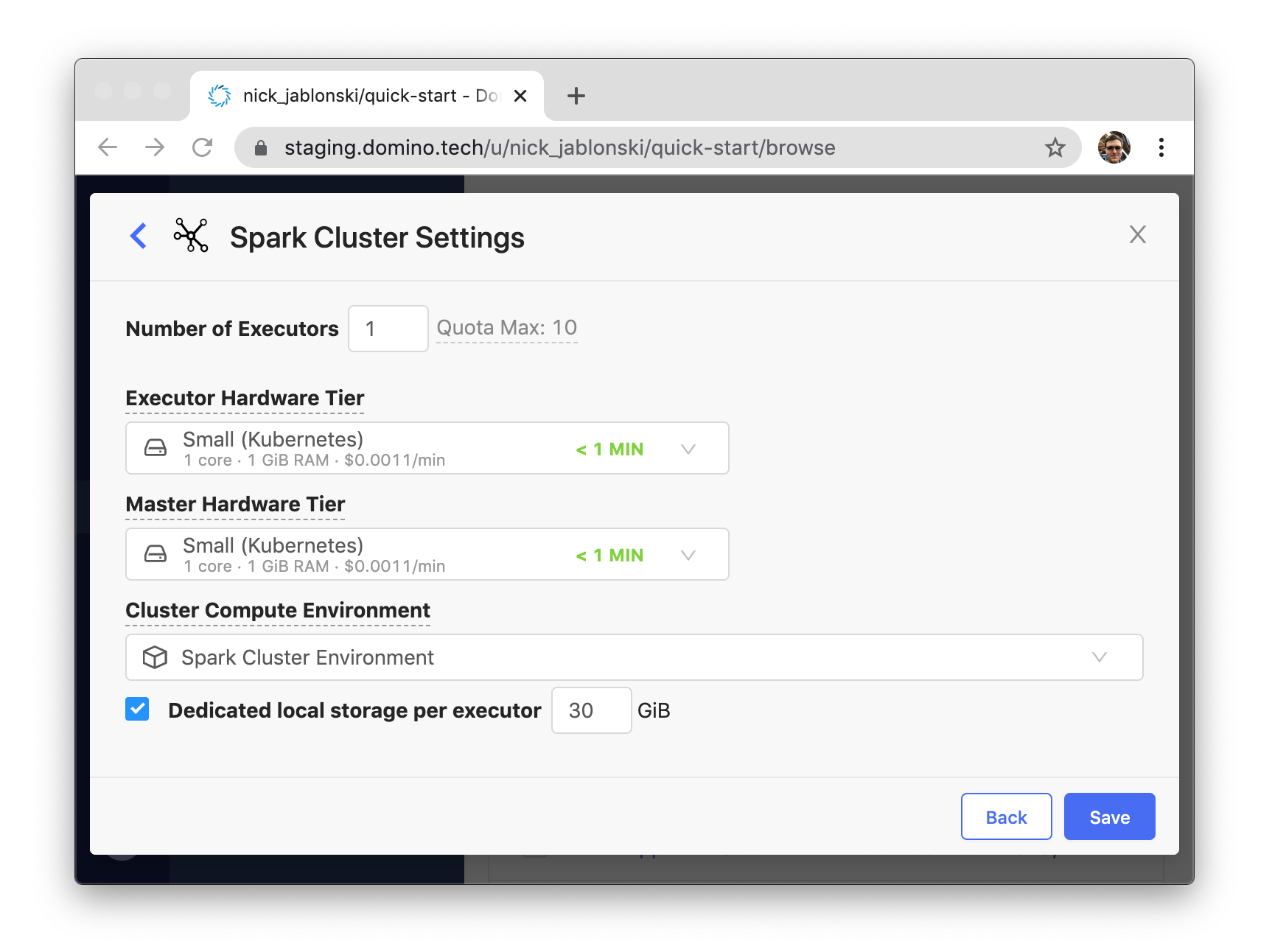

Fixed an issue where Spark worker storage would not be provisioned unless the value for the storage size was modified. The default storage of 30GiB is now created without requiring custom input.

-

On the Account Settings page, the Environment list is limited to 100 options so the user cannot select from all the environments to which they have access.

-

When a user signs in as a member of an organization and tries to edit the scheduled job, they receive a message that they are not authorized to edit the run.

-

The asset count shows an incorrect value in the Project Portfolio view of the Domino Control Center.

-

Installing the python-domino library in the egg format fails on CentOS.

-

An external dependency was removed on 7/24/21, causing build failures for DAD-related environments.

-

The textbox will not accept keyboard input when you try to filter the list of datasets when mounting a shared dataset.

-

There is a known issue that affects error reporting in the R harness.

-

Loading Model API and related UI pages is slow for a particular customer.

-

Spark clusters are stuck in a Pending state if they use a GPU-based hardware tier.

-

When committing to a protected branch, the push succeeds without errors or pending changes.

-

Datasets are not mounting properly when executing a Domino run using the Save & Run option on the "Files" page of a Domino project.

-

Users cannot view datasets in their project if the dataset originated from an imported project.

-

Workspaces that are launched from the "Files" page of a Domino project do not have a scratch space created unless a dataset is added.

-

Python-based extensions are not functioning properly in workspaces that use VS Code.

-

When editing an environment, the base image reverts back to the default global environment.

-

Workspaces will not launch if a user was removed from an Okta group with credential propagation enabled.

-

When publishing an app using the Domino Python wrapper, the Domino REST API throws a 500 error.

-

Launcher users are able to access project files and can import an un-exported file.

-

If you leave the Cores Limit field empty, the hardware tier definition will not save, even though an empty value is meant to indicate that you do not want to specify an upper limit.

-

GPU-based Spark clusters are stuck in a Pending state.

-

Some workspaces end up in a state where they cannot be stopped.

Changes

-

Improved performance of the files browser in the UI.

-

Fixed an issue where user-uploaded custom certificates were not being used when connecting to Git servers for cloning and working with externally hosted repositories.

-

Domino 4.2.1 is supported by fleetcommand-agent v23 or greater. See the fleetcommand-agent release notes for breaking changes and new options.

-

When you click Link Goals in a Workspace, the buttons are overlapped by the Goals list and you cannot proceed.

-

When linking goals, if you try to search, you get a "Not Found" message.

-

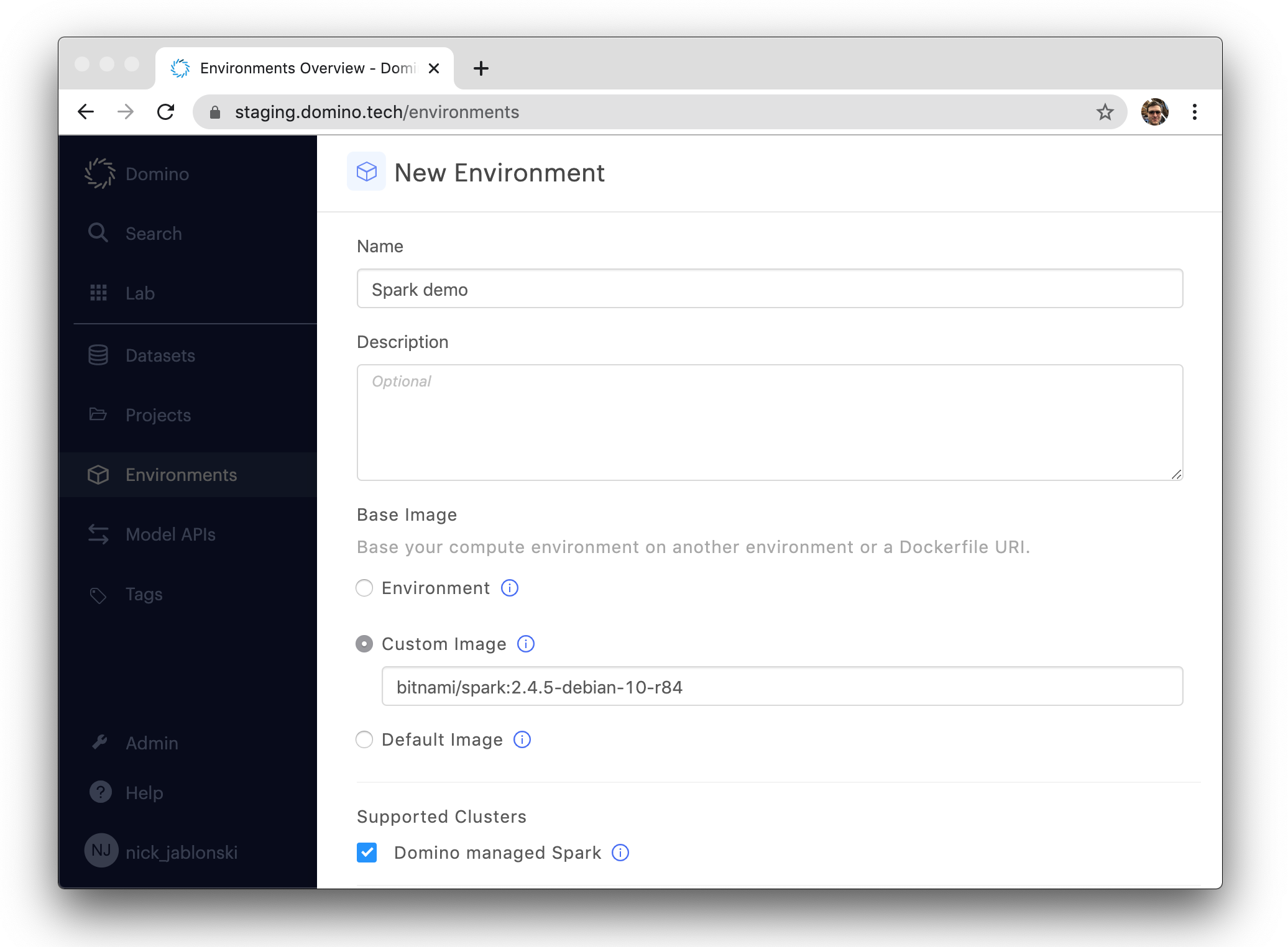

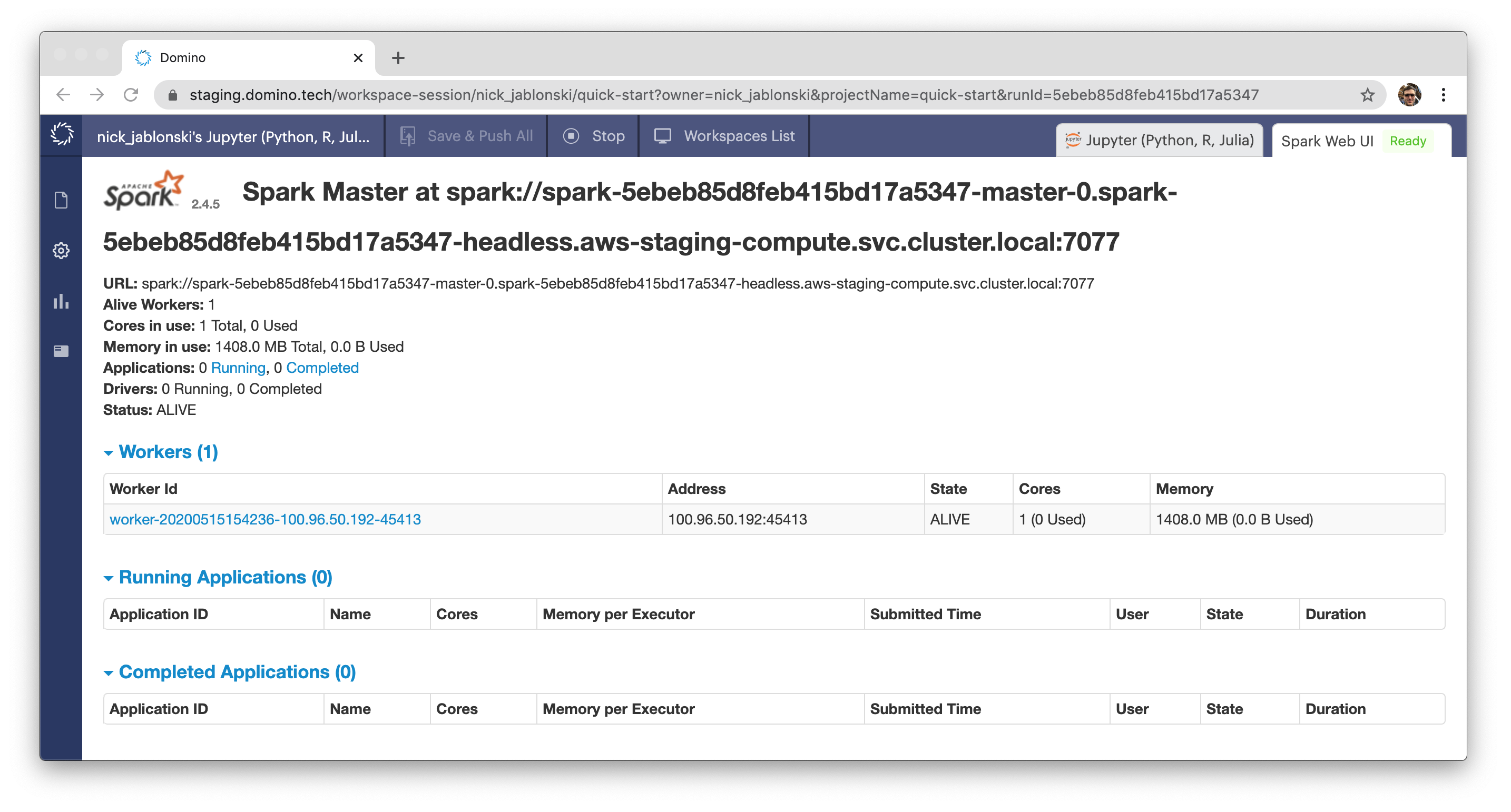

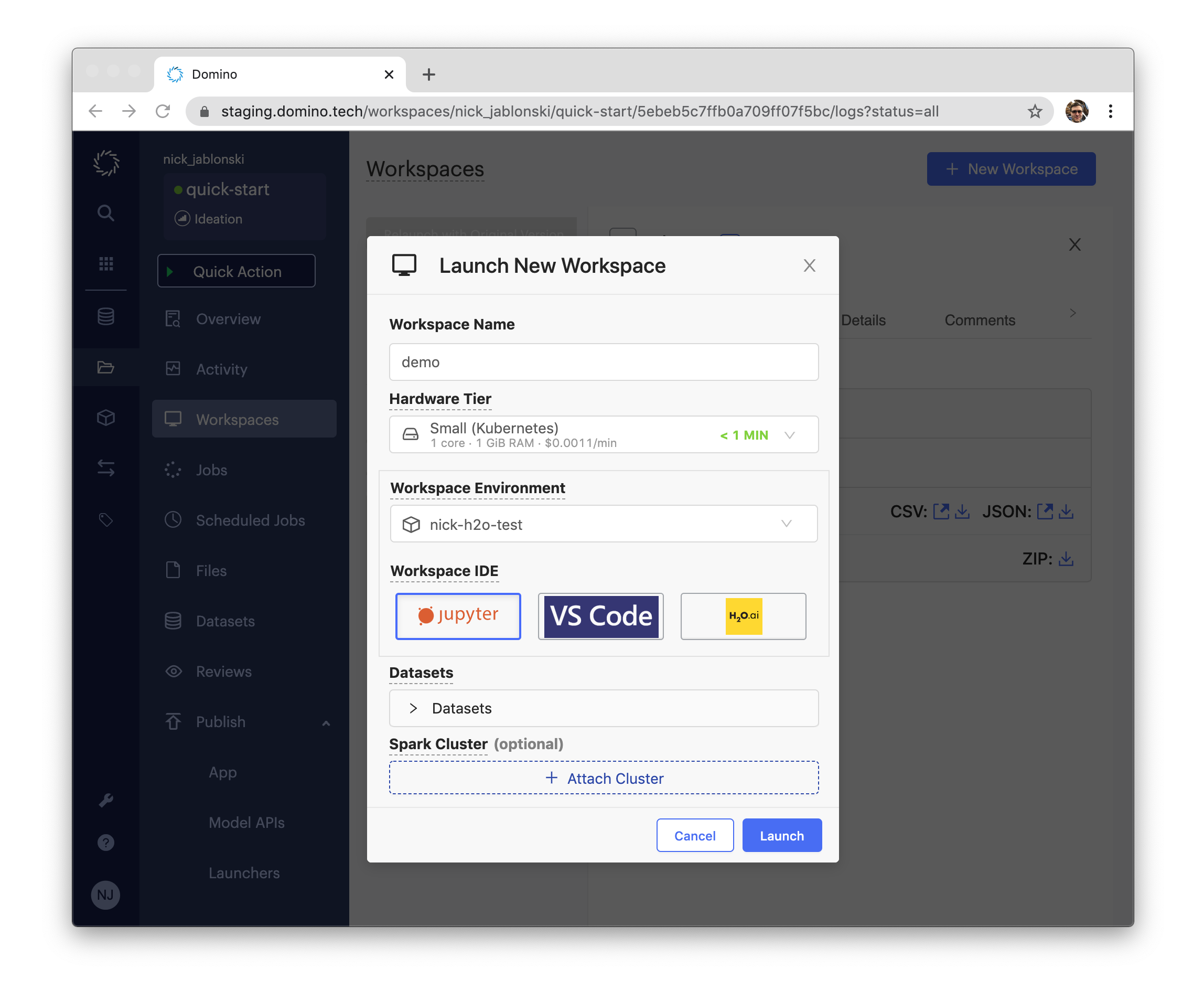

Domino 4.2 introduces the ability to launch and work with on-demand Spark clusters backed by the Domino compute grid. When setting up an environment, you can now use a base image with Spark worker and client components installed, such as bitnami/spark:2.4.5-debian-10-r84, and then mark the environment as ready for use with Domino managed Spark.

Read more about environment setup for on-demand Spark.

Once your environment is configured, you can attach on-demand Spark clusters to jobs and workspaces that use that environment. The cluster and clients will be automatically configured, and you can use PySpark or spark-submit in your job or workspace code to interact with the cluster.
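As a rough illustration of the PySpark path described above, the following sketch runs a small computation on an attached cluster and provides a local reference value to compare against. The function names and the application name are hypothetical, and the sketch assumes that the automatic configuration supplies the cluster connection settings, so no master URL is set in code. It is not taken from the Domino documentation.

```python
"""Sketch: run a small computation on an attached on-demand Spark cluster."""


def local_sum_of_squares(n: int) -> int:
    """Reference result computed without Spark, used to check the cluster's answer."""
    return sum(i * i for i in range(1, n + 1))


def cluster_sum_of_squares(n: int) -> int:
    """Compute the same sum on the Spark cluster attached to this job or workspace."""
    # Imported here so the module can be loaded where PySpark is not installed.
    from pyspark.sql import SparkSession

    # Assumption: connection settings come from the automatic configuration,
    # so no master URL is set here. The app name is a hypothetical label.
    spark = SparkSession.builder.appName("sum-of-squares-check").getOrCreate()
    try:
        numbers = spark.sparkContext.parallelize(range(1, n + 1))
        return numbers.map(lambda x: x * x).sum()
    finally:
        # Release the session when the computation is done.
        spark.stop()
```

In a job or workspace with a cluster attached, comparing `cluster_sum_of_squares(100)` with `local_sum_of_squares(100)` is a quick way to confirm that work is actually reaching the cluster.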

-

In Domino 4.2, if a workspace stops or shuts down unexpectedly without syncing files, and the attached volume enters a salvaged state, the user will automatically be prompted to start a recovery workspace that will reconnect to the salvaged volumes for syncing.

-

Domino in AWS now supports custom KMS key encryption of S3 stores.

-

The user attribute mappers, plus pre-configured client mappers for AWS credential propagation and organization or role mappings.

-

New API endpoints have been introduced for working with Model APIs. You can now use the API to export a Model API image in a SageMaker-compatible format directly to your own container registry, and there are also new endpoints for starting, stopping, and getting the deployment status of Model APIs.

-

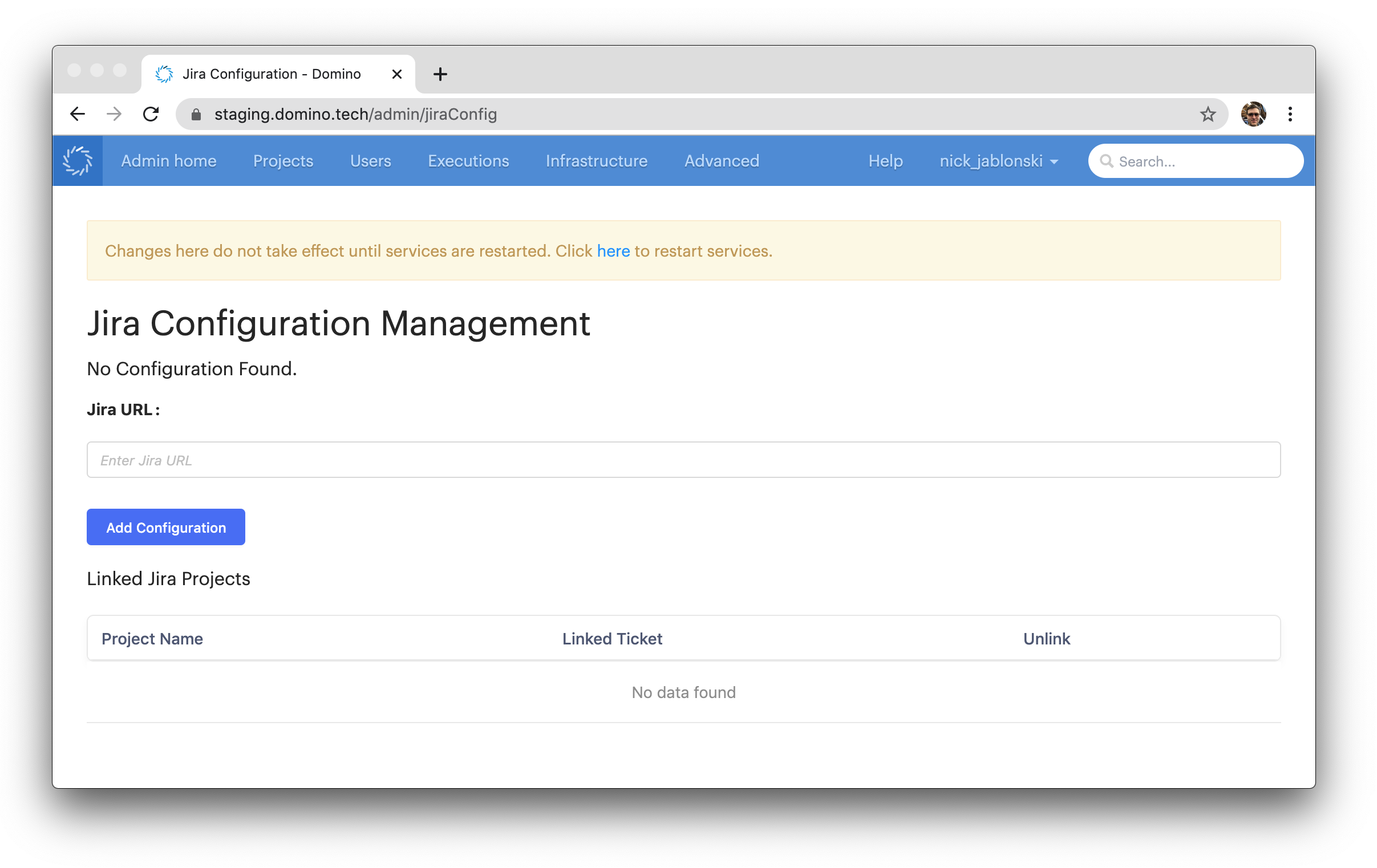

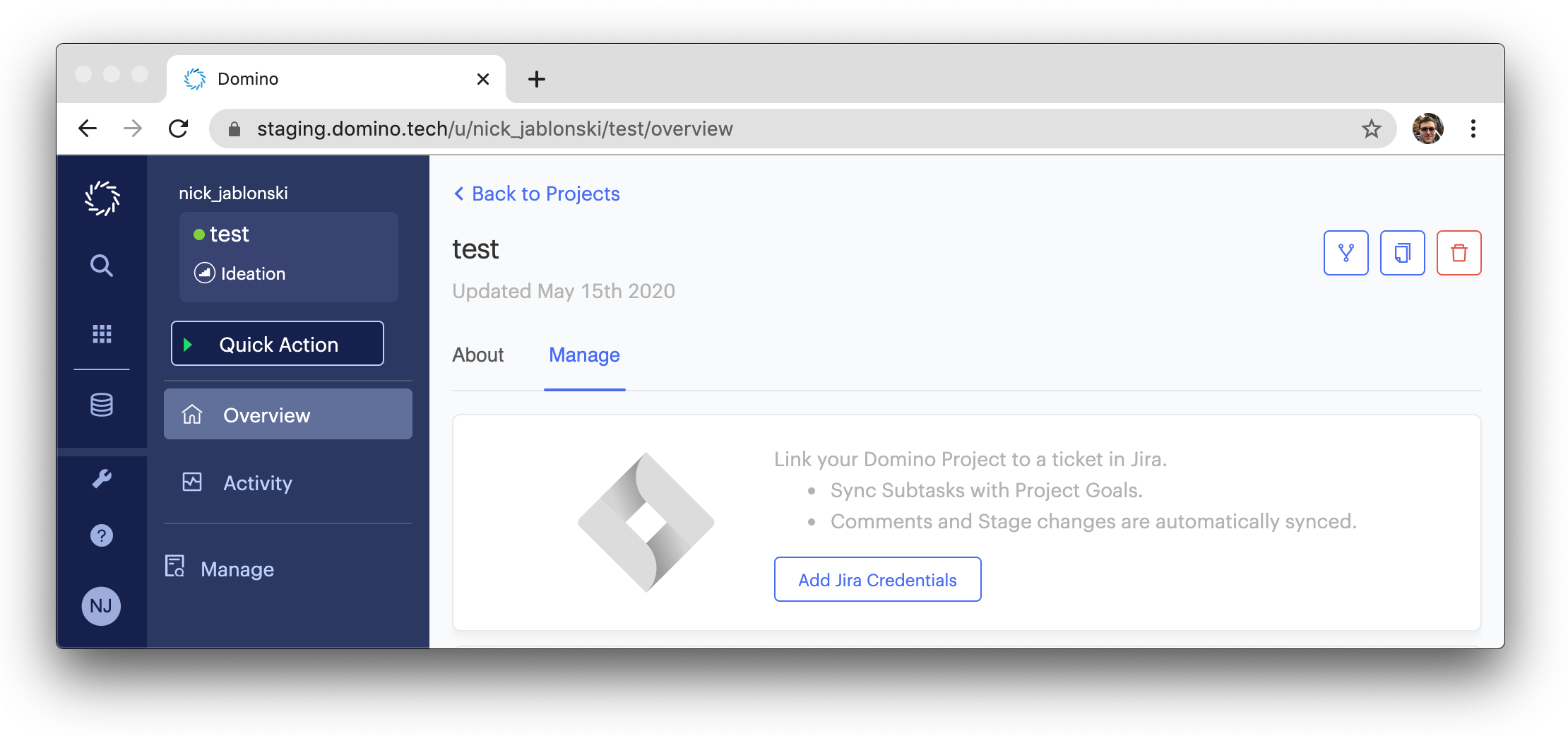

Domino projects can now integrate with Atlassian Jira to link Domino work to Jira issues and projects.

To enable this feature, a Domino administrator must configure a Jira integration. From the admin portal, click Advanced > Jira Configuration, then supply your Jira URL to start the integration.

Once a Jira integration has been added, users can go to the Manage tab of a project overview to authenticate to the Jira service and connect the Domino project to a Jira issue. Users can then link Domino project goals to Jira subtasks, and comments and project status changes will automatically be recorded in Jira.

Changes

-

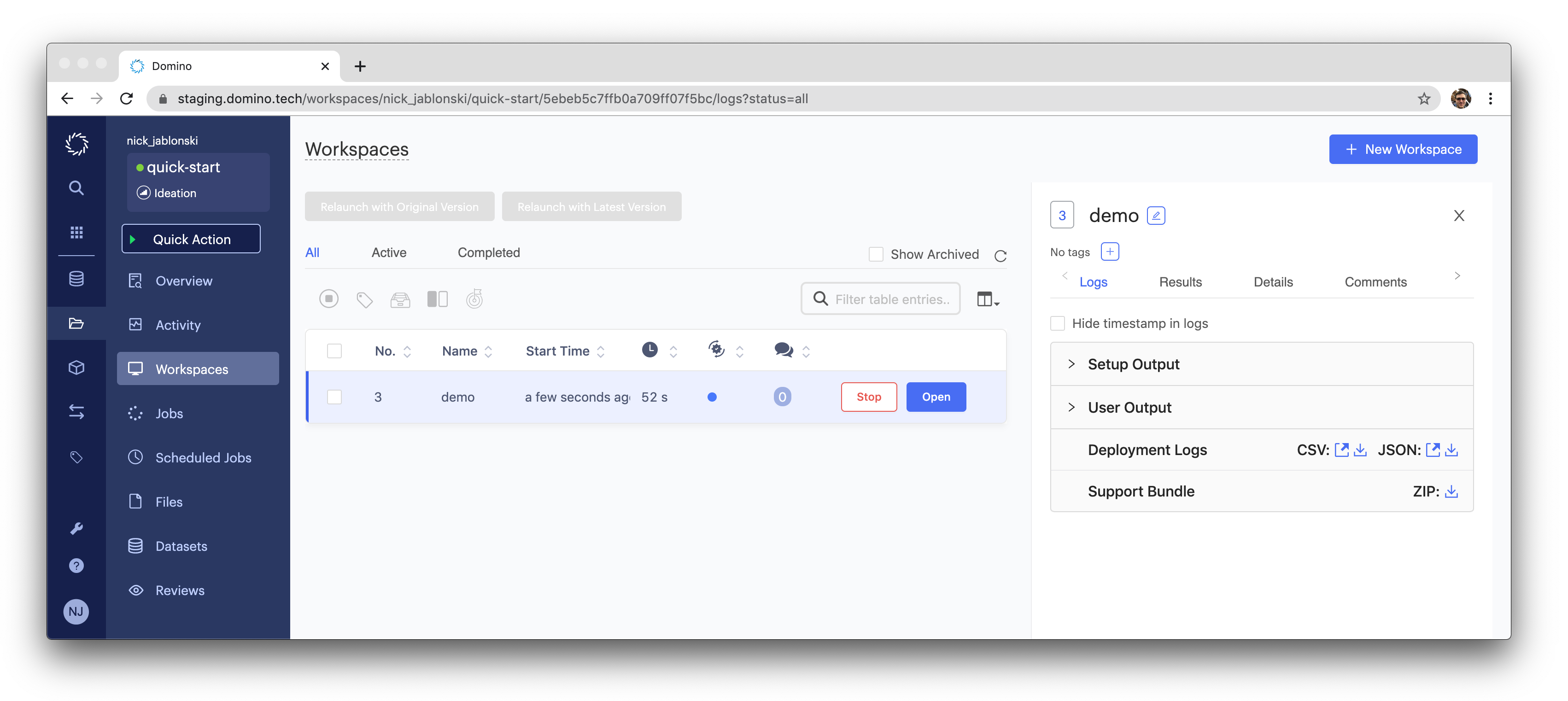

The user interface for workspaces has been redesigned to support new features like separate interface tabs for the Spark UI when using on-demand Spark clusters.

The workspaces dashboard has also been redesigned for simplicity and readability.

To launch a new workspace, click the + New Workspace button at the top right. This button opens a redesigned dialog for starting a workspace.

-

Performance improvements to the workspace startup flow have reduced the minimum time to start a workspace, and additional progress information is now shown during startup.

-

A number of issues related to reverse proxy and subdomain access to workspaces have been fixed.

-

The default resource requests for Domino platform services have been tuned to provide baseline capacity for orchestrating 300 concurrent executions. Read Sizing Infrastructure for Domino to learn more.

-

The error message shown when an environment build fails due to Dockerfile parsing errors is unclear.

-

The links on the Model APIs versions page go to the latest version of the project files instead of the project files for the model version listed.

-

The Domino Minimal Distribution (DMD) fails to install some dependencies.

-

The Support button that is used to contact Domino Technical Support is missing.

-

A workspace will not launch if a snapshot that is marked for deletion is mounted to another project through a shared dataset.

-

Multiple hardware tiers can be designated as the "default" hardware tier for a Domino deployment.

-

When a user clones a hardware tier, the form is populated with values from the source tier. However, the name field is populated from the source tier's ID rather than its Name.

-

The Assets portfolio becomes unresponsive if it contains a large number of assets.

-

Calls are consuming large amounts of CPU and causing Domino to become unresponsive.

-

When publishing a new revision of a model, the model returns 502 errors for several minutes.

-

When restarting a Workspace through the Update Settings modal, External Data Volumes are not mounted in the new Workspace. Follow the steps to mount External Data Volumes. This issue is fixed in Domino 5.9.0.