See also the fleetcommand-agent Release Notes.

The following versions have been validated with Domino 5.0. Other versions may also be compatible but are not guaranteed.

- Kubernetes 1.18–1.20

  Important: See the cluster requirements for important notes about compatibility with Kubernetes 1.20 and above.

- Ray 1.6.0

- Spark 3.1.2

- Dask 2021.10.0

Note: Many of these new features require packages that are specific to the 5.0 Domino Standard Environment (DSE). To use these features, do one of the following:

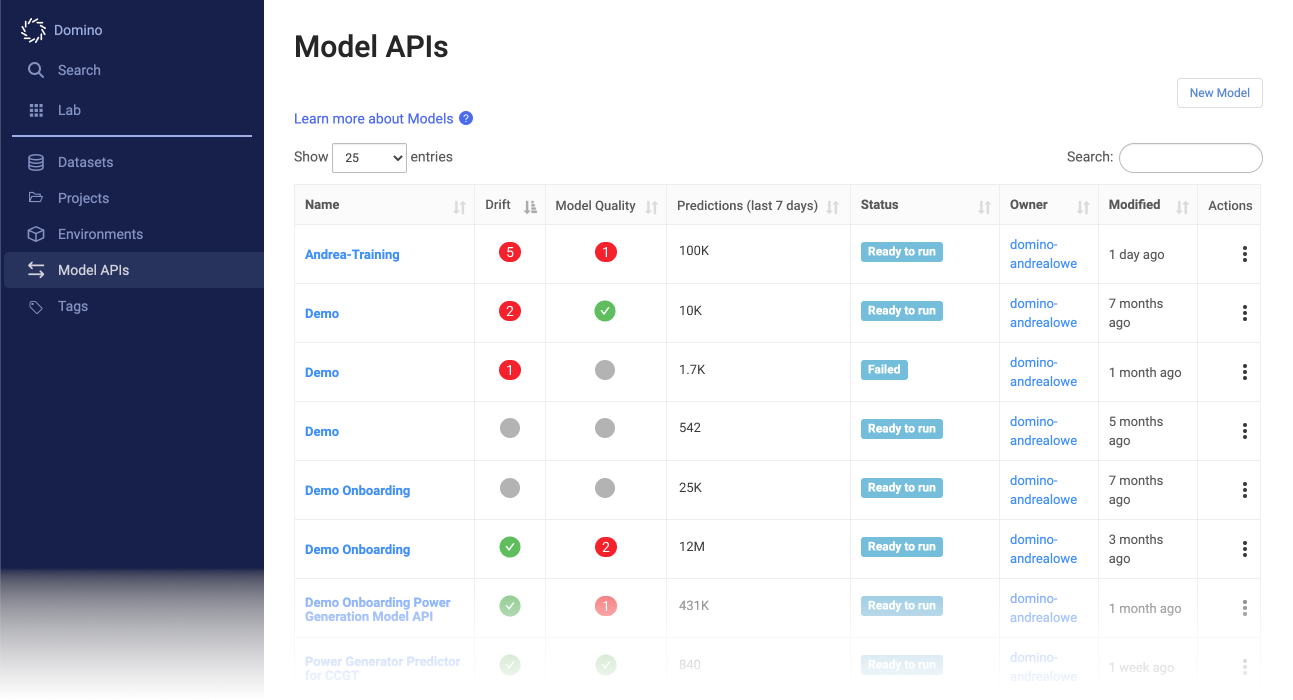

Seamless experience to enable continuous monitoring of Model APIs

Domino’s Model APIs now have a quick and easy set of steps to prepare your model for continuous monitoring and proactive alerting on drift and model quality.

You no longer need to build a separate data pipeline to collect and store a model’s production data for use in monitoring. Using our data capture libraries, you can add simple instrumentation to your training and inference code that is seamlessly integrated with the workbench and deployment platform. Domino automatically analyzes the prediction and training data to generate drift and model quality metrics and alerts. Our integrated workflows support remediation through the new workspace reproducibility feature. We provide an environment where you can debug your code and access prediction data in near real time, in the form of a Domino Dataset, for deeper analysis of drift and model quality results.

- New mechanism for ingesting prediction data
  - New client libraries in Python and R let you instrument your prediction logic to capture prediction data. The captured data is automatically streamed to the Model Monitor, where it is analyzed for data drift and model quality monitoring. The data is also made available in Domino Datasets within your project.
  - Domino automatically infers the model’s schema from the registered Training Set, so you do not need to configure the model separately for monitoring.

- Integrated UI
  - A new Monitoring tab in the Model API section provides detailed drift and model quality information on a per-feature basis. You can enable continuous monitoring of your model from a simple configuration dialog.
  - The new UI also lets you adjust test types, thresholds, schedules, alert notifications, and more.
  - Drift and model quality signals are now integrated into the Model API overview page, giving you a holistic view of the quality of all your models.
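To illustrate what instrumenting prediction logic involves, the following minimal Python sketch records each prediction's input features, output, timestamp, and a unique event ID, and checks inputs against a schema inferred from training rows. `infer_schema`, `PredictionLog`, and the record layout are hypothetical stand-ins chosen for this example; they are not the API of Domino's data capture libraries, which also handle streaming the data to the Model Monitor for you.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import Any, Dict, List


def infer_schema(training_rows: List[Dict[str, Any]]) -> Dict[str, str]:
    """Infer a column -> type-name schema from training rows (hypothetical helper)."""
    schema: Dict[str, str] = {}
    for row in training_rows:
        for column, value in row.items():
            # Keep the first non-None type seen for each column.
            if column not in schema and value is not None:
                schema[column] = type(value).__name__
    return schema


class PredictionLog:
    """Hypothetical in-memory stand-in for a prediction capture client."""

    def __init__(self, schema: Dict[str, str]) -> None:
        self.schema = schema
        self.records: List[Dict[str, Any]] = []

    def capture(self, features: Dict[str, Any], prediction: Any) -> str:
        # Reject inputs that lack a column seen in the training data.
        missing = set(self.schema) - set(features)
        if missing:
            raise ValueError(f"missing features: {sorted(missing)}")
        # A unique event ID lets ground truth be joined to this prediction later.
        event_id = str(uuid.uuid4())
        self.records.append({
            "event_id": event_id,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "features": dict(features),
            "prediction": prediction,
        })
        return event_id

    def to_jsonl(self) -> str:
        """Serialize captured records as JSON Lines, one record per line."""
        return "\n".join(json.dumps(record) for record in self.records)


# Usage: capture every call to the model's predict function.
training_rows = [{"age": 34, "income": 52000.0}, {"age": 51, "income": 61000.0}]
log = PredictionLog(infer_schema(training_rows))


def predict(features: Dict[str, Any]) -> bool:
    prediction = features["income"] > 55000.0  # placeholder model logic
    log.capture(features, prediction)
    return prediction


predict({"age": 40, "income": 58000.0})
```

The event ID is the design point worth noting: ground truth usually arrives later than the prediction, so each captured record needs a stable key to match it against.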

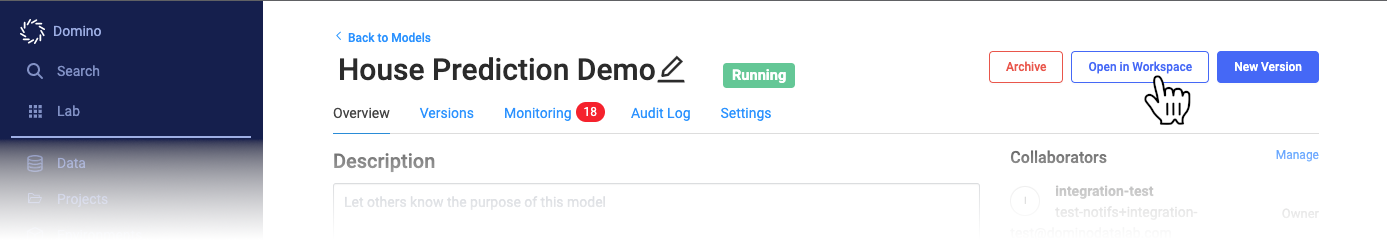

Model remediation and reproducibility

With a new Open in Workspace feature, Domino establishes a “closed-loop” environment to access historical predictions for deeper analysis of issues found during monitoring, reproduce a development environment, and re-deploy a more accurate model faster.

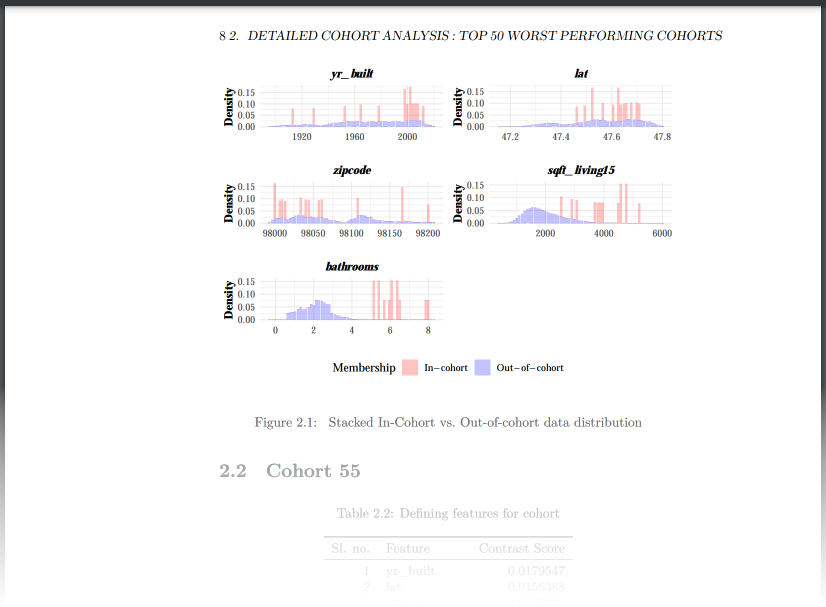

Cohort Analysis

The Cohort Analysis feature gives you the details you need in order to take remedial action when the Model Monitor detects data drift or model quality issues. It identifies underperforming cohorts of data, determines what differentiates this data, and shows you specific features of the data that require examination.

See Cohort Analysis for details and configuration steps.
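To make the idea of an underperforming cohort concrete, here is a minimal sketch that groups scored predictions by the value of one feature and flags groups whose accuracy trails the overall accuracy by more than a chosen margin. This is not Domino's Cohort Analysis algorithm, which also determines what differentiates the cohorts; the function name, the use of accuracy as the metric, and the `margin` parameter are illustrative assumptions.

```python
from collections import defaultdict
from typing import Any, Dict, List


def underperforming_cohorts(
    rows: List[Dict[str, Any]], feature: str, margin: float = 0.1
) -> Dict[Any, float]:
    """Return {cohort value: accuracy} for cohorts of `feature` whose accuracy is more
    than `margin` below the overall accuracy. Each row needs `prediction` and `actual`."""
    if not rows:
        return {}
    counts: Dict[Any, List[int]] = defaultdict(lambda: [0, 0])  # value -> [correct, total]
    total_correct = 0
    for row in rows:
        correct = int(row["prediction"] == row["actual"])
        total_correct += correct
        counts[row[feature]][0] += correct
        counts[row[feature]][1] += 1
    overall = total_correct / len(rows)
    return {
        value: correct / total
        for value, (correct, total) in counts.items()
        if correct / total < overall - margin
    }


rows = [
    {"region": "north", "prediction": 1, "actual": 1},
    {"region": "north", "prediction": 0, "actual": 0},
    {"region": "south", "prediction": 1, "actual": 0},
    {"region": "south", "prediction": 0, "actual": 0},
    {"region": "east", "prediction": 1, "actual": 1},
    {"region": "east", "prediction": 1, "actual": 1},
]
print(underperforming_cohorts(rows, "region"))  # {'south': 0.5}
```

Here the overall accuracy is 5/6, so only the `south` cohort (accuracy 0.5) falls below the 0.1 margin.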

Support for Parquet files

Parquet files are now supported in training, prediction, and ground truth datasets in the Model Monitor. With this change, both CSV and Parquet formats are supported for datasets in monitoring.

See Model Monitoring for complete details.

Install-time updates to Domino

With the model monitoring integration, there are a few changes to the Domino deployment and installation process:

- Minimum requirements

  The minimum node requirements for Domino have increased by 2 nodes (1 platform node + 1 compute node).

- Upgrades

  If the platform cluster does not support autoscaling, the extra nodes must be made available before you upgrade to 5.0. If the cluster supports autoscaling, no changes are needed.

- Install options for the Model Monitor
  - There is no standalone Model Monitor installation and no standalone Domino installation in 5.0.
  - Install-time configuration options to enable or disable the Model Monitor no longer exist.

Cluster auto-scaling

Domino can now auto-scale Spark, Ray, and Dask on-demand clusters to dynamically grow and shrink to optimize resource utilization and reduce cost, especially with bursty workloads. This allows you to start with a small cluster which then automatically scales up and down in response to the resource consumption of your workload.

Cluster auto-scaling is not enabled by default. See the Administration Guide for details about enabling this feature. See Spark cluster auto-scaling, Ray cluster auto-scaling, and Dask cluster auto-scaling for more details and configuration options.
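The grow-and-shrink behavior described above follows the usual target-utilization pattern. The sketch below applies the same proportional rule that the Kubernetes Horizontal Pod Autoscaler documents (desired = ceil(current * current utilization / target utilization)) and clamps the result between a minimum and a maximum worker count. The function and its parameters, including the 0.8 target, are hypothetical and do not correspond to Domino's configuration options, which are described in the auto-scaling pages referenced above.

```python
import math


def desired_workers(
    current_workers: int,
    utilization: float,
    target_utilization: float = 0.8,
    min_workers: int = 1,
    max_workers: int = 10,
) -> int:
    """Proportional scaling rule: grow when utilization exceeds the target,
    shrink when it falls below. Utilizations are fractions of requested resources."""
    if current_workers <= 0:
        return min_workers
    raw = math.ceil(current_workers * utilization / target_utilization)
    # Clamp so a burst cannot exceed max_workers and an idle cluster keeps min_workers.
    return max(min_workers, min(max_workers, raw))


print(desired_workers(2, 1.0))   # busy cluster grows to 3 workers
print(desired_workers(4, 0.1))   # mostly idle cluster shrinks to 1 worker
print(desired_workers(10, 2.0))  # burst is capped at max_workers (10)
```

Production autoscalers typically add a cooldown or stabilization window so that a brief dip in usage does not shrink the cluster immediately; that is omitted here for brevity.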

Note: The preferred method of connecting to a Ray cluster has changed to use a modified version of

Domino Data API

This new Python library lets you access tabular and file-based data with consistent access patterns, including SQL-based access to tabular data. You do not need to restart a workload to install drivers, and connectors can be queried on the fly. Results are available as dataframe abstractions for popular libraries.

Note: This is a preview feature, subject to change in future releases.

In Domino 5.0, this new data access method is supported for Snowflake, Redshift, and S3, with additional data sources planned for future releases.

See the Domino Data API documentation.

Training Sets

Training sets persist dataframes for model training or analysis. You can store or load multiple versions of dataframes, produced by data source queries or constructed by any other method you like. Training sets are stored alongside projects, using the same permissions as the project. In addition to training data, training sets capture metadata to establish a baseline for integrated model monitoring. Performance is optimized by caching expensive retrieval operations.

See Training Sets Use Cases for information about how to create, retrieve, and update training sets.
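Conceptually, a training set is a named, versioned collection of data plus metadata. The following sketch models that idea with an in-memory store keyed by name and version number. `TrainingSetStore` and its methods are hypothetical and are not the Domino training sets API; rows are plain dictionaries standing in for dataframes so the example has no dependencies.

```python
import copy
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class TrainingSetVersion:
    number: int
    rows: List[Dict[str, Any]]
    # Metadata (for example, the target column) that monitoring could use as a baseline.
    meta: Dict[str, Any] = field(default_factory=dict)


class TrainingSetStore:
    """Hypothetical in-memory store of versioned training data, keyed by name."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[TrainingSetVersion]] = {}

    def store(self, name: str, rows: List[Dict[str, Any]], **meta: Any) -> int:
        versions = self._versions.setdefault(name, [])
        # Deep-copy so later changes to the caller's rows don't alter a stored version.
        version = TrainingSetVersion(len(versions) + 1, copy.deepcopy(rows), dict(meta))
        versions.append(version)
        return version.number

    def load(self, name: str, number: Optional[int] = None) -> TrainingSetVersion:
        """Return the requested version, or the latest when number is None."""
        versions = self._versions[name]
        if number is None:
            return versions[-1]
        if not 1 <= number <= len(versions):
            raise KeyError(f"{name} has no version {number}")
        return versions[number - 1]


# Usage: store two versions, then load the latest and the first.
store = TrainingSetStore()
v1 = store.store("churn", [{"age": 34, "churned": 0}], target="churned")
v2 = store.store("churn", [{"age": 34, "churned": 0}, {"age": 51, "churned": 1}], target="churned")
latest = store.load("churn")
first = store.load("churn", 1)
```

Storing immutable copies per version is what makes an older version reproducible after the source data changes.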

Vault integration for secret storage

Domino now stores environment variables, user API keys, and data source access secrets at rest, in encrypted form, inside a pre-configured internal HashiCorp Vault.

Improvements to data sources

Data connectors (UI, SDK, and API) provide access to popular data sources in a structured, self-service, and highly secure manner.

When you have a configured data source, you can now copy a code snippet and paste it into your query code for easy access to the data.

- Credentials are now stored securely.

  Data access credentials are now stored, encrypted, in an internal HashiCorp Vault.

- You can share data sources with other users and organizations.

- You can query multiple data sources.

Git integration improvements

This release includes smoother integration for Git-based projects.

- Switch Git branches in your Domino workspace.

  Users now have full access to Git branches during model development for seamless collaboration.

- Resolve merge conflicts directly in your Domino workspace.

  Domino 5.0 improves on existing capabilities by simplifying the process for resolving conflicts when merging code.

Checkpoints for durable workspaces

Durable workspaces, introduced in Domino 4.4, now let you create "checkpoints": reproducible commit points that you can return to at any time to review the history of your work or branch experiments in new directions. A new Open in Workspace button in the Model API interface lets you open a model at any checkpoint. You can also review the workspace session history. See Recreate a Workspace From A Previous Commit.

Note: Only models published in 5.0 and later can be opened this way. For models originally published with an earlier release, the Open in Workspace button is grayed out. Additionally, commits made outside of Domino cannot be used as checkpoints.

Compute cluster dedicated hardware tiers

A new Restrict to compute cluster setting specifies the compute cluster types (such as Spark, Ray, or Dask) that should exclusively use a given Hardware Tier. See the Administration Guide for details.

Improvements to Domino Environments

Stability and security are improved in the Domino Environments for 5.0. These are the key changes in the 5.0 Domino Environment images:

- Fixed the R kernel in Jupyter.
- Added Domino-specific packages, such as domino-data-capture and dominodatalab-data, to the environments.
- Added the Spark Cluster and Spark Compute Environments by default to new deployments to support the Cohort Analysis feature.
- Fixed Jupyter notebook subdomain support.
- Added more Ray packages to the Ray Compute Environment.

- The Model Monitor is now compatible with Istio.

- The Domino CLI installer no longer requires administrator privileges on Windows or Linux.

  On Mac OS X, the installer prompts you for administrator credentials. If you do not supply them, you can still install and use the CLI by updating your PATH.

- The default values of these configuration keys have been doubled to support workspace reproducibility:
  - com.cerebro.domino.workbench.workspace.maxWorkspacesPerUserPerProject
  - com.cerebro.domino.workbench.workspace.maxWorkspacesPerUser
  - com.cerebro.domino.workbench.workspace.maxWorkspaces

- The GP3 volume type is now supported and used by default when deploying in AWS.

  GP3 is now the default storage type for all platform and compute nodes in new AWS deployments. In existing deployments, compute nodes can use GP3 volumes, but switching the storage volume type of platform nodes to GP3 is not supported.

  To use GP3 volumes for compute nodes in 5.0.0:

  1. Create a new GP3 storage class.
  2. Set the com.cerebro.domino.computegrid.kubernetes.volume.storageClass central configuration value to dominodisk-gp3.
  3. Restart Domino.

- The values of known configuration keys are now validated in the central config editor UI.

- New configuration keys:
  - metrics_server.install determines whether the Kubernetes metrics-server component is installed as part of the installation or upgrade process. The default is false; you only need to set it to true in EKS or any other Kubernetes cluster type where the metrics-server component is not provided out of the box.
  - vault.enabled specifies whether the internal vault is enabled. In 5.0, this must be set to true.

- The helm.version configuration key has been removed. Helm 3 is now the only supported version.

- Domino can now import environment variables from projects that are owned by the organization.

- Workspaces become unstoppable, and their statuses don’t change, when the timestamps in the run’s status changes array are incorrect.
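Step 1 of the GP3 procedure described earlier (creating a GP3 storage class) could look like the following sketch, which prints a Kubernetes StorageClass manifest as JSON that `kubectl apply -f` accepts. It assumes the AWS EBS CSI driver (provisioner `ebs.csi.aws.com`) is installed in the cluster and that the class is named `dominodisk-gp3` to match the central configuration value; the `reclaimPolicy`, `volumeBindingMode`, and `allowVolumeExpansion` settings are illustrative choices, not documented Domino requirements.

```python
import json
from typing import Any, Dict


def gp3_storage_class(name: str = "dominodisk-gp3") -> Dict[str, Any]:
    """Build a StorageClass manifest for gp3 EBS volumes (assumes the EBS CSI driver)."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "ebs.csi.aws.com",
        "parameters": {"type": "gp3"},
        "reclaimPolicy": "Delete",
        # Delay provisioning until a pod is scheduled, so the volume lands in that node's zone.
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowVolumeExpansion": True,
    }


if __name__ == "__main__":
    # Example: python gp3_sc.py > dominodisk-gp3.json && kubectl apply -f dominodisk-gp3.json
    print(json.dumps(gp3_storage_class(), indent=2))
```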

These are issues known to exist in the 5.0.0 release that will be fixed in an upcoming release.

- When you click Assets in the Control Center’s navigation pane, Domino displays a "Failed to get assets" message and takes a long time to load the assets.

- You cannot delete, rename, or move a .gitignore file in a Domino File System project.
  - If you delete the file, it remains.
  - If you move the file, both the original and the target file exist.
  - If you rename the file, both the source and the renamed file exist.

- If you rerun a successful job that accesses files from a dataset in a project owned by an organization, the job fails with file not found errors. Initial runs of the job succeed, but reruns fail with logging similar to the following:

      2022-10-06 04:34:32 : FileNotFoundError: [Errno 2] No such file or directory: '/domino/datasets/local/reruns_fail/file.txt'

  To work around this, start the execution as a new job.

- If you created a new DSE-based environment or rebuilt an existing one between January 14-21, those environments may contain outdated packages that prevent new 5.0 features from working correctly. You can resolve this issue by rebuilding those environments or by downloading the latest packages from PyPI at https://pypi.org/project/dominodatalab-data/.

- Email notifications from the Model Monitor only work over SMTP+STARTTLS, which is usually deployed on ports 587, 25, and 2587. SMTPS, which is usually deployed on ports 465 and 2465, is not supported. If you are not receiving emails from the Model Monitor and the Model Monitor is configured to send email over port 465, try changing the email settings to use port 587 instead. You can configure the SMTP port for the Model Monitor email service at Model Monitor > Settings > Notification Channels > SMTP Port.

- When you export a Domino model to Amazon SageMaker and create an endpoint from the exported model, the SageMaker endpoint may fail with the error "The primary container for production variant variant-name-1 did not pass the ping health check." To work around the issue:
  1. Add USER root to the environment’s Dockerfile instructions.
  2. Publish the model API using the updated instructions.
  3. Export the same image to SageMaker and create an endpoint.

- When you use a Ray workspace to run a GPU workload that uses the filelock._unix submodule, you may encounter the error ModuleNotFoundError: No module named 'filelock._unix'; 'filelock' is not a package. This can occur in environments that are based on the quay.io/domino/ray-environment:ubuntu18-py3.8-r4.1-ray1.6.0-domino5.0 image tag. To work around this issue, pin the workspace’s version of filelock with this Dockerfile instruction:

      RUN pip install filelock==3.0.12 --user

- When building Model API images with the V2 ImageBuilder (Forge) on deployments with Istio enabled, Repocloner is not supported. On deployments with Istio enabled, the ShortLived.RepoclonerImageBuilds configuration key must therefore be set to "false" if the ShortLived.ImageBuilderV2 key is set to "true".

- After upgrading Kubernetes, "X509 certificate signed by unknown authority" errors appear and Domino fails to deploy workspaces, jobs, apps, models, and so on. This can occur if you upgrade to a Kubernetes version where Docker is not the default runtime. Domino depends on some Docker functionality (for managing certificate trust) that is not available in containerd without special configuration. See the cluster requirements for important notes about compatibility with Kubernetes 1.20 and above, and contact your Domino customer success team for guidance.

- Project collaborators with Launcher User or Results Consumer permissions can access the project’s files.

- Users may be unable to integrate with Jira because of authentication issues.

- Environment detail pages load slowly and time out if they have < 50 revisions and many linked projects.

- The output of the gateway/runs/getByBatchId endpoint is missing the runs and nextBatchId objects. This issue is resolved in Domino 5.2.0.

- Sync operations for deployments backed by SMB file stores can sometimes result in unintended file deletions. This issue is fixed in Domino 5.2.0.

- You cannot delete a .gitignore file in a Domino File System (DFS)-based project. This issue is fixed in Domino 5.2.0.

- Jobs can fail or become stuck in a Pending state when using files from an S3 data source. This issue is fixed in Domino 5.2.0.

- Apps may fail with unusual error messages. This issue is resolved in Domino 5.2.0.

- Opening an uncached workspace can cause the workspace to get stuck in the "Pulling" status. This is fixed in Domino 5.1.0.

- When an API key is explicitly passed as an api_key argument and the DOMINO_TOKEN_FILE environment variable is set, Domino uses token authentication instead of the API key. This issue is resolved in Domino 5.2.0.

- Links generated by Flask apps can result in unwanted 302 redirect behavior. This issue is fixed in Domino 5.2.0.

- When a Model API fails while retrieving files from Git using SSH keys, the private key and the key password, if any, may be exposed in the Domino log. This issue is resolved in Domino 5.2.0.

- The Import Project drop-down does not display projects owned by organizations of which you are a member. This issue is resolved in Domino 5.2.0.

- MATLAB environment builds may fail, or MATLAB workspaces may be unresponsive.

- You might experience slow loading times when you open the Environments, Workspaces, Model APIs, or Projects pages.

- When starting a workspace, the application sometimes gets stuck in the Pulling status, even though the logs indicate that the workspace started successfully. If this occurs, stop and start the workspace again. This issue is fixed in Domino 5.2.0.

- The Kolmogorov–Smirnov Test, Wasserstein distance, and Energy Distance tests can lead to unpredictable results. Do not use these tests.

- After upgrading to 4.6.4 or higher, launching a job from a Git-based project that uses a shared SSH key or personal access token results in a "Cannot access Git repository" error.

- In a Git-based project, requirements.txt must be in your project’s Artifacts folder, rather than at the root level of your project files, to be picked up by an execution.

  Tip: To avoid falling out of sync with the Git repository, always modify requirements.txt in the repository first and then copy it to your project’s Artifacts folder.

- After you migrate from 4.x, scheduled jobs fail until the users that own them log out and log in again. This issue is resolved in Domino 5.3.0.

  Tip: You can also resolve this issue by removing all existing tokens in MongoDB, like this:

      db.user_session_values.remove({"key": "jwt"})

- Domino can become inaccessible after a fixed uptime period when running on Kubernetes 1.21 or higher. See this knowledge base article for the workaround. This issue is fixed in Domino 5.3.1.

- If you upgrade to this release from Domino 3.x or 4.x, Git credentials might be omitted from the menu when you create a new Git-based project. A workaround is available from Domino’s Customer Success team.

- Model Monitoring data sources aren’t validated. If you enter an invalid bucket name and save, the entry is accepted. However, you won’t be able to see metrics for that entry because the name points to an invalid bucket.

- Configuring prediction when setting up prediction capture for Model APIs causes predictions not to be captured. This issue is resolved in 5.4.0.

- When you restart a Workspace through the Update Settings modal, External Data Volumes are not mounted in the new Workspace. Follow the steps to mount External Data Volumes. This issue is fixed in Domino 5.9.0.

- Viewing dataset files in an Azure-based Domino cluster may lock files, preventing them from being deleted or modified. Restarting the Nucleus frontend pods releases the lock. This issue is fixed in Domino 5.11.1.
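A few of the workarounds in the known issues above are easy to script. The following sketch covers three of them: recommending a STARTTLS port for Model Monitor email (and probing a mail server with Python's standard `smtplib`), checking the Istio-related image builder keys, and copying requirements.txt from the Git checkout into the Artifacts folder. The function names are hypothetical, configuration values are modeled as a plain dictionary rather than read from Domino's central config, and the default paths `/mnt/code` and `/mnt/artifacts` are assumptions about where a Git-based project's repository and Artifacts folder are mounted.

```python
import shutil
import smtplib
from pathlib import Path
from typing import Dict

# Ports named in the known issues: SMTP+STARTTLS works, implicit-TLS SMTPS does not.
STARTTLS_PORTS = {587, 25, 2587}
SMTPS_PORTS = {465, 2465}


def recommended_smtp_port(configured_port: int) -> int:
    """Suggest port 587 when the Model Monitor is set to an unsupported SMTPS port."""
    return 587 if configured_port in SMTPS_PORTS else configured_port


def server_offers_starttls(host: str, port: int = 587, timeout: float = 10.0) -> bool:
    """Connect in plain text and report whether the server advertises STARTTLS."""
    with smtplib.SMTP(host, port, timeout=timeout) as server:
        server.ehlo()
        return server.has_extn("starttls")


def istio_image_builder_conflict(config: Dict[str, str], istio_enabled: bool) -> bool:
    """True when Istio and ImageBuilderV2 are on but Repocloner builds are not set to "false"."""
    builder_v2 = config.get("ShortLived.ImageBuilderV2", "").lower() == "true"
    repocloner_off = config.get("ShortLived.RepoclonerImageBuilds", "").lower() == "false"
    return istio_enabled and builder_v2 and not repocloner_off


def sync_requirements(repo_dir: str = "/mnt/code", artifacts_dir: str = "/mnt/artifacts") -> Path:
    """Copy requirements.txt from the Git checkout into the Artifacts folder."""
    source = Path(repo_dir) / "requirements.txt"
    target_dir = Path(artifacts_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    # Copy rather than move, so the repository stays the source of truth, as the tip advises.
    return Path(shutil.copy2(source, target_dir / "requirements.txt"))
```

The Istio check treats a missing RepoclonerImageBuilds key as a conflict, because the known issue requires the key to be explicitly set to "false".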