See also the fleetcommand-agent Release Notes.

The following versions have been validated with Domino 5.2.0. Other versions might also be compatible but are not guaranteed.

- Kubernetes - see the Kubernetes compatibility chart

- Ray - 1.12.0

- Spark - 3.2.1

- Dask - 2022.04.2

- Dask ML - 2021.11.30

- MPI - 4.1.3

IntelliSize for durable workspaces

Domino now provides intelligent workspace features designed to reduce your cloud compute and storage costs, maximize performance, and ensure that resources are always available to users.

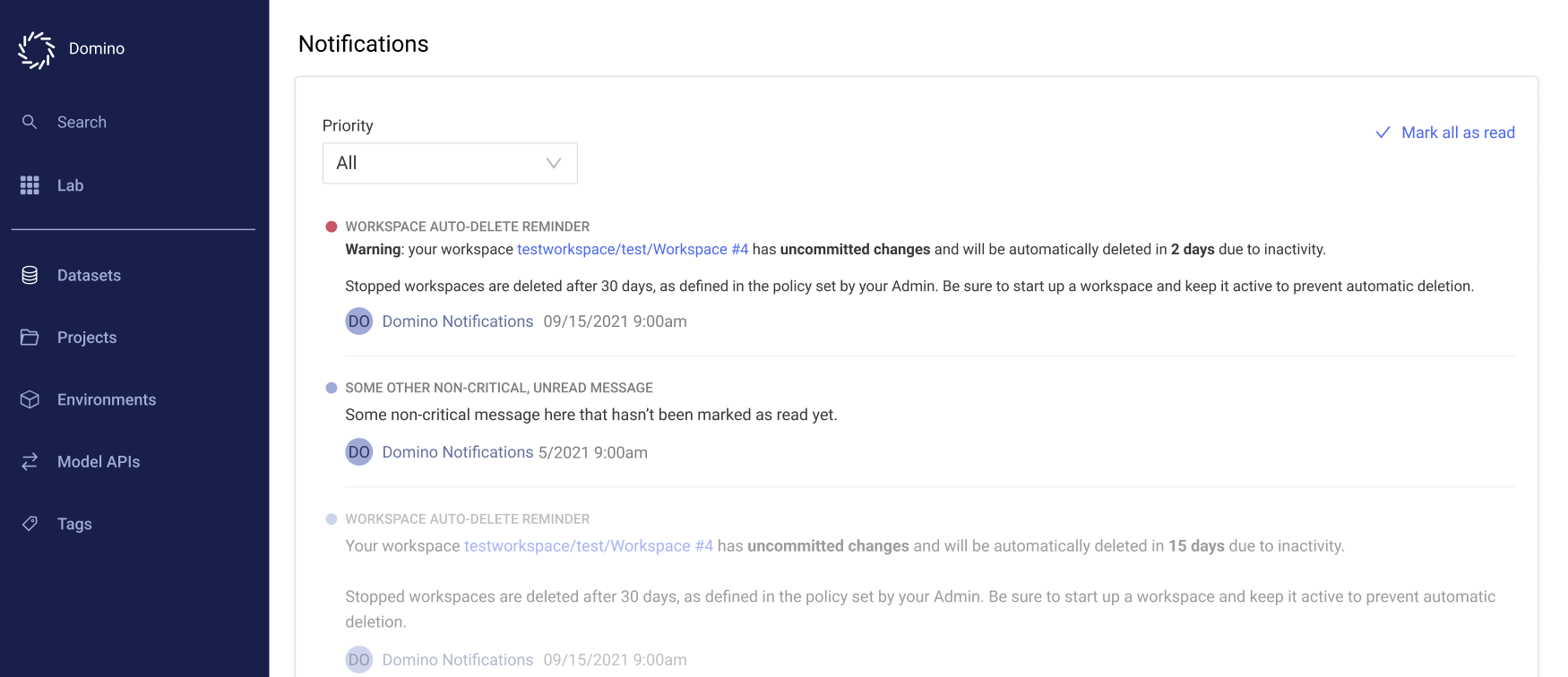

Automatic workspace deletion

Domino can automatically delete unused workspaces to make more storage available.

An administrator must enable this feature. If this feature is enabled, Domino automatically deletes workspaces that have been stopped for 10 days plus a 30-day grace period.

An administrator can configure both of these periods.

You are notified in advance (in the Domino application and through email) when your workspaces are scheduled for automatic deletion.

To remove a workspace that is scheduled for deletion from the auto-delete schedule, start it again. The auto-delete period begins again the next time the workspace is stopped.

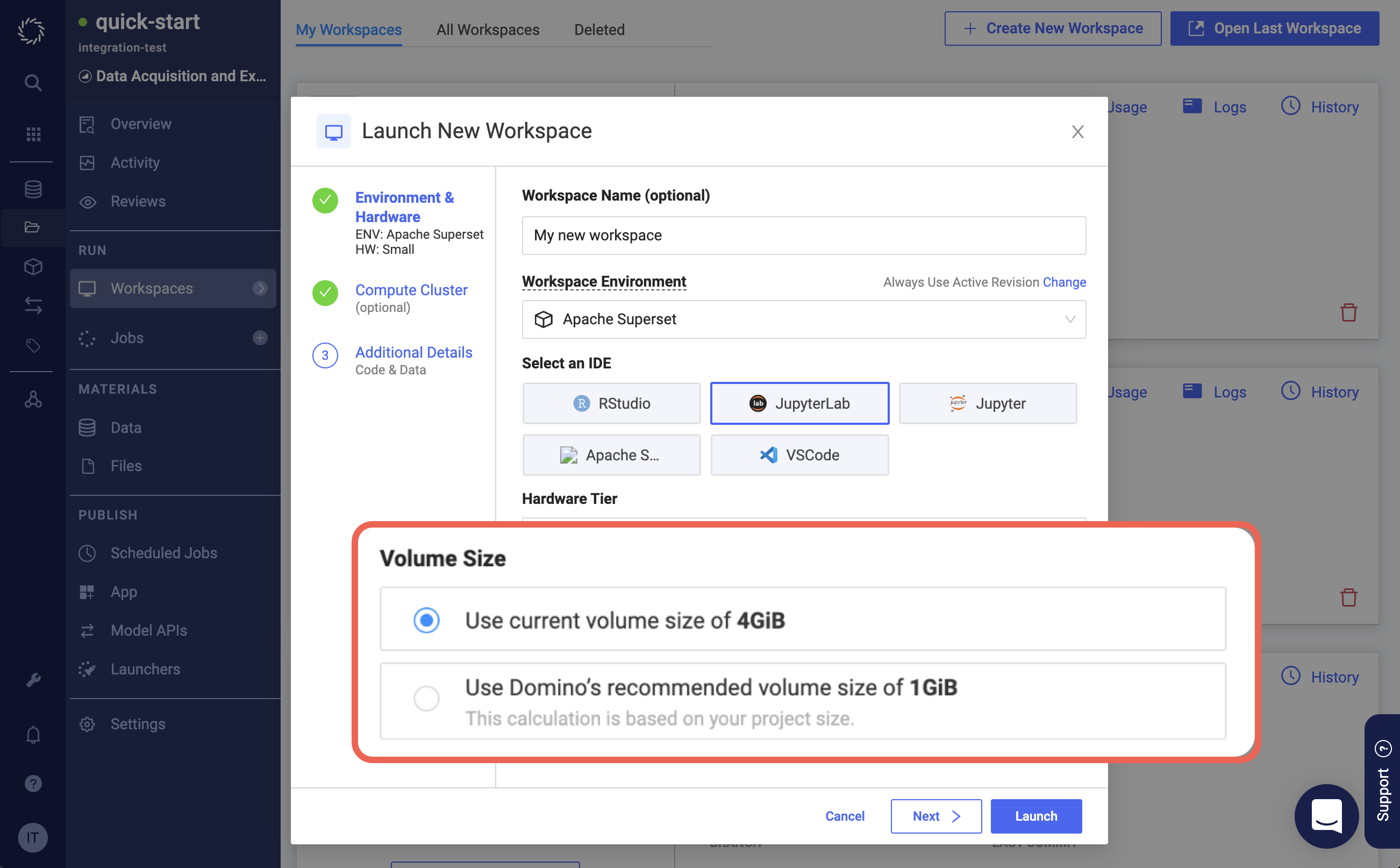
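The timing described above can be sketched with simple date arithmetic. This is a minimal illustration, assuming the stopped period and the grace period simply add together and that the default values (10 and 30 days) are in effect. The function and constant names are hypothetical and are not part of any Domino API.

```python
from datetime import datetime, timedelta

# Defaults taken from the text above; an administrator can configure both.
STOPPED_PERIOD_DAYS = 10
GRACE_PERIOD_DAYS = 30


def scheduled_deletion_date(stopped_at,
                            stopped_days=STOPPED_PERIOD_DAYS,
                            grace_days=GRACE_PERIOD_DAYS):
    """Return the earliest time a stopped workspace could be deleted.

    Assumes the stopped period and the grace period are added together.
    """
    return stopped_at + timedelta(days=stopped_days + grace_days)


# A workspace stopped on 1 June 2022 would be eligible 40 days later.
first_stop = datetime(2022, 6, 1)
print(scheduled_deletion_date(first_stop).date())  # 2022-07-11

# Starting the workspace resets the schedule; the period begins again
# the next time the workspace is stopped.
second_stop = datetime(2022, 6, 20)
print(scheduled_deletion_date(second_stop).date())  # 2022-07-30
```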

Persistent volume provisioning recommendations

You can skip the recommendation to use the default value configured by your administrator.

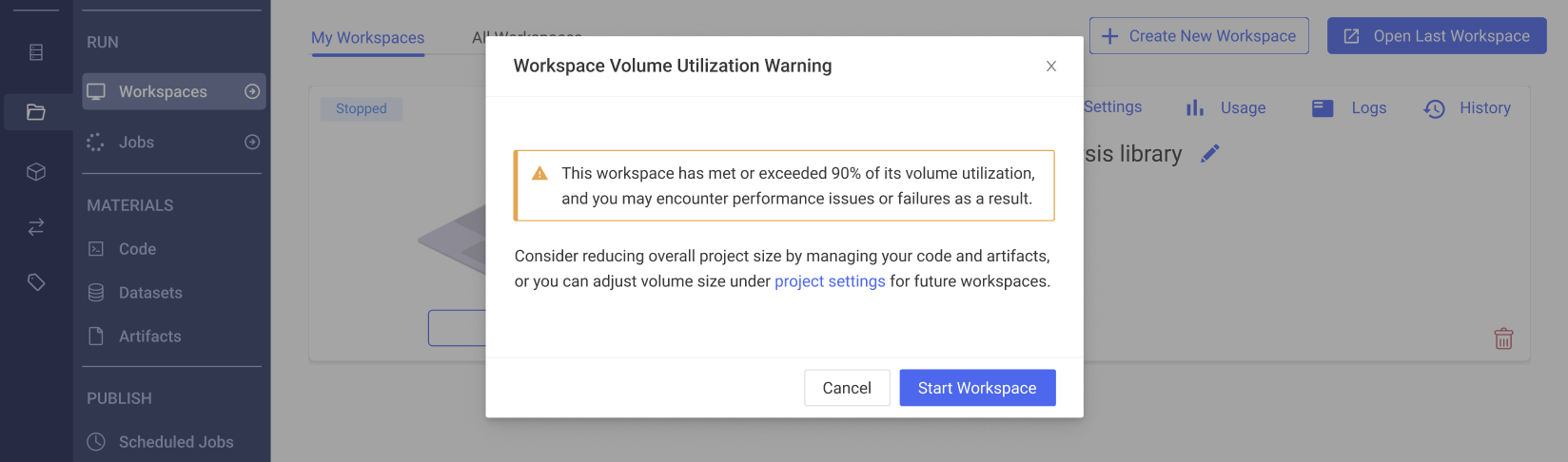

Better messaging about volume utilization

Domino notifies you when a workspace or job is utilizing more than 90% of its volume size (configurable by an administrator), or when it fails to start because it has already exceeded its volume utilization limits.

Log messages also include more details and actionable information when a workspace or job fails to load.
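To see how close a volume is to the 90% threshold from inside a running workspace, a check along the following lines might help. This is only a sketch of the arithmetic, not how Domino itself measures utilization. The function names are hypothetical, and the path passed to `check_path` is an assumption that you should replace with the mount point of your volume.

```python
import shutil

# Mirrors the default notification threshold mentioned above (configurable
# by an administrator in Domino; hard-coded here for illustration).
UTILIZATION_THRESHOLD = 0.90


def utilization(used_bytes, total_bytes):
    """Return the fraction of the volume in use, between 0.0 and 1.0."""
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    return used_bytes / total_bytes


def exceeds_threshold(used_bytes, total_bytes, threshold=UTILIZATION_THRESHOLD):
    """Return True when utilization is strictly above the threshold."""
    return utilization(used_bytes, total_bytes) > threshold


def check_path(path="."):
    """Check the filesystem that contains `path` (hypothetical mount point)."""
    usage = shutil.disk_usage(path)
    return exceeds_threshold(usage.used, usage.total)


if __name__ == "__main__":
    print("Above threshold:", check_path("."))
```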

Environment revisioning

By default, the active revision of your environment is used when you start your workspace, job, or scheduled job. Environment revisioning lets you select a different revision of your environment when starting a scheduled job.

Publish models to Snowflake

You can build models in Domino and use them in Snowflake queries. Snowflake models perform in-database scoring. By processing data in-place, your models perform faster and cost you less than if you transferred large amounts of data between systems.

If you want to export to other external providers such as NVIDIA or AWS SageMaker, use the /v4/models/buildModelImage endpoint.

See Export to SageMaker, Export to NVIDIA, and Use Models in Snowflake Queries for more information about exports.

Snowflake as a data source for Model Monitoring

You can now use Snowflake as a data source for model monitoring data. See Connect a Data Source.

Advanced authentication for data sources

In addition to basic authentication, Domino now supports OAuth for Amazon S3 data sources.

An administrator must enable these new authentication methods. See Central Configuration for a reference to the relevant configuration keys.

New data connector for BigQuery

You can create a Domino data source to connect to your BigQuery data store. See Connect to BigQuery for instructions.

- Model publishing UX refactoring

  Currently, you publish models to Apps, Launchers, Model APIs, and Scheduled Jobs. Domino introduced an Exports page to manage models published to external systems like Snowflake, AWS SageMaker, and NVIDIA Fleet Command. This is an initial step towards simplifying the model publishing process. Ultimately, Domino will consolidate publishing methods to simplify the user interaction.

- When installing Python/R packages in your Domino environments, you no longer need to run the installation commands as root. Only OS-level commands (such as `apt-get install …`) must be run as root.

- The Rerun with latest job launch shortcut is now called Re-run with current version and uses the current active revision of the environment for both the main execution and the cluster environment. This is a change from the previous behavior where the project’s current default environment and revision were used for the main execution.

- Model API image builds using Forge (ImageBuilder version 2) now use Repocloner (a built-in binary, not a service) instead of Replicator for cloning project files. You can revert to the previous behavior (using Replicator) by setting the `ShortLived.RepoclonerImageBuilds` feature flag to `false`.

- You can use the new `com.cerebro.domino.repocloner.javaOpts` Central Configuration key to adjust the maximum size of the memory heap. You can also add Java options to the value. The Repocloner replaces the Replicator for model builds. See Central Configuration for details.

Domino 5.2.0 introduces new public REST API endpoints. Some are entirely new endpoints, while others deprecate some of the previous APIs.

API endpoints are available for:

| New endpoint | Beta? | Deprecates |
|---|---|---|
| | ✓ | |
| | ✓ | |
| | ✓ | |
| | ✓ | |
| | ✓ | |
| | ✓ | |
| | ✓ | |
| | ✓ | |
| | ✓ | |
| | ✓ | |

- When exporting notebooks from JupyterLab workspaces, the system shows a 403 Forbidden Error with Blocking Cross Origin message.

- Now the Import Project drop-down includes projects owned by organizations of which you are a member. This fixes a known issue in Domino 4.4, 4.5, and 4.6.

- Fixed an issue that prevented users from entering a URI in the New Environment window.

- Domino now honors any API key explicitly passed as an `api_key` argument, even when the `DOMINO_TOKEN_FILE` environment variable is set. Likewise, it honors any token passed as a `token` argument, even when the `DOMINO_USER_API_KEY` environment variable is set.

  Note: If `DOMINO_TOKEN_FILE` and `DOMINO_USER_API_KEY` are both set and no API key is passed as an argument, then `DOMINO_TOKEN_FILE` takes precedence. Likewise, if both a `token` and an `api_key` argument are specified, then the token argument takes precedence.

- Fixed an issue that sometimes caused the Domino application to hang with a "Waiting for available socket" error.

- Fixed an issue that sometimes caused apps to fail with unusual error messages.

- Fixed an issue that sometimes caused jobs to fail or become stuck in a `Pending` state when using files from an S3 data source.

- When you set the default environment on the Environments page and then restart the service, Domino no longer reverts to the default environment previously set on the Central Configuration page.

- Fixed an issue that prevented deleting a `.gitignore` file in a Domino File System (DFS)-based project.

- Fixed a bug that allowed JavaScript to be executed when viewing a raw HTML file while `com.cerebro.domino.frontend.allowHtmlFilesToRender` is `false`.

- Flask apps can now generate links without unwanted `302 redirect` behavior.

- Database migrations that run during upgrades now stop when a failure occurs, instead of continuing with the remaining migrations. The last exception is stored in the database for troubleshooting purposes.

- Fixed an issue that prevented workspaces from launching when the name of the organization or user that owned the project started with `data`.

- The `gateway/runs/getByBatchId` endpoint now produces the correct output, including the `runs` and `nextBatchId` objects.

- Fixed an issue that caused file deletions during sync operations for deployments backed by SMB file stores.

- Fixed an issue that caused MATLAB environment builds to fail and MATLAB workspaces to become unresponsive.

- Fixed an issue that caused the application to get stuck displaying the Pulling status after starting a workspace.

- URLs in the Job Details tab no longer include single quotes, which prevented users from viewing the job file.
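The credential precedence described in the `api_key` and `token` item above can be sketched as a small resolver. This is an illustration of the stated rules only, not the actual client implementation. The function name `resolve_credentials` and its return format are hypothetical, and the sketch assumes that any explicit argument outranks both environment variables.

```python
import os


def resolve_credentials(api_key=None, token=None, env=None):
    """Return a (kind, value) pair following the precedence rules above.

    `env` defaults to os.environ; pass a dict to test without touching
    the real environment.
    """
    env = os.environ if env is None else env

    # Explicit arguments win over environment variables.
    # If both are passed, the token argument takes precedence.
    if token is not None:
        return ("token", token)
    if api_key is not None:
        return ("api_key", api_key)

    # With no explicit arguments, DOMINO_TOKEN_FILE takes precedence
    # over DOMINO_USER_API_KEY.
    if env.get("DOMINO_TOKEN_FILE"):
        return ("token_file", env["DOMINO_TOKEN_FILE"])
    if env.get("DOMINO_USER_API_KEY"):
        return ("api_key", env["DOMINO_USER_API_KEY"])

    raise ValueError("no credentials provided")


# An explicit api_key is honored even though DOMINO_TOKEN_FILE is set.
print(resolve_credentials(api_key="abc", env={"DOMINO_TOKEN_FILE": "/tmp/token"}))
```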

- Workspaces become unstoppable and their statuses don’t change when the timestamps on the run’s status changes array are incorrect.

- After deleting branches that were created in GitHub but not pulled (that is, they are not local on Domino), they are still shown in the Code menu in the Data tab of the workspace.

- If you are comparing jobs that have large numbers of output files, you might encounter a `502 Bad Gateway` error.

- When you click Assets in the Control Center’s navigation pane, Domino displays a "Failed to get assets" message and takes a long time to load the assets.

- You cannot delete, rename, or move a `.gitignore` file in a Domino File System project.

  - If you delete the file, it remains.

  - If you move the file, the original and target file both exist.

  - If you rename the file, the source and renamed file both exist.

- If you rerun a successful job that accesses files from a dataset in a project owned by an organization, the job fails with `file not found` errors. Initial runs of the jobs succeed, but reruns fail with logging similar to the following:

  `2022-10-06 04:34:32 : FileNotFoundError: [Errno 2] No such file or directory: '/domino/datasets/local/reruns_fail/file.txt'`

  To work around this, start the execution as a new job.

- If you use a forward slash (`/`) in a Git branch name, the forward slash and any preceding text are stripped. For example, if the branch was named `test/branch1`, it is renamed `branch1`.

  - In a workspace in a Git-based project, you can’t pull data because the branch doesn’t exist. You can push because this creates a new branch named `branch1`.

  - In a Domino File System project that uses imported code repositories, you can push and pull directly to and from the branch (`test/branch2`) if you don’t change the branch in the menu. If you change the branch, you can’t return to the selection (`test/branch2`) because `test/` is stripped. If you push to `branch2`, this creates a new branch named `branch2`.

- In Domino’s model monitoring, the graph views are skewed incorrectly when normalized values are small.

- ImageBuilder V3 does not correctly install or function when installing Domino 5.2.0 in these environments:

  - Kubernetes v1.19

  - Offline or private registry environments, where the Domino installer cannot retrieve images from public repositories

  Domino 5.2.1 resolves this issue.

- If you have the configuration key `refreshTokenInRun` set to `true` and are migrating from versions 4.3.x through 4.6.x to 5.x, Scheduled Jobs will no longer work. JWT tokens generated prior to Domino 5.x are not compatible with the offline tokens used in Domino 5.x. To remedy this, individual users must log out of Domino, then log back in. Administrators can use the following command to remove all existing tokens from MongoDB:

  `db.user_session_values.remove({"key": "jwt"})`

- Due to a bug in Rancher 2.6.0 and above that makes it unable to properly create and manage TLS certificates, Domino is unable to deploy and validate Kubernetes >= 1.21 for Rancher deployments.

- You might experience slow loading times when opening the Environments, Workspaces, Model APIs, or Projects pages.

- You might experience slow response times with the Projects API, Projects v4 API, and the `/v4/gateway/projects/findProjectByOwnerAndName` endpoint.

- The Kolmogorov–Smirnov Test, Wasserstein distance, and Energy Distance tests can lead to unpredictable results. Do not use these tests.

- The lists of projects and scheduled runs might load slowly when you have many projects and models.

- When a node runs low on available memory, Kubernetes might evict job and workspace pods residing on that node, and those pods might fail to start up on a different node. To minimize pod eviction, set the hardware tier memory limit to be equal to the memory request.

- Setting `com.cerebro.domino.workloadNotifications.isRequired` to `true` does not prevent users from turning off notifications, and changing the value of `com.cerebro.domino.workloadNotifications.maximumPeriodInSeconds` has no effect.

- When you add environment variables to a project, they are visible in the `execution_specifications` collection in MongoDB.

- After you upgrade to 4.6.4 or higher, if you launch a job from a Git-based project that uses a shared SSH key or personal access token, it results in a `Cannot access Git repository` error.

- In a Git-based project, `requirements.txt` must be in your project’s Artifacts folder in order to be picked up by an execution, instead of at the root level in your project files.

  Tip: To avoid falling out of sync with the Git repository, always modify `requirements.txt` in the repository first and then copy it to your project’s Artifacts folder.

- When you submit a large number of jobs, Domino processes the first batch of jobs as usual. However, if the total number of jobs is larger than the execution quota for the user or the hardware tier, the queued jobs are not promptly processed when capacity becomes available under the quota. This issue is resolved in Domino 5.2.2.

- After you migrate from 4.x, scheduled jobs will fail until the users that own them log out and log in again. This issue is resolved in Domino 5.3.0.

  Tip: You can also resolve this issue by removing all existing tokens in MongoDB, like this: `db.user_session_values.remove({"key": "jwt"})`

- Domino can become inaccessible after a fixed uptime period when running on Kubernetes 1.21 or higher. See this knowledge base article for the workaround. This issue is fixed in Domino 5.3.1.

- Upgrading to this release from Domino 3.x or 4.x can cause Git credentials to be omitted from the dropdown when creating a new Git-based project. A workaround is available from Domino’s Customer Success team.

- When you use drag-and-drop to upload a large number of files at once, the upload might fail. The application displays many empty dialogs. Use the Domino CLI to upload large numbers of files instead. Domino 5.4.0 eliminates the empty dialogs and shows helpful information.

- Model Monitoring data sources aren’t validated. If you enter an invalid bucket name and attempt to save, the entry is saved anyway. However, you won’t be able to see metrics for that entry because the name points to an invalid bucket.

- A limit overcommit error (OOMKilled) occurs frequently on the `prometheus-adapter` pod in Domino’s Integrated Model Monitoring. The current workaround is to increase the limits. This issue is resolved in Domino 5.5.0.

- If the Model Access Token Vault migration runs more than once, it wipes tokens from Mongo and Vault, and users need to regenerate their Model Access Tokens in the UI. This is fixed in Domino 5.4.0.

- "Other" Git service provider URLs not ending in ".git" fail to work when you attempt to create a project from a Git repo or add a Git repo to a project. This issue is fixed in Domino 5.5.0.

- Configuring prediction when setting up prediction capture for Model APIs causes predictions to not be captured. This issue is resolved in Domino 5.4.0.

- When restarting a Workspace through the Update Settings modal, External Data Volumes are not mounted in the new Workspace. Follow the steps to mount External Data Volumes. This issue is fixed in Domino 5.9.0.

- Viewing dataset files in an Azure-based Domino cluster may lock files, preventing them from being deleted or modified. Restarting Nucleus frontend pods will release the lock. This issue is fixed in Domino 5.11.1.