To make a node group available to Domino, add new Kubernetes worker nodes with a distinct `dominodatalab.com/node-pool` label. Then, reference the value of that label when you create new hardware tiers so that Domino assigns executions to those nodes.

The following example shows how to create a new node group in EKS with `eksctl` and expose it to the cluster autoscaler as a labeled Domino node pool.

- Create a `new-nodegroup.yaml` file like the one below, and configure it with the properties you want the new group to have. All values shown with a `$` are variables that you must modify.

  ```yaml
  apiVersion: eksctl.io/v1alpha5
  kind: ClusterConfig
  metadata:
    name: $CLUSTER_NAME
    region: $CLUSTER_REGION
  nodeGroups:
    - name: $GROUP_NAME # any name you choose; it becomes part of the ASG and template name
      instanceType: $AWS_INSTANCE_TYPE
      minSize: $DESIRED_MINIMUM_GROUP_SIZE
      maxSize: $DESIRED_MAXIMUM_GROUP_SIZE
      volumeSize: 400 # important to allow for image caching on Domino workers
      availabilityZones: ["$YOUR_CHOICE"] # same AZ (or same multiple AZs) as your other node pools
      ami: $AMI_ID
      labels:
        "dominodatalab.com/node-pool": "$NODE_POOL_NAME" # the name you'll reference from Domino
        # "nvidia.com/gpu": "true" # uncomment this line if this pool uses a GPU instance type
      tags:
        "k8s.io/cluster-autoscaler/node-template/label/dominodatalab.com/node-pool": "$NODE_POOL_NAME"
        # "k8s.io/cluster-autoscaler/node-template/label/nvidia.com/gpu": "true" # uncomment this line if this pool uses a GPU instance type
  ```

  The AWS tag with key `k8s.io/cluster-autoscaler/node-template/label/dominodatalab.com/node-pool` is important for exposing the group to your cluster autoscaler.

  You cannot have compute node pools in separate, isolated AZs, because this causes volume affinity errors.

- Once your configuration file describes the group you want to create, run `eksctl create nodegroup --config-file=new-nodegroup.yaml`.

- Add the names of the resulting Auto Scaling groups (ASGs) to the `autoscaling.groups` section of your `domino.yml` installer configuration.

- Run the Domino installer to update the autoscaler.

- Create a new hardware tier in Domino that references the new labels.

When finished, you can start Domino executions that use the new hardware tier. Those executions are assigned to nodes in the new group, which the cluster autoscaler scales as configured.
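Because the autoscaler only learns a group's labels from its tags, the `labels` and `tags` sections of the node group config must stay in sync. The sketch below is a hypothetical Python helper (`node_group_entry` and `missing_autoscaler_tags` are illustrative names, not part of eksctl or Domino) that builds one `nodeGroups` entry from a single node pool name, mirrors every label as a `k8s.io/cluster-autoscaler/node-template/label/<key>` tag, and checks for mismatches. The cluster name, Region, instance type, and pool name in the example are assumptions; replace them with your own values.

```python
import json

# Label that Domino hardware tiers reference (from the YAML example).
LABEL_KEY = "dominodatalab.com/node-pool"
# Tag-key prefix the cluster autoscaler reads to learn a group's node labels.
TAG_PREFIX = "k8s.io/cluster-autoscaler/node-template/label/"


def node_group_entry(group_name, instance_type, min_size, max_size,
                     zones, node_pool, ami, gpu=False):
    """Build one eksctl nodeGroups entry (hypothetical helper)."""
    labels = {LABEL_KEY: node_pool}
    if gpu:
        labels["nvidia.com/gpu"] = "true"
    # Mirror every node label as an autoscaler node-template tag.
    tags = {TAG_PREFIX + key: value for key, value in labels.items()}
    return {
        "name": group_name,
        "instanceType": instance_type,
        "minSize": min_size,
        "maxSize": max_size,
        "volumeSize": 400,  # room for image caching on Domino workers
        "availabilityZones": list(zones),
        "ami": ami,
        "labels": labels,
        "tags": tags,
    }


def missing_autoscaler_tags(entry):
    """Return label keys whose autoscaler tag is absent or has another value."""
    return [
        key for key, value in entry["labels"].items()
        if entry["tags"].get(TAG_PREFIX + key) != value
    ]


if __name__ == "__main__":
    # Illustrative values only; "$AMI_ID" is left as a placeholder.
    config = {
        "apiVersion": "eksctl.io/v1alpha5",
        "kind": "ClusterConfig",
        "metadata": {"name": "my-cluster", "region": "us-west-2"},
        "nodeGroups": [
            node_group_entry("gpu-workers", "p3.2xlarge", 0, 4,
                             ["us-west-2a"], "gpu-pool", "$AMI_ID", gpu=True),
        ],
    }
    assert missing_autoscaler_tags(config["nodeGroups"][0]) == []
    print(json.dumps(config, indent=2))
```

The output is JSON, which YAML parsers generally accept, but you may prefer to copy the values into `new-nodegroup.yaml` by hand or serialize with a YAML library.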

With spot instances, customers can use the cloud provider's unused capacity and get compute at a significantly discounted rate (typically 50-90% off on-demand prices). See the AWS Spot Instances page to learn more.
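As a rough illustration of what the 50-90% range means in practice, the sketch below computes an effective hourly spot price from an on-demand price and a fractional discount. The $1.00/hour on-demand price is a made-up example, not a real AWS rate; actual spot prices vary by instance type, Region, and time.

```python
def spot_hourly_price(on_demand_hourly, discount):
    """Effective spot price for a fractional discount (0.5 means 50% off)."""
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    return on_demand_hourly * (1 - discount)


if __name__ == "__main__":
    on_demand = 1.00  # hypothetical on-demand price in USD per hour
    for discount in (0.5, 0.9):
        price = spot_hourly_price(on_demand, discount)
        print(f"{discount:.0%} off: ${price:.2f}/hour")
```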

One disadvantage of spot instances is that AWS can reclaim them with only a 2-minute notice when demand for on-demand capacity rises. You can find the typical interruption frequency by instance type and Region on the AWS Spot Instance Advisor page.

However, because of the cost benefit, customers may still want to use spot instances for workloads such as Model APIs, Domino Apps, and short-duration Jobs.

Note: We recommend using on-demand instances for the default and platform node pools.

Follow these steps to use spot instances:

-

Add a new node pool with spot instances.

-

Select Spot instances.

-

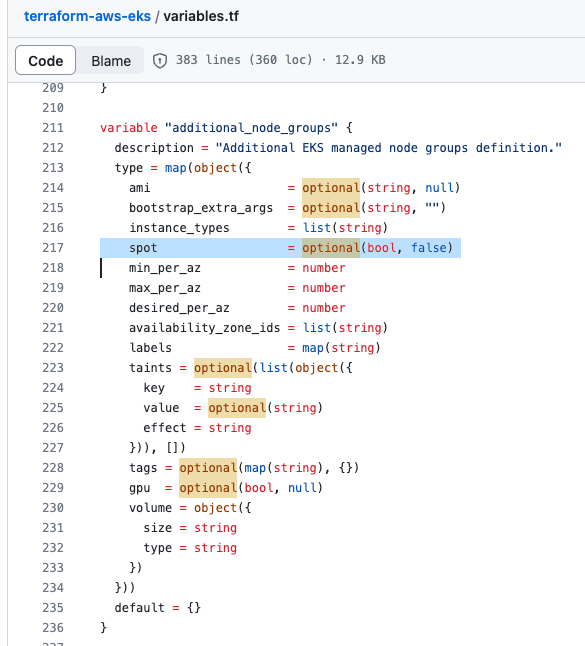

In the Terraform template, select spot = True.

-

-

Create a new hardware tier that will map the new node pool with spot instances. We recommend using 'Spot' to clearly differentiate between spot and On-demand hardware tiers.

Spot instance best practices

Be flexible in terms of instance types and Availability Zones

A spot instance pool consists of unused EC2 instances with the same instance type and Availability Zone. Requesting several instance types in several Availability Zones gives a node group more pools to draw from, so it is less likely that all of them run out of spare capacity at the same time.
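Since each (instance type, Availability Zone) pair is its own spot pool, the number of pools a group can use grows multiplicatively with the options you allow. The short sketch below counts them; the instance types and zone names are illustrative assumptions, not recommendations.

```python
from itertools import product


def spot_pools(instance_types, zones):
    """Each (instance type, AZ) pair is a separate spot capacity pool."""
    return list(product(instance_types, zones))


if __name__ == "__main__":
    narrow = spot_pools(["m5.2xlarge"], ["us-west-2a"])
    flexible = spot_pools(["m5.2xlarge", "m5a.2xlarge", "m4.2xlarge"],
                          ["us-west-2a", "us-west-2b"])
    print(len(narrow), len(flexible))
```

Weigh AZ flexibility against the EBS volume caveat at the end of this section, because a workload's volume stays in the AZ where it was created.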

Use the capacity-optimized allocation strategy

Allocation strategies in Auto Scaling groups help you deploy your target capacity without manually searching for spot instance pools with free capacity. The capacity-optimized strategy launches instances from the pools with the most available capacity, which reduces the likelihood of interruptions.

Use proactive capacity rebalancing

Capacity rebalancing helps maintain availability by launching a replacement spot instance when EC2 signals that a running spot instance is at elevated risk of interruption, before that instance receives the 2-minute notice. It complements the capacity-optimized allocation strategy and the mixed instances policy.
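The sketch below shows how these three practices map onto the keyword arguments of boto3's `update_auto_scaling_group`: several instance types as launch template overrides, `SpotAllocationStrategy` set to `capacity-optimized`, and `CapacityRebalance` enabled. It only builds the parameters and makes no AWS call; the helper name, ASG name, and launch template ID are hypothetical. If your node group is managed by eksctl or Terraform, prefer setting the equivalent options there, since direct changes can drift from that tool's state.

```python
import json


def spot_asg_update_params(asg_name, launch_template_id, instance_types):
    """Keyword arguments for boto3's update_auto_scaling_group (sketch)."""
    return {
        "AutoScalingGroupName": asg_name,
        # Launch a replacement when EC2 flags a spot instance as at risk.
        "CapacityRebalance": True,
        "MixedInstancesPolicy": {
            "LaunchTemplate": {
                "LaunchTemplateSpecification": {
                    "LaunchTemplateId": launch_template_id,
                    "Version": "$Latest",
                },
                # Several interchangeable instance types mean more spot pools.
                "Overrides": [{"InstanceType": t} for t in instance_types],
            },
            "InstancesDistribution": {
                "OnDemandBaseCapacity": 0,
                "OnDemandPercentageAboveBaseCapacity": 0,  # 100% spot
                "SpotAllocationStrategy": "capacity-optimized",
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical ASG name and launch template ID.
    params = spot_asg_update_params(
        "my-spot-asg", "lt-0123456789abcdef0", ["m5.2xlarge", "m5a.2xlarge"]
    )
    print(json.dumps(params, indent=2))
    # To apply (requires AWS credentials and the boto3 package):
    # import boto3
    # boto3.client("autoscaling").update_auto_scaling_group(**params)
```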

Even if the node pool is allowed to use spot instances in multiple Availability Zones (AZs), a workload whose spot instance is interrupted cannot come back up on a node in a different AZ, because its EBS volume is tied to the AZ where it was created. If you encounter this issue, use a hardware tier backed by a non-spot (on-demand) node pool.