Image: quay.io/domino/fleetcommand-agent:5.11.1.catalog-55ee23e

Installation bundles

Changes

-

Support for Domino 5.11.1.

Image: quay.io/domino/fleetcommand-agent:5.11.0.catalog-454f53d

Installation bundles

Changes

-

Support for Domino 5.11.0.

-

Split catalog releases into "core" and "extended".

-

Added the configured-releases command.

-

Added the following options to the config:

-

audit_trail

-

extended_catalog

-

fips

-

flyte

-

grafana_alerts

-

ingress_controller.allowed_cidrs

-

istio.permissive

-

monitoring.gpu_metrics

-

monitoring.newrelic.managed_controlplane

Image: quay.io/domino/fleetcommand-agent:5.10.1.catalog-b1ce370

Installation bundles

Changes

-

Support for Domino 5.10.1.

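Please note that starting with this release, the versioning has changed: fleetcommand-agent image tags now follow the Domino version and catalog commit (for example, 5.10.1.catalog-b1ce370) instead of the previous vNN scheme (for example, v66.3).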
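The Python sketch below tells the two tag formats on this page apart. The regular expressions are inferred from the tags listed here (for example, 5.11.1.catalog-55ee23e and v66.3), not from a published specification. parse_agent_tag is a hypothetical helper.

```python
import re
from typing import NamedTuple, Optional


class AgentTag(NamedTuple):
    scheme: str                    # "catalog" (new style) or "legacy" (vNN)
    domino_version: Optional[str]  # only encoded in new-style tags
    catalog_commit: Optional[str]  # short commit after ".catalog-"
    legacy_version: Optional[str]  # number after "v" in old-style tags


# Patterns inferred from the tags on this page (assumption, not a spec).
_CATALOG_TAG = re.compile(r"^(?P<domino>\d+\.\d+\.\d+)\.catalog-(?P<commit>[0-9a-f]+)$")
_LEGACY_TAG = re.compile(r"^v(?P<legacy>\d+(?:\.\d+)?)$")


def parse_agent_tag(image: str) -> AgentTag:
    """Classify a fleetcommand-agent image reference by its tag scheme."""
    tag = image.rsplit(":", 1)[-1]  # the tag follows the last colon
    match = _CATALOG_TAG.match(tag)
    if match:
        return AgentTag("catalog", match["domino"], match["commit"], None)
    match = _LEGACY_TAG.match(tag)
    if match:
        return AgentTag("legacy", None, None, match["legacy"])
    raise ValueError(f"unrecognized fleetcommand-agent tag: {tag!r}")


print(parse_agent_tag("quay.io/domino/fleetcommand-agent:5.10.1.catalog-b1ce370"))
print(parse_agent_tag("quay.io/domino/fleetcommand-agent:v66.3"))
```

Only new-style tags carry the Domino version directly. For legacy tags, the supported Domino version must be looked up in these notes.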

Image: quay.io/domino/fleetcommand-agent:5.10.0.catalog-0cbbe52

Installation bundles

Changes

-

Support for Domino 5.10.0.

Image: quay.io/domino/fleetcommand-agent:v66.3

Installation bundles

Changes

-

Support for Domino 5.9.3.

Image: quay.io/domino/fleetcommand-agent:v66.2

Installation bundles

Changes

-

Support for Domino 5.9.2.

Image: quay.io/domino/fleetcommand-agent:v66.1

Installation bundles

Changes

-

Support for Domino 5.9.1.

Image: quay.io/domino/fleetcommand-agent:v66

Installation bundles

Changes

-

Support for Domino 5.9.0.

-

Improvements to Prometheus scraping of Keycloak metrics data.

Known issues:

-

Redis has compatibility issues with Docker versions 20.10.9 and earlier. Please upgrade to 20.10.24 or higher if using Docker.
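Docker versions are dotted numbers, so comparing them as strings gives the wrong answer for 20.10.9 versus 20.10.24. The Python sketch below checks a version numerically against the recommended minimum. The helper names are hypothetical, and obtaining the version string (for example, from docker version) is left out.

```python
import re
from typing import Tuple

# Minimum Docker version recommended by the known issue above.
MIN_DOCKER_FOR_REDIS: Tuple[int, int, int] = (20, 10, 24)


def parse_docker_version(version: str) -> Tuple[int, int, int]:
    """Turn '20.10.24' (or '17.06.2-ce') into a comparable integer tuple."""
    core = re.split(r"[-+]", version.strip(), maxsplit=1)[0]
    major, minor, patch = (int(part) for part in core.split(".")[:3])
    return (major, minor, patch)


def docker_meets_redis_requirement(version: str) -> bool:
    # Compare numerically: as plain strings, "20.10.9" would sort after "20.10.24".
    return parse_docker_version(version) >= MIN_DOCKER_FOR_REDIS


for v in ("20.10.9", "20.10.24", "24.0.7"):
    print(v, docker_meets_redis_requirement(v))
```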

Image: quay.io/domino/fleetcommand-agent:v65

Installation bundles

Changes

-

Support for Domino 5.8.0.

-

The istiod release (Istio discovery) no longer supports the ENABLE_LEGACY_FSGROUP_INJECTION environment variable. It is recommended to remove the chart value override within the pilot.env mapping.

-

Updated DNS detection and reconciliation.
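If your installer configuration carries the ENABLE_LEGACY_FSGROUP_INJECTION override, it can be removed programmatically. The Python sketch below makes two assumptions: the configuration has already been loaded into a dictionary (for example, with a YAML parser), and the override sits under release_overrides.istiod.chart_values.pilot.env. That path and the helper name are assumptions based on the release name and mapping mentioned above.

```python
from typing import Any, Dict

LEGACY_VAR = "ENABLE_LEGACY_FSGROUP_INJECTION"
# Assumed location of the override in a parsed installer config.
OVERRIDE_PATH = ("release_overrides", "istiod", "chart_values", "pilot", "env")


def drop_legacy_fsgroup_override(config: Dict[str, Any]) -> bool:
    """Delete the legacy env override in place; return True if one was removed."""
    node: Any = config
    for key in OVERRIDE_PATH:
        if not isinstance(node, dict):
            return False  # path missing or null: nothing to remove
        node = node.get(key)
    if isinstance(node, dict) and LEGACY_VAR in node:
        del node[LEGACY_VAR]
        return True
    return False


config = {"release_overrides": {"istiod": {"chart_values": {"pilot": {"env": {LEGACY_VAR: "true"}}}}}}
print(drop_legacy_fsgroup_override(config), config)
```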

Image: quay.io/domino/fleetcommand-agent:v64.4

Installation bundles

Changes

-

Support for Domino 5.7.4.

Image: quay.io/domino/fleetcommand-agent:v64.3

Installation bundles

Changes

-

Support for Domino 5.7.3.

-

Bumps tf-aws-eks to 2.2.2.

Image: quay.io/domino/fleetcommand-agent:v64.2

Installation bundles

Changes

-

Support for Domino 5.7.2.

Image: quay.io/domino/fleetcommand-agent:v64.1

Installation bundles

Changes

-

Supports a fix for the role synchronization logic that caused a customer’s Domino role memberships to be overwritten or removed during SSO sign-in.

Image: quay.io/domino/fleetcommand-agent:v64

Installation bundles

Changes

-

Adds support in the MLflow proxy for tokens that contain OAuth scopes applicable to all entities.

Image: quay.io/domino/fleetcommand-agent:v63.2

Installation bundles

Changes

-

Support for Domino 5.6.2.

Image: quay.io/domino/fleetcommand-agent:v62.2

Installation bundles

Changes

-

Support for Domino 5.5.4.

Image: quay.io/domino/fleetcommand-agent:v63.1

Installation bundles

Changes

-

Continuity of executions maintained during upgrades.

-

Backup fixes for Azure; back up custom certificate configs.

Image: quay.io/domino/fleetcommand-agent:v62.1

Installation bundles

Changes

-

Support for Domino 5.5.3.

-

Supports configuring the NGINX chart's extraArgs parameter. The ports are configured by default.

Image: quay.io/domino/fleetcommand-agent:v63

Installation bundles

Changes

-

Support for Domino 5.6.0.

-

Support for OpenShift 4.12. The installer sets global permissions on cephfs.

-

Kubecost is accessible via a Kubernetes secret.

-

fleetcommand_agent run -s supports comma- and space-separated arguments.
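These notes don't say what -s selects or how fleetcommand_agent parses it. As a hypothetical illustration only, the sketch below shows one way a CLI can accept both separators, normalizing "a,b c" and "a, b" to the same list. split_selection is not part of fleetcommand-agent.

```python
import re
from typing import Iterable, List


def split_selection(values: Iterable[str]) -> List[str]:
    """Flatten values that may mix commas and whitespace, e.g. 'a,b c'."""
    items: List[str] = []
    for value in values:
        # Split on any run of commas and/or whitespace; drop empty fragments.
        items.extend(part for part in re.split(r"[,\s]+", value) if part)
    return items


print(split_selection(["alpha,beta gamma", "delta"]))
```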

Image: quay.io/domino/fleetcommand-agent:v62

Installation bundles

Changes

-

Support for Domino 5.5.2.

-

CDK updated for new S3 security defaults (disabled ACLs).

-

The fleetcommand-agent config lint command now works as expected, resolving a known issue in v60 and v61.

Image: quay.io/domino/fleetcommand-agent:v61

Installation bundles

Changes

-

Support for Domino 5.5.1.

Known Issues

-

The fleetcommand-agent config lint command does not work. This issue is resolved in v62.

Image: quay.io/domino/fleetcommand-agent:v60.1

Installation bundles

Changes

-

Updated references to 5.5.0 artifacts to incorporate critical patch.

-

Support for Domino 5.5.0.

-

Configuration schema is now 1.3.

-

The importer is now invoked as just importer rather than /app/importer, because the Docker image now installs it into /usr/local/bin.

Known Issues

-

The fleetcommand-agent config lint command does not work. This issue is resolved in v62.

Image: quay.io/domino/fleetcommand-agent:v60

Changes

-

Primary Mongo instance upgraded to 4.4. Note that Mongo can only be upgraded one version at a time (that is, Mongo 3.6 → 4.0 → 4.2 → 4.4). Ensure that the mongodb-replicaset chart release is using Mongo 4.2 before attempting an upgrade, or perform an intermediate upgrade to Domino 5.3.0 or higher, which uses Mongo 4.2.

-

This was the initial fleetcommand-agent release for Domino 5.5.0, but it has been superseded by v60.1.
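Because Mongo must move one version at a time, the required intermediate upgrades follow directly from the current version. The Python sketch below lists the hops using only the sequence given in these notes (3.6 → 4.0 → 4.2 → 4.4). Matching each hop to a Domino release is left to the notes themselves (for example, Domino 5.3.0 or higher for Mongo 4.2). mongo_upgrade_path is a hypothetical helper.

```python
from typing import List

# Mongo versions in the order given in these notes; each hop must be taken in turn.
MONGO_SEQUENCE = ["3.6", "4.0", "4.2", "4.4"]


def mongo_upgrade_path(current: str, target: str) -> List[str]:
    """Return the versions to step through, one at a time, from current to target."""
    start = MONGO_SEQUENCE.index(current)  # ValueError for versions not listed
    end = MONGO_SEQUENCE.index(target)
    if end < start:
        raise ValueError("Mongo downgrades are not covered by this sketch")
    return MONGO_SEQUENCE[start + 1 : end + 1]


print(mongo_upgrade_path("3.6", "4.4"))
```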

Known Issues

-

The fleetcommand-agent config lint command does not work. This issue is resolved in v62.

Image: quay.io/domino/fleetcommand-agent:v59.2

Installation bundles

Changes

-

Support for Domino 5.4.1.

-

Update installation config email validation to support upgrades of older Domino versions.

-

Fixed the Mongo upgrade version check, which was incorrect for Domino 5.4.1.

Image: quay.io/domino/fleetcommand-agent:v59.1

Installation bundles

Changes

-

Minor updates to catalog images.

Known Issues

-

This version contains an incorrectly configured check for the Mongo version. It’s checking for Mongo 4.4 when it should be checking for Mongo 4.2. Please use fleetcommand-agent v59.2 or later.

Image: quay.io/domino/fleetcommand-agent:v59

Installation bundles

Changes

-

Support for Domino 5.4.0.

-

Shoreline agent can be installed for incident monitoring.

-

Compatibility with Kubernetes’s deprecation of PodSecurityPolicy.

-

Some functions of nucleus-dispatcher are split out into their own pods: nucleus-train and nucleus-workspace-volume-snapshot-cleaner.

-

Removed legacy Azure File support; only the current CSI driver is used.

-

HSTS headers are now always enabled when using SSL. Previously, this relied on nginx-ingress auto-detection, which could fail with certain load balancer configurations.

-

Support for cephfs and ceph-rdb storage types.

-

Projects blob store can use Azure Containers.

-

Support to upgrade from old 4.x releases using MongoDB 3.4.

Known Issues

-

This version contains an incorrectly configured check for the Mongo version. It’s checking for Mongo 4.4 when it should be checking for Mongo 4.2. The check can be bypassed with the --warn-only flag. Please use fleetcommand-agent v59.2 or later.

Image: quay.io/domino/fleetcommand-agent:v58.1

Installation bundles

Changes

-

Support for Domino 5.3.2.

-

Migrating S3 configuration from a 1.0 config will preserve the sse_kms_key_id value.

-

When generating a configuration for AWS, the Metrics Server is enabled by default.

Image: quay.io/domino/fleetcommand-agent:v58

Installation bundles

Changes

-

Support for Domino 5.3.1.

-

Vault is properly configured for service account token renewal when upgrading from Domino 5.3.0 and earlier.

-

AWS/CDK provisioning updates now work with a large number of node groups.

-

Image overrides now use the registry specified in the override, rather than a globally configured image registry.

-

NVIDIA charts are now installed into the platform namespace rather than kube-system.

Image: quay.io/domino/fleetcommand-agent:v57.1

Installation bundles

Changes

These changes resolve issues in the v57 installer:

-

Data sources are shown in the Domino application after an upgrade to 5.3.0.

-

Domino stores execution logs in the correct locations.

-

Domino runs the --dry option during the post-helmfile portion of the installation process.

Image: quay.io/domino/fleetcommand-agent:v57

Installation bundles

Changes

Major release adding support for Domino 5.3.0, better install configuration validation and upgrades, and a new install engine built on existing open-source tools. See Installation Changes for all process changes.

New installer configurations

Many fields have been modified or moved from installer v56 to v57. Most fields will be properly handled by the automated schema updates during upgrades done with v57. Take note of the added or modified fields described below.

Release, chart, and image overrides

The existing services and system_images fields have been replaced by three new fields:

-

release_overrides, which overrides chart-specific values based on their Helm release name. For example, to override nginx-ingress values:

    release_overrides:
      nginx-ingress:
        chart_values:
          myCustom: value

-

chart_overrides, which allows chart-version overrides based on the Helm chart name. For example, to override the nginx-ingress chart version:

    chart_overrides:
      nginx-ingress:
        version: "1.2.3"

-

image_overrides, which allows image overrides. For example, to override the nginx-ingress image tag:

    image_overrides:
      nginx_ingress: "quay.io/domino/ingress-nginx.controller:v1.2.3"

Whether or not individual services are installed can now be managed in release_overrides:

    release_overrides:
      my-release:
        installed: false

Image building cloud authentication and builder resources

Image building cloud authentication and builder resources can now be modified as part of the official configuration:

    image_building:
      cloud_registry_auth:
        # Add Azure credentials required to access ACR
        azure:
          tenant_id: <tenant-id>
          client_id: <client-id>
          client_secret: <client-secret>
        # Reference the GCP service account that has rights to GCR
        gcp:
          service_account: "<cluster-name>-gcr@<project-name>.iam.gserviceaccount.com"
      builder_resources:
        # Default configuration
        requests:
          cpu: "4"
          memory: "15Gi"
        # Add limits if/when they make sense, but be careful not to over-throttle or OOMKill the build pods
        limits:
          cpu: "4"
          memory: "15Gi"

Please remove any Helm release overrides that were previously used in Domino 5.2.x and earlier, as chart values are subject to change.

SMB CSI driver disabled by default

Due to security concerns with the increased permissions required by CSI drivers, the SMB driver has been made opt-in for Domino 5.3.0. Enable it with the following configuration:

    release_overrides:
      csi-driver-smb:
        installed: true

-

Primary Mongo instance upgraded to 4.2. Note that Mongo can only be upgraded one version at a time (that is, Mongo 3.6 → 4.0 → 4.2). Ensure that the mongodb-replicaset chart release is using Mongo 4.0 before attempting an upgrade, or perform an intermediate upgrade to Domino 4.4.2 or higher, which uses Mongo 4.0.

Image: quay.io/domino/fleetcommand-agent:v56.2

Installation bundles

Changes

This release fixes an issue in the v56.1 installer that prevented Domino from using all the Docker registries when multiple registries were configured with domino-quay-repos during the install process.

Image: quay.io/domino/fleetcommand-agent:v56.1

Installation bundles

Changes

-

Update image references for 5.2.2.

-

Updated Domino CDK version to v0.0.5 to fix deployment IAM permissions.

-

Security: OS package updates in deployment images.

Image: quay.io/domino/fleetcommand-agent:v56

Installation bundles

Changes

-

Update ImageBuilder v3 to better handle offline or custom registry installation scenarios.

-

Update image references for 5.2.1.

Image: quay.io/domino/fleetcommand-agent:v55

Installation bundles

Changes

-

Added support for Domino 5.2.0.

-

Added the access-manager service.

-

Updated the default Helm version to v3.8.0. Older versions are still supported.

-

Dispatcher and Frontend pod resources, requests, and limits can now be set separately.

New installer configuration fields

The fleetcommand-agent installer configuration has new fields that support Hephaestus, the new image builder that replaces Forge. Use these keys to configure the new image builder:

    image_building:
      verbose: false
      rootless: false
      concurrency: 10
      cache_storage_size: 500Gi
      cache_storage_retention: 375000000000
      builder_node_selector: {}

Note: rootless must be set to false.

Image: quay.io/domino/fleetcommand-agent:v54.1

Installation bundles

Changes

-

Added support for Domino 5.1.4.

Image: quay.io/domino/fleetcommand-agent:v54

Installation bundles

Changes

-

Added support for Domino 5.1.3.

Handle invalid environment revisions

In recent versions of Domino, Mongo records can have a Docker image or a base environment revision, but not both. In older deployments, both items could coexist, and after these deployments were upgraded to later Domino versions, the affected records could not be deserialized. This fix uses both if they are present.

Image: quay.io/domino/fleetcommand-agent:v53

Installation bundles

Changes

-

Added support for Domino 5.1.2.

Image: quay.io/domino/fleetcommand-agent:v52

Installation bundles

Changes

-

Added support for Domino 5.1.1.

Image: quay.io/domino/fleetcommand-agent:v51

Installation bundles

Changes

-

Added support for Domino 5.1.0.

-

Added support for custom certificate management.

Internal Registry allowed CIDRs

To allow multiple, external whitelisted CIDR ranges access to the internal container image registry, a new field has been added to augment the existing pod_cidr configurable:

    internal_docker_registry:
      allowed_cidrs:
        - 1.2.3.4/32
        - 5.6.7.8/24

By default, the allowed CIDRs will be 0.0.0.0/0, which means that all network access will be whitelisted. However, the internal container registry is still secured by both TLS and basic authentication. Limiting the network access is recommended, but not required.
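To see which clients a given allowed_cidrs list admits, the Python sketch below applies ordinary CIDR matching with the standard ipaddress module. It only illustrates the arithmetic; these notes don't describe how the registry actually enforces the list. An entry like 5.6.7.8/24 has host bits set, so ipaddress accepts it only with strict=False, which treats it as 5.6.7.0/24.

```python
import ipaddress
from typing import Iterable

DEFAULT_ALLOWED_CIDRS = ["0.0.0.0/0"]  # the default described above: everything allowed


def is_allowed(client_ip: str, allowed_cidrs: Iterable[str]) -> bool:
    """Return True if client_ip falls inside any of the allowed CIDR ranges."""
    address = ipaddress.ip_address(client_ip)
    for cidr in allowed_cidrs:
        # strict=False accepts entries such as 5.6.7.8/24 whose host bits are set.
        if address in ipaddress.ip_network(cidr, strict=False):
            return True
    return False


allowed = ["1.2.3.4/32", "5.6.7.8/24"]
print(is_allowed("5.6.7.200", allowed), is_allowed("9.9.9.9", allowed))
```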

Pod CIDR, network policy handling changes

Since it was added in the initial 4.x versions of Domino, the pod_cidr configurable field has served to limit network access for both the internal container image registry and the main application ingress. In Domino 5.1.0, we’ve worked to limit the need for this field to be configured in the vast majority of situations.

Specifically, if ingress is handled by the Domino nginx-ingress-controller and it is configured to be deployed without the hostNetwork option, then the pod_cidr field no longer needs to be configured to allow the correct network access for application pods (such as nucleus or web-ui).

If, however, nginx-ingress-controller is still deployed using the hostNetwork option, then the pod_cidr configurable must be set correctly to allow network traffic to flow without limitation.

The new internal container registry allowed_cidrs configurable described previously can be used to allow network access to the registry independent of the pod_cidr option.

In short, the following configuration is valid:

    pod_cidr: ""
    services:
      nginx_ingress:
        chart_values:
          controller:
            kind: Deployment
            hostNetwork: false

The following is invalid:

    pod_cidr: ""
    services:
      nginx_ingress:
        chart_values:
          controller:
            hostNetwork: true

If unset, hostNetwork still defaults to true for backward compatibility reasons.
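The rule above reduces to one check: an empty pod_cidr is acceptable only when hostNetwork is explicitly false. The Python sketch below runs that check over a configuration already parsed into a dictionary. pod_cidr_error and _dig are hypothetical helpers, not the installer's own validation.

```python
from typing import Any, Dict, Optional


def _dig(node: Any, *keys: str) -> Any:
    """Follow nested dictionary keys, returning None if any level is missing."""
    for key in keys:
        if not isinstance(node, dict):
            return None
        node = node.get(key)
    return node


def pod_cidr_error(config: Dict[str, Any]) -> Optional[str]:
    """Return an error message if the pod_cidr/hostNetwork combination is invalid."""
    host_network = _dig(
        config, "services", "nginx_ingress", "chart_values", "controller", "hostNetwork"
    )
    if host_network is None:
        host_network = True  # unset hostNetwork still defaults to true
    if host_network and not config.get("pod_cidr"):
        return "pod_cidr must be set when nginx-ingress uses hostNetwork"
    return None
```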

Known Issues

Istio upgrade handling has been revamped to ensure that all pods in the cluster will be correctly recreated. Domino 5.1.0 includes a minor change to Istio configuration (placement of the control plane pod is now in the platform pool) which will recreate all Domino pods to ensure they pick up the correct configuration.

Installation bundles

Changes

-

Added support for Domino 4.6.4.

Changes

-

Added support for Domino 4.6.0.

-

Added support for containerd runtimes in Azure AKS and Google GKE.

-

Added support for Azure ACR image registries.

-

Added support for configuring shared volume capacity (defaulting to 5Ti).

-

Added support for encrypted Spark through distributed-compute-operator.

-

Update installation bundle location and include monitoring and operational tooling.

Image: quay.io/domino/fleetcommand-agent:v45

Installation bundles

Image: quay.io/domino/fleetcommand-agent:v43

Installation bundles

Changes

-

Added support for Domino 4.5.1.

-

Previously, install timeouts for many charts were hardcoded to 5 minutes and not configurable. Some, like DaemonSets or charts with particularly long bootstrap times, had longer hardcoded timeouts. Now, individual chart timeouts can be controlled in the service stanza when using the "full" configuration:

    services:
      nginx_ingress:
        version: 1.30.0-0.5.0
        install_timeout: 900  # seconds
        chart_values: {}

In addition, the daemonset_timeout option has been removed:

    helm:
      daemonset_timeout: 0  # no longer used

Image: quay.io/domino/fleetcommand-agent:v41

Installation bundles

Changes

-

Added support for Domino 4.5.0.

-

There is a new configuration option in the agent YAML. By default, traffic.sidecar.istio.io/excludeInboundPorts annotations are added to the NGINX ingress controller to exclude enforcement of mTLS for traffic that is routed from the load balancer backend. When mTLS is required for NGINX, such as when the ingress controller is behind an Istio gateway, the nginx_annotations setting can be changed to false. This setting only applies when Istio is enabled.

    istio:
      nginx_annotations: true

Image: quay.io/domino/fleetcommand-agent:v39

Installation bundles

Changes

-

Updates workspace configs to fix Spark UI access issues with managed spark clusters.

-

Release is otherwise identical to v38.

Image: quay.io/domino/fleetcommand-agent:v38

Installation bundles

Changes

-

Adds support for Domino 4.4.2.

-

Included in Domino 4.4.2 is opt-in support for FUSE volume usage in the compute grid. This feature currently requires configuration changes at installation or upgrade time due to the potential security implications. To enable FUSE volume support for compute grid executions, set the following:

    services:
      nucleus:
        chart_values:
          enableFuseVolumeSupport: true

-

A new configuration has been added in preparation for future feature development. These fields are a required part of the v38 schema but currently have no effect.

    certificate_management:
      enabled: true

Image: quay.io/domino/fleetcommand-agent:v37

Installation bundles

Changes

-

Adds support for Domino 4.4.1.

-

The teleport section of the agent configuration has been deprecated in favor of the new teleport_kube_agent section.

Image: quay.io/domino/fleetcommand-agent:v34

Installation bundles

Changes

-

Adds support for Domino 4.4.0.

Image: quay.io/domino/fleetcommand-agent:v33

Installation bundles

Changes

-

Adds support for Domino 4.4.0.

-

New configuration options have been added for the new Teleport Kubernetes agent:

If a deployment currently has teleport.enabled and teleport.remote_access set to true, they must be disabled and teleport_kube_agent.enabled must be set instead.

    teleport_kube_agent:
      enabled: false
      proxyAddr: teleport.domino.tech:443
      authToken: eeceeV4sohh8eew0Oa1aexoTahm3Eiha

-

Domino 4.4.0 includes support for restartable workspace disaster recovery in AWS leveraging EBS snapshots. To support this functionality, existing installations might require additional IAM permissions for platform node pool instances.

The permissions required, without any resource restriction (that is, *), are the following:

    ec2:CreateSnapshot
    ec2:CreateTags
    ec2:DeleteSnapshot
    ec2:DeleteTags
    ec2:DescribeAvailabilityZones
    ec2:DescribeSnapshots
    ec2:DescribeTags
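Expressed as a standard IAM policy document, the permissions above look like the following. This Python sketch only builds the JSON. Attaching it to the role used by the platform node pool instances (through the console, CLI, or infrastructure code) is outside the scope of these notes.

```python
import json

# Actions listed above for EBS-snapshot-based workspace recovery.
SNAPSHOT_ACTIONS = [
    "ec2:CreateSnapshot",
    "ec2:CreateTags",
    "ec2:DeleteSnapshot",
    "ec2:DeleteTags",
    "ec2:DescribeAvailabilityZones",
    "ec2:DescribeSnapshots",
    "ec2:DescribeTags",
]


def snapshot_policy() -> dict:
    """Build an IAM policy document granting the actions on all resources ("*")."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": SNAPSHOT_ACTIONS, "Resource": "*"}
        ],
    }


print(json.dumps(snapshot_policy(), indent=2))
```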

Known Issues

-

If upgrading from Helm 2 to Helm 3, please read the release notes from v22 for caveats and known issues.

Image: quay.io/domino/fleetcommand-agent:v32

Installation bundles

Changes:

-

Fixes a memory leak in the EFS CSI driver.

Image: quay.io/domino/fleetcommand-agent:v31

Installation bundles

Changes:

-

Updates to the latest build of 4.3.3.

Image: quay.io/domino/fleetcommand-agent:v30

Installation bundles

Changes:

-

Adds support for Domino 4.3.3.

-

The agent now supports installing Istio 1.7 (set istio.install to true) and installing Domino in Istio-compatible mode (set istio.enabled to true).

    istio:
      enabled: false
      install: false
      cni: true
      namespace: istio-system

-

The EFS storage provider for new installs has changed from efs-provisioner to the EFS CSI driver, to support encryption in transit to EFS. For existing installs, this does not require any changes unless encryption in transit is desired. If a migration to encrypted EFS is necessary, please contact Domino support.

One limitation of the new driver, compared to the previous one, is that it cannot dynamically create directories for provisioned volumes. In AWS, pre-provisioned directories are supported through access points, which must be created before Domino can be installed.

To specify the access point at install time, ensure that filesystem_id is set in the format {EFS ID}::{AP ID}:

    storage_classes:
      shared:
        efs:
          filesystem_id: 'fs-285b532d::fsap-00cb72ba8ca35a121'

-

Two new fields were added in order to simplify DaemonSet management during upgrades for particularly large clusters. DaemonSets do not have configuration options for upgrades and pods will be replaced one-by-one. For large compute node pools, this can take a significant amount of time.

    helm:
      skip_daemonset_validation: false
      daemonset_timeout: 300

Setting helm.skip_daemonset_validation to true will bypass post-upgrade validation that all pods have been successfully recreated. helm.daemonset_timeout is an integer representing the number of seconds to wait for all daemon pods in a DaemonSet to be recreated.

-

Domino 4.3.3 introduces limited availability of Forge, the new containerized Domino image builder. Forge can be enabled with the ImageBuilderV2 feature flag, although Domino services must be restarted for this flag to take effect. Running Domino image builds in a cluster that uses a non-Docker container runtime, such as cri-o or containerd, requires that the feature flag be enabled.

To support the default rootless mode that Forge is configured to use, the worker nodes must support unprivileged mounts, user namespaces, and overlayfs (either natively or through FUSE). Currently, GKE and EKS do not support user namespace remapping and require the following extra configuration to properly use Forge:

    services:
      forge:
        chart_values:
          config:
            fullPrivilege: true
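You can check the combined EFS filesystem_id value from the access point item above before it goes into the configuration. The Python sketch below splits and rebuilds the {EFS ID}::{AP ID} format. The fs- and fsap- prefixes are the standard AWS identifier prefixes; the helper names are hypothetical.

```python
from typing import NamedTuple, Optional


class EfsTarget(NamedTuple):
    filesystem_id: str
    access_point_id: Optional[str]


def parse_efs_filesystem_id(value: str) -> EfsTarget:
    """Split '{EFS ID}::{AP ID}' into its parts; the access point part is optional."""
    fs_id, separator, ap_id = value.partition("::")
    if not fs_id.startswith("fs-"):
        raise ValueError(f"expected an EFS file system ID (fs-...), got {fs_id!r}")
    if separator and not ap_id.startswith("fsap-"):
        raise ValueError(f"expected an access point ID (fsap-...), got {ap_id!r}")
    return EfsTarget(fs_id, ap_id or None)


def format_efs_filesystem_id(fs_id: str, ap_id: str) -> str:
    """Build the combined value for storage_classes.shared.efs.filesystem_id."""
    return f"{fs_id}::{ap_id}"


print(parse_efs_filesystem_id("fs-285b532d::fsap-00cb72ba8ca35a121"))
```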

Image: quay.io/domino/fleetcommand-agent:v29

Installation bundles

Changes:

-

Updated Keycloak migration job version.

Image: quay.io/domino/fleetcommand-agent:v28

Installation bundles

Changes:

-

Adds support for Domino 4.3.2.

-

Adds support for encrypted EFS access by using the EFS CSI driver.

-

A new istio field has been added to the domino.yml schema for testing and development of future releases. Domino 4.3.2 does not support Istio, so you must set enabled in this new section to false.

    istio:
      enabled: false
      install: false
      cni: true
      namespace: istio-system

-

New fields to specify static AWS access key and secret key credentials have been added. These are currently unused and can be left unset.

    blob_storage:
      projects:
        s3:
          access_key_id: ''
          secret_access_key: ''

-

A new field for Teleport remote access integration has been added. This is currently unused and must be set to false.

    teleport:
      remote_access: false

Image: quay.io/domino/fleetcommand-agent:v27

Installation bundles

Changes:

-

Fixes a bug where a dry-run installation could cause internal credentials to be improperly rotated.

Image: quay.io/domino/fleetcommand-agent:v26

Installation bundles

Changes:

-

Adds support for Domino 4.3.1.

-

Adds support for running Domino on OpenShift 4.4+.

-

A new field has been added to the installer configuration that controls whether or not the image caching service is deployed.

    image_caching:
      enabled: true

-

A new field has been added to the installer configuration that specifies the Kubernetes distribution for resource compatibility. The available options are cncf (Cloud Native Computing Foundation) and openshift.

    kubernetes_distribution: cncf

Image: quay.io/domino/fleetcommand-agent:v25

Installation bundles

Changes:

-

Adds support for Domino 4.3.0.

-

A new cache_path field has been added to the helm configuration section. Leaving this field blank will ensure charts are fetched from an upstream repository.

    helm:
      cache_path: ''

-

To facilitate the deployment of Domino into clusters with other tenants, a new global node selector field has been added to the top-level configuration that allows an arbitrary label to be used for scheduling all Domino workloads. Its primary purpose is to limit workloads such as DaemonSets that would be scheduled on all available nodes in the cluster to only nodes with the provided label. This can override default node pool selectors such as dominodatalab.com/node-pool: "platform", but does not replace them.

    global_node_selectors:
      domino-owned: "true"

-

To facilitate deployment of Domino into clusters with other tenants, a configurable Ingress class has been added to allow differentiation from other ingress providers in a cluster. If multiple Ingress objects are created with the default class, it’s possible for other tenants' paths to interfere with Domino and vice versa. Generally, this setting does not have to change, but it can be set to any arbitrary string value (such as domino).

    ingress_controller:
      class_name: nginx

Image: quay.io/domino/fleetcommand-agent:v24

Installation bundles

Changes:

-

Adds support for Domino 4.2.3 and 4.2.4.

Image: quay.io/domino/fleetcommand-agent:v23

Installation bundles

Changes:

-

Adds support for Domino 4.2.2.

-

The known issue with v22 around Domino Apps being stopped after an upgrade has been resolved. Apps will now automatically restart after an upgrade.

-

The known issue with Elasticsearch not upgrading until manually restarted has been resolved. Elasticsearch will automatically cycle through a rolling upgrade when the deployment is upgraded.

-

Fixed an issue that prevented the fleetcommand-agent.

-

Adds support for autodiscovery of scaling resources by the cluster autoscaler.

Two new fields have been added under the autoscaler.auto_discovery key:

    autoscaler:
      auto_discovery:
        cluster_name: domino
        tags: []  # optional. if filled in, cluster_name is ignored.

By default, if neither autoscaler.groups nor autoscaler.auto_discovery.tags is specified, the cluster_name will be used to look for the following AWS tags:

    k8s.io/cluster-autoscaler/enabled
    k8s.io/cluster-autoscaler/{{ cluster_name }}

The tags parameter can be used to explicitly specify which resource tags the autoscaler service must look for. Auto scaling groups with matching tags will have their scaling properties detected, and the autoscaler will be configured to scale them. The IAM role for the platform nodes where the autoscaler is running still needs an autoscaling access policy that will allow it to read and scale the groups.

When upgrading from an install that uses specific groups, ensure that auto_discovery.cluster_name is an empty value.
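The Python sketch below summarizes the precedence between tags and cluster_name. discovery_tags is a hypothetical helper that ignores autoscaler.groups. auto_discovery_flag renders the result in the --node-group-auto-discovery=asg:tag=... form accepted by the upstream cluster-autoscaler's AWS provider. Whether Domino's chart passes the tags exactly this way is an assumption.

```python
from typing import List, Optional

DEFAULT_TAG_PREFIX = "k8s.io/cluster-autoscaler"


def discovery_tags(cluster_name: str, tags: Optional[List[str]] = None) -> List[str]:
    """Tags the autoscaler looks for, following the precedence described above."""
    if tags:
        return list(tags)  # explicit tags win; cluster_name is ignored
    if not cluster_name:
        return []  # assumption: nothing to auto-discover by
    return [f"{DEFAULT_TAG_PREFIX}/enabled", f"{DEFAULT_TAG_PREFIX}/{cluster_name}"]


def auto_discovery_flag(tags: List[str]) -> str:
    """Render tags in upstream cluster-autoscaler's AWS auto-discovery flag syntax."""
    return "--node-group-auto-discovery=asg:tag=" + ",".join(tags)


print(auto_discovery_flag(discovery_tags("domino")))
```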


Known Issues:

-

If you’re upgrading from fleetcommand-agent v21 or older, be sure to read the v22 release notes and implement the Helm configuration changes.

-

Because of an incompatibility in how nginx-ingress was initially installed, which must be maintained going forward, action is required for both Helm 2 and Helm 3 upgrades.

For Helm 2 upgrades, add the following services object to your domino.yml to ensure compatibility:

    services:
      nginx_ingress:
        chart_values:
          controller:
            metrics:
              service:
                clusterIP: "-"
            service:
              clusterIP: "-"

For Helm 3, there are two options. If nginx-ingress has not been configured to provide a cloud-native load balancer that is tied to the hosting DNS entry, then nginx-ingress can be safely uninstalled prior to the upgrade. If, however, the load balancer address must be maintained across the upgrade, then the initial upgrade after the Helm 3 migration will fail. Before retrying the upgrade, execute the following commands:

    export NAME=nginx-ingress
    export SECRET=$(kubectl get secret -l owner=helm,status=deployed,name=$NAME -n domino-platform | awk '{print $1}' | grep -v NAME)
    # The outer sed uses | as its delimiter because the base64 payload can contain /
    kubectl get secret -n domino-platform $SECRET -oyaml | sed "s|release:.*|release: $(kubectl get secret -n domino-platform $SECRET -ogo-template="{{ .data.release | base64decode | base64decode }}" | gzip -d - | sed 's/clusterIP: ""//g' | gzip | base64 -w0 | base64 -w0)|" | kubectl replace -f -
    # The inner sed uses # as its delimiter because the API group names contain /
    kubectl get secret -n domino-platform $SECRET -oyaml | sed "s|release:.*|release: $(kubectl get secret -n domino-platform $SECRET -ogo-template="{{ .data.release | base64decode | base64decode }}" | gzip -d - | sed 's#rbac.authorization.k8s.io/v1beta1#rbac.authorization.k8s.io/v1#g' | gzip | base64 -w0 | base64 -w0)|" | kubectl replace -f -
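The value stored under data.release is base64-encoded twice (once by Helm, once by the Kubernetes Secret) around a gzip-compressed JSON release record. That is why the commands above decode twice and then gunzip. The Python sketch below performs the same transformations on a made-up record. It does not talk to a cluster, and the record's fields are illustrative only.

```python
import base64
import gzip
import json
from typing import Any, Dict


def decode_release(data_release: str) -> Dict[str, Any]:
    """Undo both base64 layers and the gzip layer of a Helm 3 release Secret value."""
    compressed = base64.b64decode(base64.b64decode(data_release))
    return json.loads(gzip.decompress(compressed))


def encode_release(release: Dict[str, Any]) -> str:
    """Re-apply gzip and both base64 layers, mirroring 'gzip | base64 -w0 | base64 -w0'."""
    compressed = gzip.compress(json.dumps(release).encode("utf-8"))
    return base64.b64encode(base64.b64encode(compressed)).decode("ascii")


def rewrite_manifest(release: Dict[str, Any], old: str, new: str) -> Dict[str, Any]:
    """Literal (non-regex) replacement in the rendered manifest, like the second sed."""
    updated = dict(release)
    updated["manifest"] = release.get("manifest", "").replace(old, new)
    return updated


# Round trip on a tiny, made-up release record.
sample = {"name": "nginx-ingress", "manifest": "apiVersion: rbac.authorization.k8s.io/v1beta1"}
fixed = rewrite_manifest(decode_release(encode_release(sample)),
                         "rbac.authorization.k8s.io/v1beta1", "rbac.authorization.k8s.io/v1")
print(fixed["manifest"])
```

A literal str.replace avoids the sed pitfalls of unescaped dots and slash delimiters.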

Image: quay.io/domino/fleetcommand-agent:v22

Installation bundles

Changes:

-

Adds support for Domino 4.2.

-

Adds support for Helm 3.

The helm object in the installer configuration has been restructured to accommodate Helm 3 support. There is now a helm.version property, which can be set to 2 or 3. When using Helm 2, the configuration must be similar to the example below. The username and password will continue to be standard Quay.io credentials provided by Domino.

    helm:
      version: 2
      host: quay.io
      namespace: domino
      prefix: helm-  # Prefix for the chart repository, defaults to `helm-`
      username: "<username>"
      password: "<password>"
      tiller_image: gcr.io/kubernetes-helm/tiller:v2.16.1  # Version is required and MUST be 2.16.1
      insecure: false

Helm 3 is a major release of the underlying tool that powers the installation of Domino’s services. Helm 3 removes the Tiller service, which was the server-side component of Helm 2. This improves the security posture of Domino installation by reducing the scope and complexity of the required RBAC permissions, and it enables namespace isolation of services. Additionally, Helm 3 adds support for storing charts in OCI registries.

Currently, only gcr.io and mirrors.domino.tech are supported as chart repositories. If you are switching to Helm 3, you must contact Domino for gcr.io credentials. When using Helm 3, the helm configuration object should be similar to the example below.

    helm:
      version: 3
      host: gcr.io
      namespace: domino-eng-service-artifacts
      insecure: false
      username: _json_key  # To support GCR authentication, this must be "_json_key"
      password: "<password>"
      tiller_image: null  # Not required for Helm 3
      prefix: ''  # Charts are stored without a prefix by default

Migration of an existing Helm 2 installation to Helm 3 is done seamlessly within the installer. Once the migration succeeds, Tiller is removed from the cluster and all Helm 2 configurations are deleted.

Known Issues:

-

Elasticsearch is currently configured to upgrade only when the pods are deleted. To properly upgrade an existing deployment from Elasticsearch 6.5 to 6.8, use the rolling upgrade process after running the installer. This involves first deleting the elasticsearch-data pods, then the elasticsearch-master pods. See the example procedure below.

    kubectl delete pods --namespace domino-platform elasticsearch-data-0 elasticsearch-data-1 --force=true --grace-period=0
    # Wait for elasticsearch-data-0 & elasticsearch-data-1 to come back online
    kubectl delete pods --namespace domino-platform elasticsearch-master-0 elasticsearch-master-1 elasticsearch-master-2

-

Because of an incompatibility in how nginx-ingress was initially installed, which should be maintained going forward, action is required for both Helm 2 and Helm 3 upgrades.

For Helm 2 upgrades, add the following services object to your domino.yml to ensure compatibility:

    services:
      nginx_ingress:
        chart_values:
          controller:
            metrics:
              service:
                clusterIP: "-"
            service:
              clusterIP: "-"

For Helm 3, there are two options. If nginx-ingress has not been configured to provide a cloud-native load balancer that is tied to the hosting DNS entry, then nginx-ingress can be safely uninstalled prior to the upgrade. If, however, the load balancer address must be maintained across the upgrade, then the initial upgrade after the Helm 3 migration will fail. Before retrying the upgrade, execute the following commands:

    export NAME=nginx-ingress
    export SECRET=$(kubectl get secret -l owner=helm,status=deployed,name=$NAME -n domino-platform | awk '{print $1}' | grep -v NAME)
    # The outer sed uses | as its delimiter because the base64 payload can contain /
    kubectl get secret -n domino-platform $SECRET -oyaml | sed "s|release:.*|release: $(kubectl get secret -n domino-platform $SECRET -ogo-template="{{ .data.release | base64decode | base64decode }}" | gzip -d - | sed 's/clusterIP: ""//g' | gzip | base64 -w0 | base64 -w0)|" | kubectl replace -f -
    # The inner sed uses # as its delimiter because the API group names contain /
    kubectl get secret -n domino-platform $SECRET -oyaml | sed "s|release:.*|release: $(kubectl get secret -n domino-platform $SECRET -ogo-template="{{ .data.release | base64decode | base64decode }}" | gzip -d - | sed 's#rbac.authorization.k8s.io/v1beta1#rbac.authorization.k8s.io/v1#g' | gzip | base64 -w0 | base64 -w0)|" | kubectl replace -f -

-

Domino Apps do not currently support a live upgrade from version 4.1 to version 4.2. After the upgrade, all Apps will be stopped.

To restart them, call the `/v4/modelProducts/restartAll` endpoint with a system administrator's API key, as in the following example:

```shell
curl -X POST --include --header "X-Domino-Api-Key: <admin-api-key>" 'https://<domino-url>/v4/modelProducts/restartAll'
```
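The `nginx-ingress` commands in the Helm 3 note above read the `release` field of the Helm secret, remove two base64 layers, gunzip the result, edit it with `sed`, and re-encode it in reverse order. The following sketch runs that same round trip locally on a hypothetical payload, so you can check the `sed` edits without touching a live secret. `encode_release` and `decode_release` are illustrative helper names. Like the original commands, the sketch assumes GNU coreutils `base64` (for `-w0`).

```shell
#!/usr/bin/env bash
# Sketch: the encode/decode layers used by the nginx-ingress commands,
# exercised on a hypothetical payload instead of a live Kubernetes secret.
set -euo pipefail

# Mirror of the re-encoding half: gzip, then base64 twice.
encode_release() {
  printf '%s' "$1" | gzip | base64 -w0 | base64 -w0
}

# Mirror of the decoding half: two base64 decodes, then gunzip.
decode_release() {
  printf '%s' "$1" | base64 -d | base64 -d | gzip -d
}

# Hypothetical decoded content containing both strings the fix targets.
sample='apiVersion: rbac.authorization.k8s.io/v1beta1
clusterIP: ""
type: LoadBalancer'

encoded=$(encode_release "$sample")

# Apply both edits to the decoded text. The second sed uses "#" as its
# delimiter because the pattern itself contains "/".
patched=$(decode_release "$encoded" \
  | sed 's/clusterIP: ""//g' \
  | sed 's#rbac.authorization.k8s.io/v1beta1#rbac.authorization.k8s.io/v1#g')

# Re-encode the edited payload into the stored form.
reencoded=$(encode_release "$patched")
printf '%s\n' "$patched"
```

The delimiter choice matters. With `/` as the delimiter, the `/` inside `rbac.authorization.k8s.io/v1beta1` ends the `s` command early, and GNU sed rejects the remainder as unknown flags.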

Image: quay.io/domino/fleetcommand-agent:v21

Changes:

-

Adds support for Domino 4.1.10.

Known issues:

-

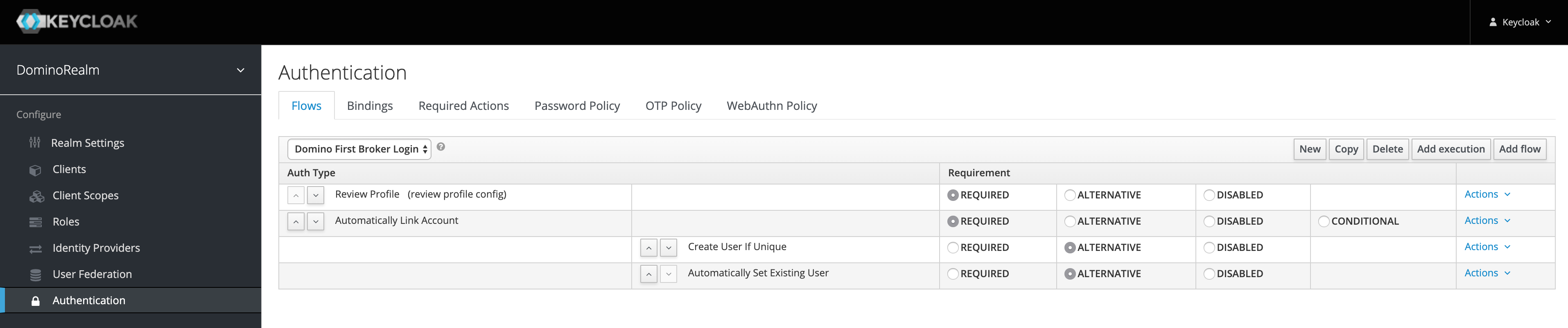

The deployed version 8.0.1 of Keycloak has an incorrect default First Broker Login authentication flow.

When setting up an SSO integration, you must create a new authentication flow like the one shown in the image. The Automatically Link Account step is a custom flow. Nest the Create User if Unique and Automatically Set Existing User executions under it by adding them with the Actions link.

Image: quay.io/domino/fleetcommand-agent:v20

Changes:

-

Support for Domino 4.1.9 has been updated to use a new set of artifacts.

Known issues:

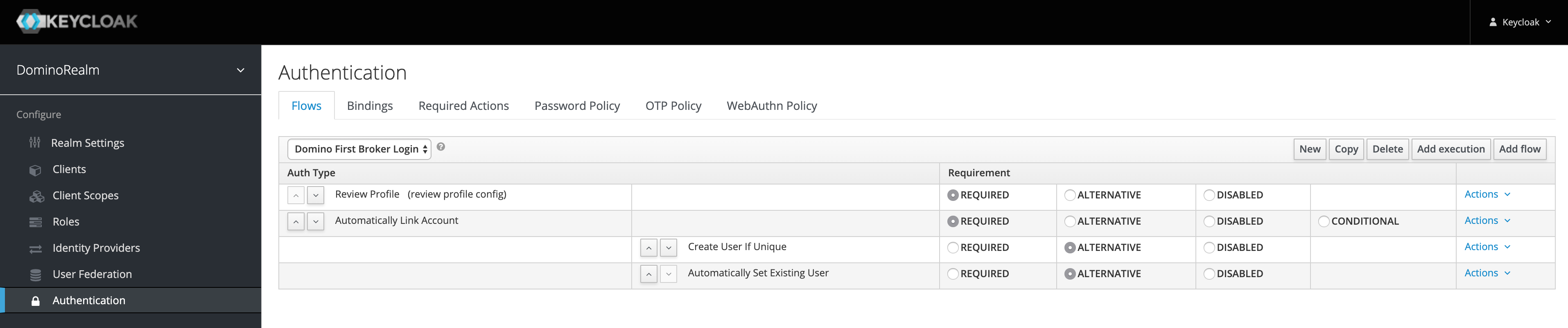

-

The deployed version 8.0.1 of Keycloak has an incorrect default First Broker Login authentication flow.

When setting up an SSO integration, you must create a new authentication flow like the one shown in the image. The Automatically Link Account step is a custom flow. Nest the Create User if Unique and Automatically Set Existing User executions under it by adding them with the Actions link.

Image: quay.io/domino/fleetcommand-agent:v19

Changes:

-

Added catalogs for Domino up to 4.1.9.

-

Added support for the Docker `NO_PROXY` configuration. Domino containers now respect this setting and connect to the specified hosts without going through the proxy.

Known issues:

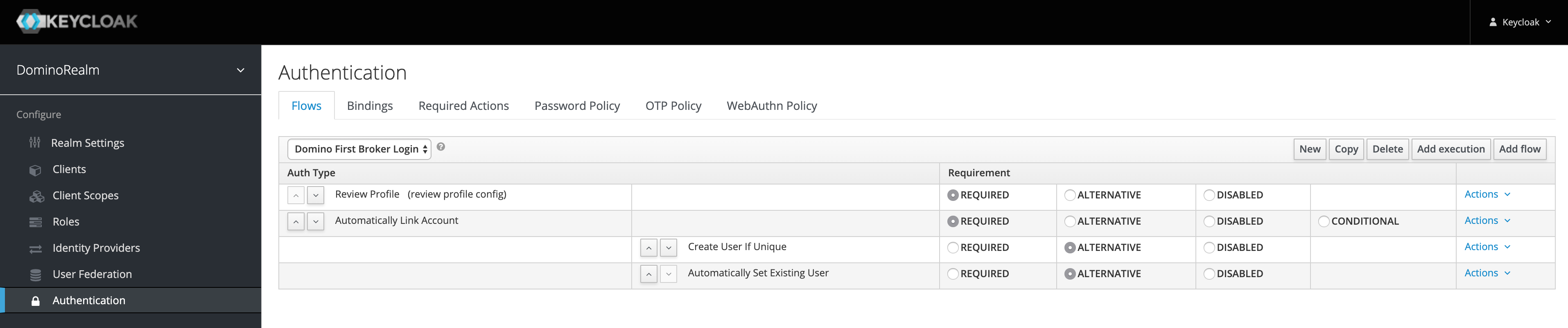

-

The deployed version 8.0.1 of Keycloak has an incorrect default First Broker Login authentication flow.

When setting up an SSO integration, you must create a new authentication flow like the one shown in the image. The Automatically Link Account step is a custom flow. Nest the Create User if Unique and Automatically Set Existing User executions under it by adding them with the Actions link.

Image: quay.io/domino/fleetcommand-agent:v18

Changes:

The following new fields have been added to the fleetcommand-agent installer configuration.

-

Storage class access modes

The `storage_class` options have a new field, `access_modes`, that sets which access modes the underlying storage class allows.

```yaml
storage_classes:
  block:
    # [snip]
    access_modes:
      - ReadWriteOnce
```

-

Git service storage class

Previously, the deployed Domino Git service used storage backed by the `shared` storage class. Now it uses the `dominodisk` block storage class by default. If you use custom storage classes, set `git.storage_class` to the name of your block storage class. For existing Domino installations, you must set it to `dominoshared`.

For new installs:

```yaml
git:
  storage_class: dominodisk
```

For existing installations and upgrades:

```yaml
git:
  storage_class: dominoshared
services:
  git_server:
    chart_values:
      persistence:
        size: 5Ti
```

Image: quay.io/domino/fleetcommand-agent:v17

Changes:

-

Added catalogs for Domino up to 4.1.8.

Image: quay.io/domino/fleetcommand-agent:v16

Changes:

-

Added catalogs for Domino up to 4.1.7.

-

Calico CNI is now installed by default for EKS deployments.

-

The AWS metadata API is blocked by default for Domino 4.1.5 and later.

-

Added private registry support to the installer.

-

New installer configuration attributes (see the reference documentation for details):

-

`sse_kms_key_id` option for blob storage.

-

`gcs` option for Google Cloud Storage.

-

Namespaces now support optional `labels`, which are applied during installation.

-

`teleport` option, for Domino-managed installations only.

Image: quay.io/domino/fleetcommand-agent:v15

Changes:

-

Added catalog for Domino 4.1.4.

-

fleetcommand-agent now also deletes the system namespace.

-

Updated version of Cluster Autoscaler to 1.13.9.

Image: quay.io/domino/fleetcommand-agent:v14

Changes:

-

Updated version of Cluster Autoscaler to 1.13.7.

-

Added catalog for Domino 4.1.3.

Important

Before you upgrade, you must put Domino into maintenance mode to avoid losing work. Maintenance mode pauses all apps, model APIs, restartable workspaces, and scheduled jobs. Allow running jobs to complete, or stop them manually.