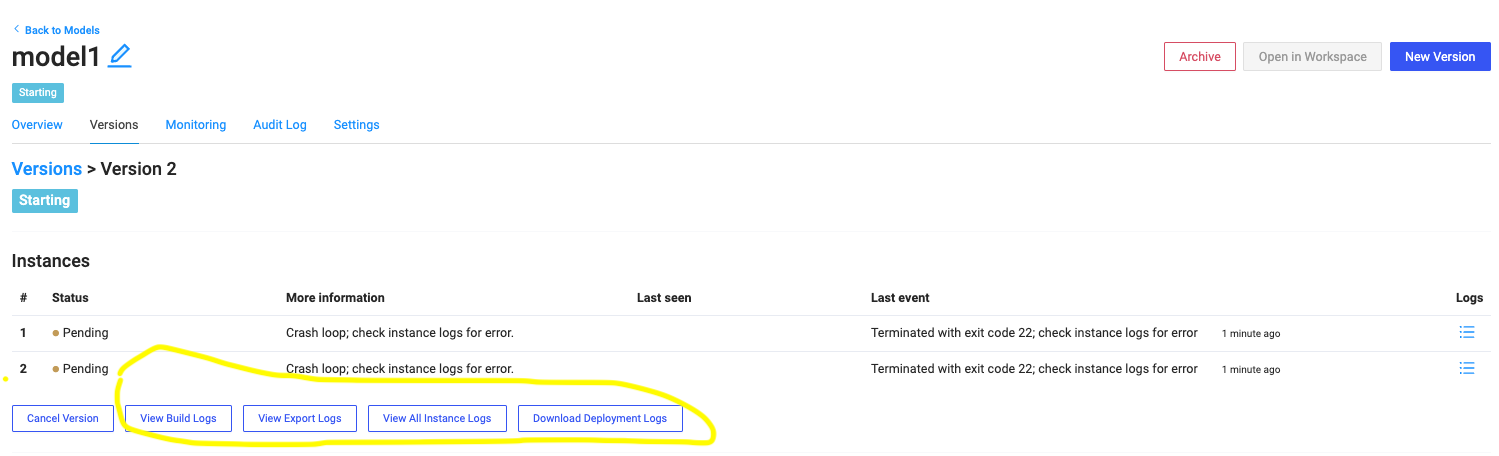

You can investigate Model API publishing issues from the UI by checking the logs.

The build logs contain the messages from the Model API image build stage. The instance logs contain the messages from the running Model API.

You can get more information from Kubernetes by inspecting the model pods with `kubectl`. Start by listing the pods in the `domino-compute` namespace that are not in the `Running` state:

```
<user-id>$ prod-field % kubectl get pods -n domino-compute | egrep -v "Running"
NAME                                              READY   STATUS              RESTARTS           AGE
model-64484be8a7d82e39bb554a67-65fb5f9c48-7hlgd   3/4     CrashLoopBackOff    5767 (3m41s ago)   20d
model-648b14732d311553013beec2-5469b84d68-9vdzz   3/4     CrashLoopBackOff    1 (6s ago)         14s
model-648b14732d311553013beec2-5469b84d68-nhv6w   3/4     CrashLoopBackOff    1 (7s ago)         14s
run-648b14bb73f8e83c8a394443-nmp6q                0/4     ContainerCreating   0                  49s
```

In this case, the model pods are in the `CrashLoopBackOff` state. To get more details on the root cause, describe one of the Model API pods.
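Filtering with `egrep -v "Running"` is quick, but it also hides a pod whose STATUS reads `Running` while its READY count is below the total, for example when a readiness probe keeps failing. The Python sketch below parses the same table and flags Model API pods on both columns. It assumes the default `kubectl get pods` column layout, and the `model-` name prefix used to pick out Model API pods is an assumption taken from the listing above; `parse_pods` and `unhealthy_model_pods` are hypothetical helpers.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Pod:
    name: str
    ready: str      # e.g. "3/4": ready containers / total containers
    status: str     # e.g. "CrashLoopBackOff"
    restarts: int
    age: str


def parse_pods(table: str) -> List[Pod]:
    """Parse the default table printed by `kubectl get pods`."""
    pods = []
    for line in table.strip().splitlines():
        tokens = line.split()
        if not tokens or tokens[0] == "NAME":
            continue  # skip blank lines and the header row
        name, ready, status = tokens[0], tokens[1], tokens[2]
        # RESTARTS can read "5767 (3m41s ago)"; only the leading count is kept.
        restarts = int(tokens[3])
        pods.append(Pod(name, ready, status, restarts, age=tokens[-1]))
    return pods


def unhealthy_model_pods(pods: List[Pod], prefix: str = "model-") -> List[Pod]:
    """Model API pods that are not Running or not fully ready.

    ASSUMPTION: Model API pods are the ones whose names start with `prefix`,
    as in the listing above."""
    result = []
    for pod in pods:
        ready, total = (int(n) for n in pod.ready.split("/"))
        if pod.name.startswith(prefix) and (pod.status != "Running" or ready < total):
            result.append(pod)
    return result


SAMPLE = """\
NAME READY STATUS RESTARTS AGE
model-64484be8a7d82e39bb554a67-65fb5f9c48-7hlgd 3/4 CrashLoopBackOff 5767 (3m41s ago) 20d
model-648b14732d311553013beec2-5469b84d68-9vdzz 3/4 CrashLoopBackOff 1 (6s ago) 14s
model-648b14732d311553013beec2-5469b84d68-nhv6w 3/4 CrashLoopBackOff 1 (7s ago) 14s
run-648b14bb73f8e83c8a394443-nmp6q 0/4 ContainerCreating 0 49s
"""

for pod in unhealthy_model_pods(parse_pods(SAMPLE)):
    print(f"{pod.name}  {pod.status}  restarts={pod.restarts}  age={pod.age}")
```

Parsing the output of `kubectl get pods -n domino-compute -o json` would be sturdier than splitting the text table; the text version is shown only because it matches the output above.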

```
<user-id>$ prod-field % kubectl describe pod model-648b14732d311553013beec2-5469b84d68-9vdzz -n domino-compute
Name:         model-648b14732d311553013beec2-5469b84d68-9vdzz
Namespace:    domino-compute
Priority:     0
Node:         ip-10-0-96-113.us-west-2.compute.internal/10.0.96.113
Start Time:   Thu, 15 Jun 2023 09:40:49 -0400
Labels:       datasource-proxy-client=true
<snip>
Events:
  Type     Reason     Age                From               Message
  ----     ------     ----               ----               -------
  Normal   Scheduled  33s                default-scheduler  Successfully assigned domino-compute/model-648b14732d311553013beec2-5469b84d68-9vdzz to ip-10-0-96-113.us-west-2.compute.internal
  Normal   Pulling    32s                kubelet            Pulling image "172.20.87.71:5000/dominodatalab/model:63e5651859d2635c5c8c7247-v2-202361513390_WsB7eIW7"
  Normal   Pulled     29s                kubelet            Container image "quay.io/domino/fluent.fluent-bit:1.9.4-358991" already present on machine
  Normal   Started    29s                kubelet            Started container fluent-bit
  Normal   Created    29s                kubelet            Created container fluent-bit
  Normal   Pulled     29s                kubelet            Container image "quay.io/domino/logrotate:3.16-351989-357437" already present on machine
  Normal   Created    29s                kubelet            Created container logrotate
  Normal   Started    29s                kubelet            Started container logrotate
  Normal   Pulled     29s                kubelet            Successfully pulled image "172.20.87.71:5000/dominodatalab/model:63e5651859d2635c5c8c7247-v2-202361513390_WsB7eIW7" in 2.472524739s
  Normal   Created    29s                kubelet            Created container harness-proxy
  Normal   Started    29s                kubelet            Started container harness-proxy
  Normal   Pulled     29s                kubelet            Container image "quay.io/domino/harness-proxy:5.6.0.2969129" already present on machine
  Warning  Unhealthy  28s                kubelet            Readiness probe failed: dial tcp 10.0.112.55:8080: i/o timeout
  Normal   Started    12s (x3 over 29s)  kubelet            Started container model-648b14732d311553013beec2
  Normal   Created    12s (x3 over 29s)  kubelet            Created container model-648b14732d311553013beec2
  Normal   Pulled     12s (x2 over 27s)  kubelet            Container image "172.20.87.71:5000/dominodatalab/model:63e5651859d2635c5c8c7247-v2-202361513390_WsB7eIW7" already present on machine
  Warning  BackOff    10s (x3 over 25s)  kubelet            Back-off restarting failed container
```

The events show a failed readiness probe and a `BackOff` warning for the restarting model container, but not why the container exits. The pod logs can provide more information about the error. Because the pod runs several containers (`fluent-bit`, `logrotate`, `harness-proxy`, and the model container), select the model container with `-c`.
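The model container's name does not appear in the pod listing. In this example it is the pod name minus its last two hyphen-separated segments, which follow the usual `<deployment>-<pod-template-hash>-<suffix>` pattern of Deployment-managed pods. The sketch below builds the `kubectl logs` arguments from the pod name; treating that prefix as the container name is an assumption based on this one example, and `model_container_name` and `model_logs_command` are hypothetical helpers.

```python
import shlex
from typing import List


def model_container_name(pod_name: str) -> str:
    """Drop the last two hyphen-separated segments of a Deployment pod name
    (<deployment>-<pod-template-hash>-<suffix>).

    ASSUMPTION: the remaining prefix is the model container's name, as in the
    example above."""
    parts = pod_name.rsplit("-", 2)
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"unexpected pod name: {pod_name!r}")
    return parts[0]


def model_logs_command(pod_name: str, namespace: str = "domino-compute",
                       previous: bool = False) -> List[str]:
    """Build a `kubectl logs` argument list for the model container."""
    cmd = ["kubectl", "logs", pod_name, "-n", namespace,
           "-c", model_container_name(pod_name)]
    if previous:
        # Logs of the previous (crashed) instance of the container.
        cmd.append("--previous")
    return cmd


pod = "model-648b14732d311553013beec2-5469b84d68-9vdzz"
print(shlex.join(model_logs_command(pod)))
print(shlex.join(model_logs_command(pod, previous=True)))
```

The list can be passed to `subprocess.run` to execute it. To confirm the container names instead of relying on the naming pattern, `kubectl get pod <pod-name> -n domino-compute -o jsonpath='{.spec.containers[*].name}'` prints them. Adding `--previous` helps once the container has restarted, because it returns the output of the instance that crashed.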

```
<user-id>$ prod-field % kubectl logs -f model-648b14732d311553013beec2-5469b84d68-9vdzz -n domino-compute -c model-648b14732d311553013beec2
*** Starting uWSGI 2.0.19.1 (64bit) on [Thu Jun 15 13:41:38 2023] ***
compiled with version: 9.3.0 on 02 March 2021 12:42:27
os: Linux-5.10.178-162.673.amzn2.x86_64 #1 SMP Mon Apr 24 23:34:06 UTC 2023
nodename: model-648b14732d311553013beec2-5469b84d68-9vdzz
machine: x86_64
clock source: unix
<snip>
/domino/model-manager/app.cfg
working_directory: /mnt/wasantha_gamage/quick-start script_path: model.py endpoint_function_name: predict2
Traceback (most recent call last):
  File "/domino/model-manager/model_harness.py", line 5, in <module>
    application = make_model_app(load_model_config())
  File "/domino/model-manager/model_harness_utils.py", line 51, in load_model_config
    return make_model_config(CONFIG_FILE_PATH)
  File "/domino/model-manager/model_harness_utils.py", line 26, in make_model_config
    USER_FUNCTION = harness_lib.get_endpoint_function(
  File "/domino/model-manager/harness_lib.py", line 49, in get_endpoint_function
    return getattr(module, function_name)
AttributeError: module '_domino_model' has no attribute 'predict2'
unable to load app 0 (mountpoint='') (callable not found or import error)
*** no app loaded. GAME OVER ***
SIGINT/SIGQUIT received...killing workers...
Exception ignored in: <module 'threading' from '/opt/conda/lib/python3.9/threading.py'>
Traceback (most recent call last):
  File "/opt/conda/lib/python3.9/threading.py", line 1428, in _shutdown
    def _shutdown():
KeyboardInterrupt:
OOPS ! failed loading app in worker 1 (pid 8)
Thu Jun 15 13:41:39 2023 - need-app requested, destroying the instance...
goodbye to uWSGI.
```

Here the traceback identifies the root cause: the Model API is configured with `endpoint_function_name: predict2`, but the module loaded from `model.py` has no attribute `predict2`. uWSGI cannot load the app, the model container exits, and the repeated restarts put the pod into `CrashLoopBackOff`.

The following sections provide useful steps to troubleshoot:
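A mismatch between `endpoint_function_name` and the functions defined in the model script, as in the `AttributeError` above, can be caught before publishing. The harness resolves the endpoint with `getattr(module, function_name)`; the sketch below performs a similar lookup on a local copy of the script and lists the public callables it does find. `endpoint_problem` is a hypothetical helper, not part of Domino. Loading the script runs its top-level code, so run the check from the project's working directory with the same dependencies installed.

```python
import importlib.util
import os
import tempfile
from typing import Optional


def endpoint_problem(script_path: str, function_name: str) -> Optional[str]:
    """Return None if `function_name` is a callable defined in `script_path`,
    otherwise a description of the problem.

    Similar to the getattr(module, function_name) lookup in the traceback,
    but reports which callables are available instead of crashing.
    Note: loading the module executes its top-level code."""
    spec = importlib.util.spec_from_file_location("_endpoint_check", script_path)
    if spec is None or spec.loader is None:
        return f"cannot load {script_path} as a Python module"
    module = importlib.util.module_from_spec(spec)
    spec.loader.exec_module(module)  # runs the script, as importing it would
    if callable(getattr(module, function_name, None)):
        return None
    candidates = sorted(
        name for name, value in vars(module).items()
        if callable(value) and not name.startswith("_")
    )
    return (f"{script_path} has no function {function_name!r}; "
            f"public callables found: {candidates}")


# Demo with a throwaway model.py that defines `predict` but not `predict2`.
with tempfile.TemporaryDirectory() as tmp:
    demo_script = os.path.join(tmp, "model.py")
    with open(demo_script, "w") as fh:
        fh.write("def predict(data):\n    return {'echo': data}\n")
    ok = endpoint_problem(demo_script, "predict")
    missing = endpoint_problem(demo_script, "predict2")

print(ok)
print(missing)
```

For the demo file, `ok` is `None` and `missing` names `'predict2'` and lists `['predict']`. The fix is then either to point `endpoint_function_name` at a function that exists or to add the missing function to `model.py`.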