Note: The Open MPI feature is only available with Domino versions 5.1.1 and later. Previous versions of Domino do not support this feature.

You can use MPI clusters with Domino workspaces, jobs, and scheduled jobs.

Note: MPI clusters are not available for apps, launchers, or model APIs.

Create an on-demand MPI cluster attached to a Domino Workspace

-

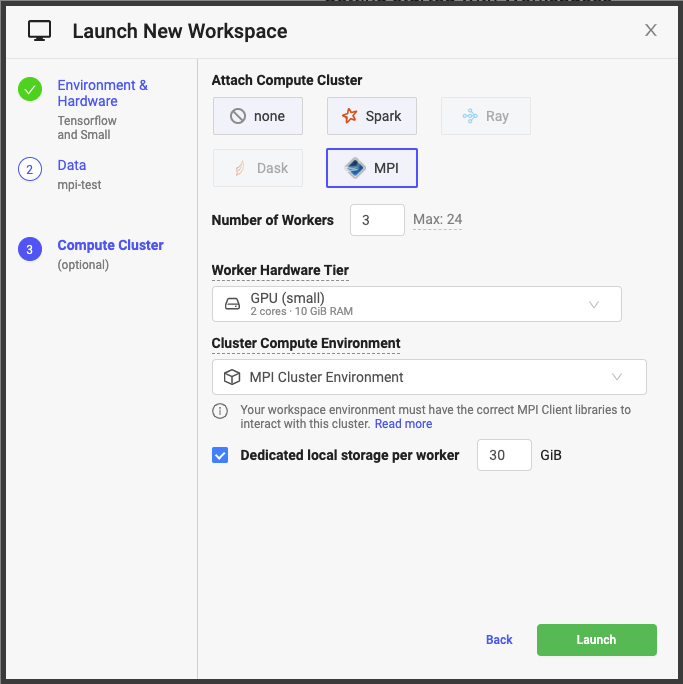

Go to Workspaces > New Workspace.

-

In the Launch New Workspace dialog click Compute Cluster. For your compute cluster, set the following cluster configuration attributes:

-

Number of Workers - The number of MPI node workers making up the cluster when it starts. The combined capacity of the workers is available for your workloads.

-

Worker Hardware Tier - The amount of compute resources (CPU, GPU, and memory) made available to each MPI node worker.

-

Cluster Compute Environment - Specifies the compute environment for the MPI cluster.

-

Dedicated local storage per executor - The amount of dedicated storage in Gigabytes (2 ^ 30 bytes) available to each MPI worker. The storage is automatically mounted to

/tmp. The storage is automatically provisioned when the cluster is created and de-provisioned when it is shut down.WarningThe local storage per worker must not be used for storing any data that must be available after the cluster is shut down.

-

-

To launch your workspace, click Launch.

After the workspace launches it can access the MPI cluster you configured.

Note: The cluster worker nodes can take longer to spin up than the workspace. The status of the cluster is displayed in the navigation bar.

Connect to your cluster

When provisioning your on-demand MPI cluster, Domino initializes environment variables that contain the information needed to connect to your cluster. Domino also creates a hostfile that lists the worker node names. That file is located at `/domino/mpi/hosts`. Submit your `mpirun` jobs from your preferred IDE. The jobs use these default settings:

- Hosts are set to all workers. This does not include the workspace or job container.

- Slots are allotted based on the CPU cores in the selected worker hardware tier. The minimum is one. If you are using GPU worker nodes, slots are set to the minimum CPU/GPU requests from the hardware tier.

- Defaults are set for mapping (socket) and binding (core).
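To check which hosts and slots `mpirun` will use by default, you can read the hostfile from a workspace terminal or notebook. The following is a minimal sketch, assuming the file follows the standard Open MPI hostfile syntax (one host per line, an optional `slots=N` key, `#` comments). The function name `parse_hostfile` is hypothetical, and the exact contents that Domino writes may differ.

```python
from pathlib import Path

DEFAULT_HOSTFILE = "/domino/mpi/hosts"  # location given in this section


def parse_hostfile(text):
    """Return {hostname: slots} from Open MPI hostfile text.

    Assumes the standard Open MPI format: one host per line, an optional
    'slots=N' key, and '#' starting a comment. When a host has no explicit
    slot count, this sketch records 1 (Open MPI may pick another default).
    """
    hosts = {}
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()
        if not line:
            continue  # skip blank lines and comment-only lines
        fields = line.split()
        slots = 1
        for field in fields[1:]:
            key, _, value = field.partition("=")
            if key == "slots" and value.isdigit():
                slots = int(value)
        hosts[fields[0]] = slots
    return hosts


if __name__ == "__main__":
    path = Path(DEFAULT_HOSTFILE)
    if path.exists():
        workers = parse_hostfile(path.read_text())
        print(f"{len(workers)} workers, {sum(workers.values())} slots in total")
        for name, slots in workers.items():
            print(f"  {name}: {slots} slot(s)")
    else:
        print(f"{path} not found; run this in a workspace with an MPI cluster")
```

The workspace or job container does not appear in the result because, as noted above, the default hosts include only the workers.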

Note: You must perform an MPI file sync before you run commands on the worker nodes. See File sync MPI clusters for more information.

- Run a Python script:

  `mpirun python my-training.py`

  You can pass arguments to the `mpirun` command. See the Open MPI docs. The following are some frequently used parameters:

  - `-x <env>` - Distribute an existing environment variable to all workers. You can specify only one environment variable per `-x` option.

  - `--hostfile <your hostfile>` - Specify a new hostfile with your custom slots.

  - `--map-by <core>` - Map to the specified object. The default is `socket`. Create a `rmaps_base_mapping_policy` environment variable to set a new mapping policy.

  - Create a `hwloc_base_binding_policy` environment variable to set a new binding policy.

- For example, to add `--bind-to none`, which specifies that a training process is not bound to a single core, and `--map-by slot`, use the following command:

  `mpirun --bind-to none --map-by slot python my-training.py`
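If you launch `mpirun` from a notebook or a helper script rather than typing it in a terminal, it can help to assemble the arguments programmatically. The following is a minimal sketch that uses only the options described above; the function name `build_mpirun_command` and its parameters are hypothetical and are not part of Domino or Open MPI.

```python
import shlex


def build_mpirun_command(script, env_vars=(), bind_to=None, map_by=None, hostfile=None):
    """Assemble an mpirun argument list that runs a Python script."""
    cmd = ["mpirun"]
    for name in env_vars:
        # One environment variable per -x option.
        cmd += ["-x", name]
    if hostfile:
        cmd += ["--hostfile", hostfile]
    if bind_to:
        cmd += ["--bind-to", bind_to]
    if map_by:
        cmd += ["--map-by", map_by]
    cmd += ["python", script]
    return cmd


if __name__ == "__main__":
    cmd = build_mpirun_command(
        "my-training.py",
        env_vars=["PYTHONPATH"],
        bind_to="none",
        map_by="slot",
    )
    # Print the command for review before running it.
    print(shlex.join(cmd))
    # To launch it from a workspace, pass the list to subprocess:
    #   import subprocess; subprocess.run(cmd, check=True)
```

Building the command as a list, rather than as one string, avoids shell quoting problems when a script path or hostfile path contains spaces.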

Note: If you are using Horovod, Domino recommends running it directly using MPI. If not, enter a command specifying the number of worker nodes. For example, `-H server1:1,server2:1,server3:1` specifies three worker nodes. See the Horovod docs for instructions on converting Horovod commands to MPI.
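If you do pass hosts to Horovod yourself, the `-H` value is a comma-separated list of `host:slots` pairs, as in the example in the note above. The following is a minimal sketch that formats such a value from a mapping of host names to slot counts; the function name `horovod_hosts_arg` is hypothetical, and the host names are the placeholders from the note.

```python
def horovod_hosts_arg(slots_by_host):
    """Format {hostname: slots} as a comma-separated host:slots list."""
    if not slots_by_host:
        raise ValueError("at least one worker host is required")
    return ",".join(f"{host}:{slots}" for host, slots in slots_by_host.items())


if __name__ == "__main__":
    # Placeholder host names taken from the example in the note.
    workers = {"server1": 1, "server2": 1, "server3": 1}
    print("-H", horovod_hosts_arg(workers))
```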

Create an on-demand MPI cluster attached to a Domino Job

-

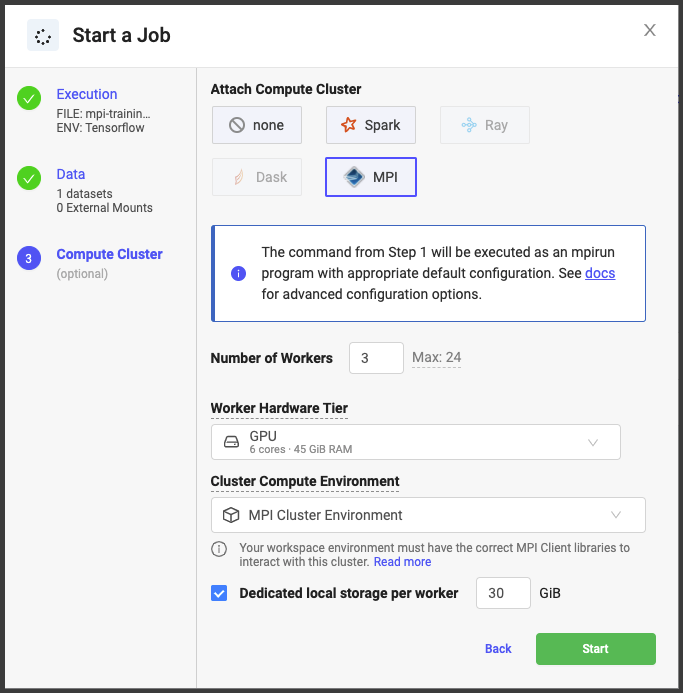

Go to Jobs > Run.

-

In the Start a Job dialog, click Compute Cluster.

For your compute cluster, set the following cluster configuration attributes:

-

Number of Workers - The number of MPI node workers for the cluster when it starts. The combined capacity of the workers is available for your workloads.

-

Worker Hardware Tier - The amount of compute resources (CPU, GPU, and memory) available to each MPI node worker.

-

Cluster Compute Environment - Specifies the compute environment for the MPI cluster.

-

Dedicated local storage per executor - The amount of dedicated storage in Gigabytes (2 ^ 30 bytes) available to each MPI worker. The storage is automatically mounted to

/tmp. The storage is automatically provisioned when the cluster is created and de-provisioned when it is shut down.WarningDo not store any data in the local storage that must be available after the cluster is shut down.

-

-

To launch your job, click Launch.

- Enter the cluster settings and launch your job.

  The job start command is wrapped in an `mpirun` prefix. The defaults are described in Connect to your cluster.

  Note: The Domino job itself executes the `mpirun` command. Other scripts cannot run `mpirun` while that job is executing.

  When writing scripts that are used in a job MPI cluster, you must save artifacts to an external location or to a Domino dataset, not to the project’s files page (`/mnt`). Each project contains a default read/write dataset, accessed using the `/domino/datasets/local/${DOMINO_PROJECT_NAME}` path.

  To tune the Open MPI run in jobs, create an `mpi.conf` file at your root directory with the parameters you want. The root of your project is the `/mnt` working directory, which is linked to the Files page, or the `/mnt/code` directory if you are using a Git-based project. See Open MPI run-time tuning for more information.

  Parameters are set one per line, for example:

  - `mca_base_env_list = EXISTING_ENV_VAR`, where the environment variable is set in the workspace.

  - `orte_hostfile = <new hostfile location>`. For example, copy the default hostfile from `/domino/mpi/hosts` to `/mnt` and set `orte_hostfile = /mnt/hosts`.

  - `rmaps_base_mapping_policy = core`, equivalent to `--map-by core`.

  - `hwloc_base_binding_policy = socket`, equivalent to `--bind-to socket`.
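If you generate `mpi.conf` from a script, for example to keep job tuning under version control, the file content can be rendered from a dictionary. The following is a minimal sketch, assuming `mpi.conf` uses the `name = value` syntax of Open MPI parameter files; the function name `render_mpi_conf` is hypothetical, and the values are the examples from the list above.

```python
def render_mpi_conf(params):
    """Render MCA parameters as 'name = value' text, one parameter per line."""
    lines = []
    for name, value in params.items():
        if "\n" in str(value):
            raise ValueError(f"value for {name} must fit on one line")
        lines.append(f"{name} = {value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    conf = render_mpi_conf(
        {
            "mca_base_env_list": "EXISTING_ENV_VAR",
            "orte_hostfile": "/mnt/hosts",
            "rmaps_base_mapping_policy": "core",
            "hwloc_base_binding_policy": "socket",
        }
    )
    # Review the output, then save it as mpi.conf at the project root.
    print(conf, end="")
```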


When the workspace or job starts, a Domino on-demand MPI cluster is automatically provisioned with your MPI cluster settings. The cluster is attached to the workspace or job when the cluster becomes available.

When the workspace or job shuts down, the on-demand MPI cluster and all associated resources are automatically stopped and de-provisioned. This includes any compute resources and storage allocated for the cluster.
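Because cluster storage is removed at shutdown, job scripts need a durable location for their results. The following is a minimal sketch that resolves a folder inside the project's default dataset, using the `/domino/datasets/local/${DOMINO_PROJECT_NAME}` path described earlier. The function name `artifact_dir`, the folder name `mpi-artifacts`, and the file name `run-info.txt` are hypothetical.

```python
import os
from pathlib import Path


def artifact_dir(subdir="mpi-artifacts", env=None):
    """Return a directory inside the project's default read/write dataset.

    'subdir' is a hypothetical folder name chosen for this sketch.
    """
    env = os.environ if env is None else env
    project = env.get("DOMINO_PROJECT_NAME")
    if not project:
        raise RuntimeError("DOMINO_PROJECT_NAME is not set")
    return Path("/domino/datasets/local") / project / subdir


if __name__ == "__main__":
    try:
        target = artifact_dir()
    except RuntimeError as err:
        print(f"Cannot resolve the dataset path: {err}")
    else:
        target.mkdir(parents=True, exist_ok=True)
        # Placeholder content; a real script would save its own outputs here.
        (target / "run-info.txt").write_text("placeholder\n")
        print(f"Wrote artifacts to {target}")
```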

The on-demand MPI clusters created by Domino are not meant to be shared between multiple users. Each cluster is associated with a given workspace or job instance. Access to the cluster is restricted to users who can access the workspace or the job attached to the cluster. This restriction is enforced at the networking level, and the cluster is reachable only from the execution that provisioned it.