Experimenting with GenAI systems is not the same as training traditional ML models. Instead of training a model from scratch, you assemble systems that mix LLMs, prompts, tools, and agents. These systems are powerful but also complex, dynamic, and difficult to evaluate.

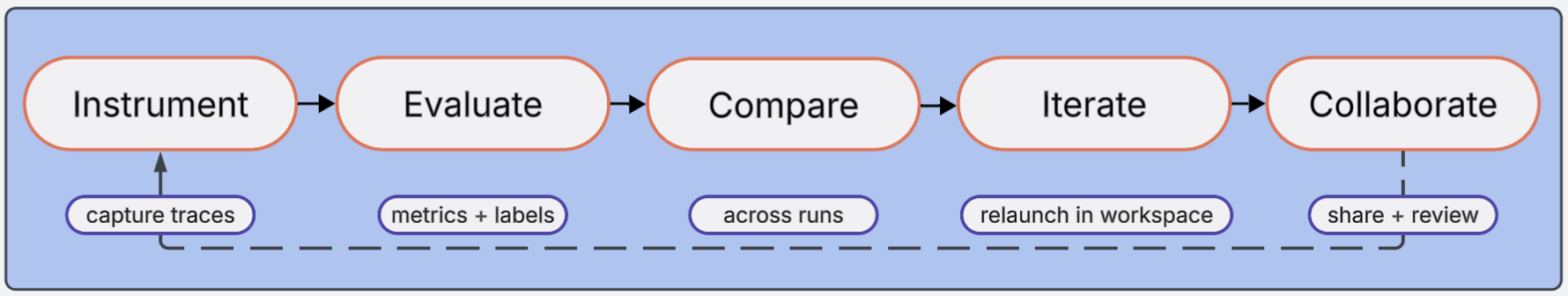

Domino’s trace and evaluation features give you a structured way to bring clarity and rigor to this process:

- Understand system behavior. Traces record every step your GenAI system takes, including downstream calls, so you can debug, analyze, and explain results.
- Evaluate quality. Attach metrics and labels, such as accuracy, toxicity, latency, or human annotations, to individual traces to get fine-grained evidence of performance.
- Compare configurations. Treat each run as a system configuration (prompt, agent, or model) and see exactly how changes affect outcomes.
- Accelerate iteration. When you find a promising configuration, relaunch it in a workspace and keep iterating with a reproducible setup.
- Collaborate effectively. Traces, evaluations, and comparisons live inside your project, so colleagues can review, share, and extend your work.
- Capture your GenAI runs, then add metrics or labels to evaluate them.
- The Domino Experiment Manager logs and stores your runs so you can monitor, compare, and collaborate.
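To make the ideas of traces, attached evaluations, and configuration comparison more concrete, here is a minimal, library-free Python sketch. It is not Domino's API: `Span`, `Trace`, `record`, `compare`, `run`, and the stand-in `fake_retrieve` and `fake_llm` functions are all hypothetical names invented for illustration. The sketch treats a trace as an ordered list of recorded steps, attaches metrics and labels to each trace, and averages a metric per configuration.

```python
import time
from dataclasses import dataclass, field
from statistics import mean
from typing import Any, Callable


@dataclass
class Span:
    """One recorded step of a GenAI system, such as a retrieval or an LLM call."""
    name: str
    inputs: dict[str, Any]
    output: Any
    latency_s: float


@dataclass
class Trace:
    """Every step taken for one request, plus the evaluations attached to it (hypothetical)."""
    config: str  # which prompt/model/agent configuration produced this trace
    spans: list[Span] = field(default_factory=list)
    metrics: dict[str, float] = field(default_factory=dict)  # e.g. accuracy, latency
    labels: dict[str, str] = field(default_factory=dict)     # e.g. human annotations

    def record(self, name: str, fn: Callable[..., Any], **inputs: Any) -> Any:
        """Run one step, time it, and append it to the trace as a span."""
        start = time.perf_counter()
        output = fn(**inputs)
        self.spans.append(Span(name, inputs, output, time.perf_counter() - start))
        return output


def compare(traces: list[Trace], metric: str) -> dict[str, float]:
    """Average one metric per configuration so runs can be compared side by side."""
    scores: dict[str, list[float]] = {}
    for trace in traces:
        if metric in trace.metrics:
            scores.setdefault(trace.config, []).append(trace.metrics[metric])
    return {config: mean(values) for config, values in scores.items()}


# Hypothetical stand-ins for a real retriever and LLM, deterministic for the demo.
def fake_retrieve(query: str) -> str:
    return "France's capital is Paris."


def fake_llm(prompt: str) -> str:
    # Answers correctly only when the prompt includes retrieved context.
    return "Paris" if "Context:" in prompt else "unsure"


def run(config: str, template: str, question: str, expected: str) -> Trace:
    """Execute one request under a given prompt template and evaluate the result."""
    trace = Trace(config=config)
    context = trace.record("retrieve", fake_retrieve, query=question)
    # str.format ignores keyword arguments the template does not use.
    prompt = template.format(context=context, question=question)
    answer = trace.record("generate", fake_llm, prompt=prompt)
    trace.metrics["accuracy"] = 1.0 if answer == expected else 0.0
    trace.metrics["latency_s"] = sum(span.latency_s for span in trace.spans)
    trace.labels["review"] = "pending"  # placeholder for a human annotation
    return trace


question = "What is the capital of France?"
traces = [
    run("prompt_v1", "Question: {question}", question, "Paris"),
    run("prompt_v2", "Context: {context}\nQuestion: {question}", question, "Paris"),
]
scores = compare(traces, "accuracy")
print(scores)  # {'prompt_v1': 0.0, 'prompt_v2': 1.0}
```

Storing the configuration on each trace, rather than on individual spans, keeps the comparison a simple group-by over traces, while the spans preserve the step-by-step detail needed to explain why one configuration scored differently from another.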