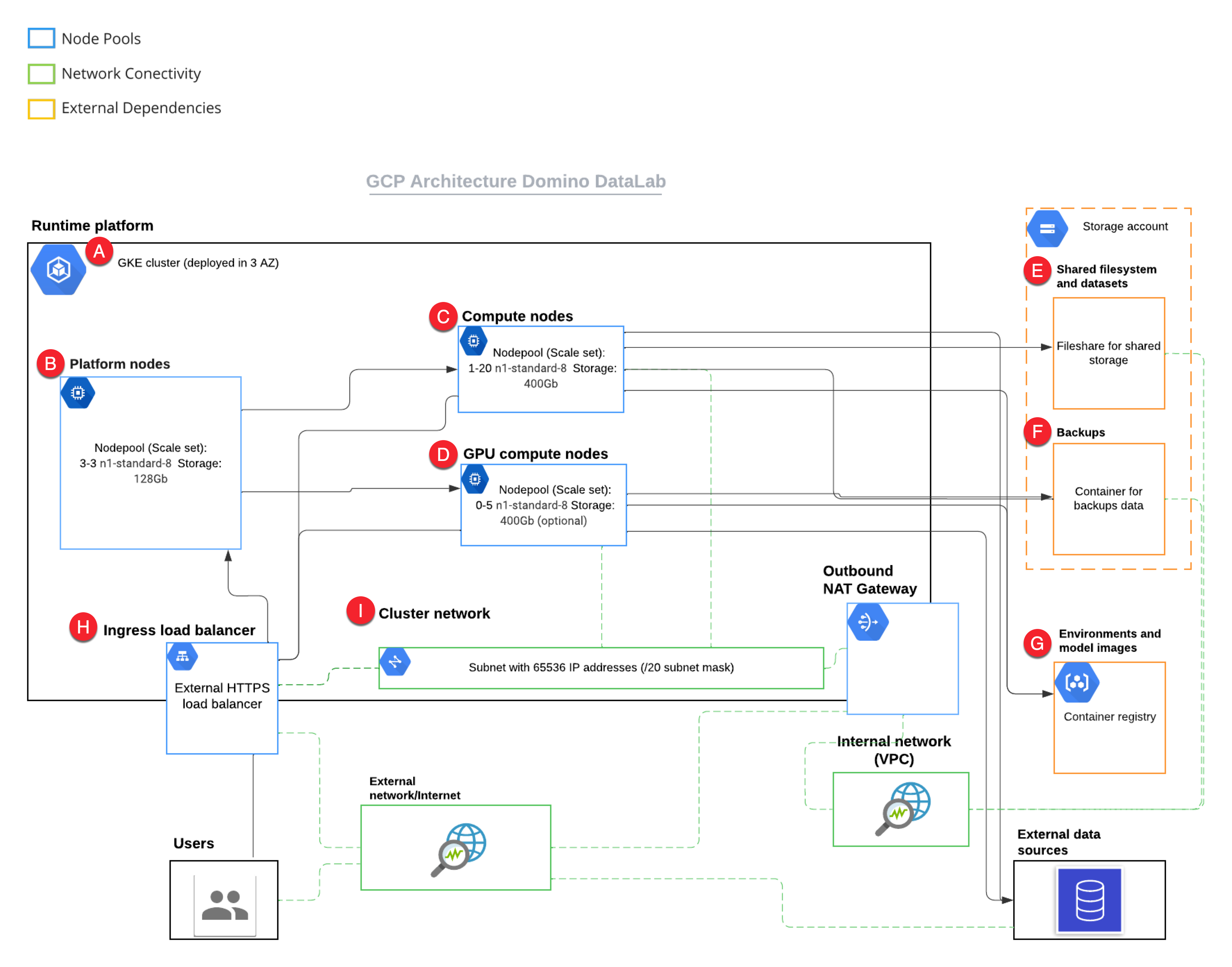

Domino can run on a Kubernetes cluster provided by the Google Kubernetes Engine (GKE).

When running on GKE, the Domino architecture uses Google Cloud Platform (GCP) resources to fulfill the Domino cluster requirements as follows:

- The GKE cluster manages Kubernetes control.

- Domino uses one node pool of three n1-standard-8 worker nodes to host the Domino platform.

- Additional node pools host elastic compute for Domino executions with optional GPU accelerators.

- Cloud Filestore stores user data, backups, logs, and Domino Datasets.

- A Cloud Storage Bucket stores the Domino Docker Registry.

- The kubernetes.io/gce-pd provisioner creates persistent volumes for Domino executions.

This section describes how to configure a GKE cluster for use with Domino.

Namespaces

You don’t have to configure namespaces before installation. During installation, Domino creates the following namespaces in the cluster:

| Namespace | Contains |
|---|---|
| | Durable Domino application, metadata, platform services required for platform operation. |
| | Ephemeral Domino execution pods launched by user actions in the application. |
| | Domino installation metadata and secrets. |

Node pools

The GKE cluster must have at least two node pools that produce worker nodes with the following specifications and distinct node labels, and it might include an optional GPU pool:

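| Pool | Min-Max | Instance | Disk | Labels |
|---|---|---|---|---|
| platform | 4-6 | n1-standard-8 | 128G | dominodatalab.com/node-pool: platform |
| default | 1-20 | n1-standard-8 | 400G | dominodatalab.com/node-pool: default, dominodatalab.com/build-node: true, domino/build-node: true |
| Optional: default-gpu | 0-5 | n1-standard-8 | 400G | dominodatalab.com/node-pool: default-gpu |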

If you want to configure the default-gpu pool, you must add a GPU accelerator to the node pool.

See the GKE documentation about available accelerators and deploying a DaemonSet that automatically installs the necessary drivers.

You can add node pools with distinct dominodatalab.com/node-pool labels to make other instance types available for Domino executions.

See Manage Compute Resources to learn how these different node types are referenced by labels from the Domino application.

Consult the following Terraform snippets for code representations of the required node pools.

Platform pool

```hcl
resource "google_container_node_pool" "platform" {
  name     = "platform"
  location = $YOUR_CLUSTER_ZONE_OR_REGION
  cluster  = $YOUR_CLUSTER_NAME

  initial_node_count = 3

  autoscaling {
    max_node_count = 3
    min_node_count = 3
  }

  node_config {
    preemptible  = false
    machine_type = "n1-standard-8"

    labels = {
      "dominodatalab.com/node-pool" = "platform"
    }

    disk_size_gb    = 128
    local_ssd_count = 1
  }

  management {
    auto_repair  = true
    auto_upgrade = true
  }

  timeouts {
    delete = "20m"
  }
}
```

Default compute pool

```hcl
resource "google_container_node_pool" "compute" {
  name     = "compute"
  location = $YOUR_CLUSTER_ZONE_OR_REGION
  cluster  = $YOUR_CLUSTER_NAME

  initial_node_count = 1

  autoscaling {
    max_node_count = 20
    min_node_count = 1
  }

  node_config {
    preemptible  = false
    machine_type = "n1-standard-8"

    labels = {
      "domino/build-node"            = "true"
      "dominodatalab.com/build-node" = "true"
      "dominodatalab.com/node-pool"  = "default"
    }

    disk_size_gb    = 400
    local_ssd_count = 1
  }

  management {
    auto_repair  = true
    auto_upgrade = true
  }

  timeouts {
    delete = "20m"
  }
}
```

Optional GPU pool

```hcl
resource "google_container_node_pool" "gpu" {
  provider = google-beta
  name     = "gpu"
  location = $YOUR_CLUSTER_ZONE_OR_REGION
  cluster  = $YOUR_CLUSTER_NAME

  initial_node_count = 0

  autoscaling {
    max_node_count = 5
    min_node_count = 0
  }

  node_config {
    preemptible  = false
    machine_type = "n1-standard-8"

    guest_accelerator {
      type  = "nvidia-tesla-p100"
      count = 1
    }

    labels = {
      "dominodatalab.com/node-pool" = "default-gpu"
    }

    disk_size_gb    = 400
    local_ssd_count = 1

    workload_metadata_config {
      node_metadata = "GKE_METADATA_SERVER"
    }
  }

  management {
    auto_repair  = true
    auto_upgrade = true
  }

  timeouts {
    delete = "20m"
  }
}
```

Network policy enforcement

Domino relies on Kubernetes network policies to manage secure communication between pods in the cluster. By default, the network plugin in GKE will not enforce these policies. To run Domino securely on GKE, you must enable enforcement of network policies.

See the GKE documentation for instructions about how to enable network policy enforcement for your cluster.
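If the cluster itself is managed with Terraform, enforcement can typically be switched on in the cluster resource. The following is a minimal sketch, not a complete cluster definition: the resource name, cluster name, and location are placeholders chosen for illustration, and it assumes a Standard GKE cluster that uses the Calico-based network policy add-on. Check the GKE documentation for the options that apply to your cluster version.

```hcl
# Illustrative sketch only: "domino", "domino-cluster", and "us-central1"
# are assumed placeholder values, not names required by Domino.
resource "google_container_cluster" "domino" {
  name     = "domino-cluster"
  location = "us-central1"

  # Node pools are defined as separate google_container_node_pool resources,
  # so the default pool is removed after cluster creation.
  remove_default_node_pool = true
  initial_node_count       = 1

  # Enable enforcement of Kubernetes NetworkPolicy objects on the nodes.
  network_policy {
    enabled  = true
    provider = "CALICO"
  }

  # The network policy add-on must also be enabled on the control plane.
  addons_config {
    network_policy_config {
      disabled = false
    }
  }
}
```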

Dynamic block storage

The Domino installer automatically creates a storage class like the following example for provisioning GCE persistent disks as execution volumes. No manual setup is necessary for this storage class.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: dominodisk
parameters:
  replication-type: none
  type: pd-standard
provisioner: kubernetes.io/gce-pd
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
```

Shared storage

You must provision a Cloud Filestore instance with at least 10T of capacity and configure it to allow access from the cluster. Provide the IP address and mount path of this instance to the Domino installer, which then creates an NFS storage class like the following.

```yaml
allowVolumeExpansion: true
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  labels:
    app.kubernetes.io/instance: nfs-client-provisioner
    app.kubernetes.io/managed-by: Tiller
    app.kubernetes.io/name: nfs-client-provisioner
    helm.sh/chart: nfs-client-provisioner-1.2.6-0.1.4
  name: domino-shared
parameters:
  archiveOnDelete: "false"
provisioner: cluster.local/nfs-client-provisioner
reclaimPolicy: Delete
volumeBindingMode: Immediate
```

Docker registry storage

You need one Cloud Storage bucket, accessible from your cluster, to store the internal Domino Docker Registry.
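The Cloud Filestore instance and the Cloud Storage bucket described above could be provisioned with Terraform roughly as follows. This is a sketch under stated assumptions: every name, the zone, the `BASIC_HDD` tier, the `default` network, and the bucket location are illustrative placeholders, and older versions of the Google provider may expect different argument names. The two outputs expose the IP address and mount path that the Domino installer asks for.

```hcl
# Illustrative sketch only: all names, the zone, the tier, and the network
# below are assumed placeholder values.
resource "google_filestore_instance" "domino_shared" {
  name     = "domino-shared"
  location = "us-central1-a"
  tier     = "BASIC_HDD"

  file_shares {
    name        = "domino"
    capacity_gb = 10240 # at least 10T, as required above
  }

  # The instance must be reachable from the network the cluster runs in.
  networks {
    network = "default"
    modes   = ["MODE_IPV4"]
  }
}

# Bucket for the internal Domino Docker Registry; bucket names are globally
# unique, so this one is only an example.
resource "google_storage_bucket" "domino_registry" {
  name     = "example-domino-docker-registry"
  location = "US"
}

# Values to hand to the Domino installer.
output "filestore_ip" {
  value = google_filestore_instance.domino_shared.networks[0].ip_addresses[0]
}

output "filestore_mount_path" {
  value = "/${google_filestore_instance.domino_shared.file_shares[0].name}"
}
```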

Domain

You must configure Domino to serve from a specific FQDN. To serve Domino securely over HTTPS, you need an SSL certificate that covers the chosen name. Record the FQDN for use when you install Domino.

Important: A Domino install can’t be hosted on a subdomain of another Domino install. For example, if you have Domino deployed at data-science.example.com, you can’t deploy another instance of Domino at acme.data-science.example.com.

After Domino is deployed into your cluster, you must set up DNS for this name to point to an HTTPS Cloud Load Balancer that has an SSL certificate for the chosen name, and forwards traffic to port 80 on your platform nodes.
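As a rough illustration of the DNS step, the following Terraform sketch reserves a global IP address for the load balancer and points an A record for the chosen FQDN at it. It assumes the domain is hosted in a Cloud DNS managed zone; the zone name `example-zone`, the address name, and the FQDN are hypothetical, and the load balancer, its SSL certificate, and the backend that forwards to port 80 on the platform nodes are not shown.

```hcl
# Illustrative sketch only: resource names, the managed zone, and the FQDN
# are assumed placeholder values.
resource "google_compute_global_address" "domino" {
  name = "domino-lb-ip"
}

resource "google_dns_record_set" "domino" {
  managed_zone = "example-zone"
  name         = "data-science.example.com." # trailing dot is required
  type         = "A"
  ttl          = 300
  rrdatas      = [google_compute_global_address.domino.address]
}
```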

- Deploy workloads across multiple Kubernetes clusters with Domino’s Nexus Hybrid Architecture.

- Find out more about Domino’s on-premises deployment service architecture.

- Learn about how Domino uses Keycloak to manage user accounts.