You must create hardware tiers that specify the CPU, memory, and GPU resources for the pods that host Domino executions.

Users select a hardware tier when they launch an execution. The tier determines the resources available to the execution and the type of node it runs on.

1. Go to Manage Resources > Hardware Tiers.

2. Click New.

3. Set the following fields to configure the resource requests and limits for execution pods.

- Name: The name of the hardware tier that users see.

- Cores Requested: The number of requested CPUs.

- Memory Requested (GiB): The amount of requested memory.

- Memory Limit (GiB): The maximum amount of memory. Domino recommends setting this to the same value as the request.

  If Allow executions memory limit to exceed request is not selected, Domino automatically applies a memory limit equal to the request.

  Warning: Use the option to allow the memory limit to exceed the request with caution, because it can make executions more likely to be evicted under memory pressure. See the Kubernetes documentation to learn how memory requests and limits influence Kubernetes eviction decisions.
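Kubernetes assigns each pod a quality-of-service (QoS) class based on its CPU and memory requests and limits. Its documentation suggests using that class to estimate which pods are likely to be evicted first. The following minimal Python sketch is not part of Domino. It applies the documented QoS rules to show why a memory limit equal to the request matters. `Resources` and `qos_class` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Resources:
    """Hypothetical container resources; keys are 'cpu' (cores) and 'memory' (GiB)."""
    requests: dict[str, float] = field(default_factory=dict)
    limits: dict[str, float] = field(default_factory=dict)


def qos_class(containers: list[Resources]) -> str:
    """Apply the Kubernetes QoS rules for CPU and memory to a pod's containers."""
    # BestEffort: no container sets any CPU or memory request or limit.
    if all(not c.requests and not c.limits for c in containers):
        return "BestEffort"
    # Guaranteed: every container sets CPU and memory limits, and requests equal limits.
    for c in containers:
        for name in ("cpu", "memory"):
            if name not in c.limits:
                return "Burstable"
            # Kubernetes defaults a missing request to the limit.
            if c.requests.get(name, c.limits[name]) != c.limits[name]:
                return "Burstable"
    return "Guaranteed"


same = Resources(requests={"cpu": 4, "memory": 15}, limits={"cpu": 4, "memory": 15})
burst = Resources(requests={"cpu": 4, "memory": 15}, limits={"cpu": 4, "memory": 30})
print(qos_class([same]))   # Guaranteed
print(qos_class([burst]))  # Burstable
```

Allowing the memory limit to exceed the request moves the pod from Guaranteed to Burstable. Kubernetes treats Burstable pods as more likely eviction candidates.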

- Cores Limit: The maximum number of CPUs. Domino recommends setting this to the same value as the request.

  If Allow executions to exceed request when unused CPU is available is not selected, Domino automatically applies a CPU limit equal to the request.
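The default-limit behavior of the two options can be expressed as a small function. This is a minimal Python sketch with two assumptions. First, each option is treated as a per-tier boolean. Second, the tier's values are assumed to map directly onto a Kubernetes container `resources` block. `HardwareTier`, `container_resources`, and the quantity helpers are hypothetical names. Domino's actual pod specification may differ.

```python
from dataclasses import dataclass


@dataclass
class HardwareTier:
    """Hypothetical model of the form fields; the two flags are assumed per-tier options."""
    cores_requested: float
    cores_limit: float
    memory_requested_gib: float
    memory_limit_gib: float
    allow_cpu_burst: bool = False     # "Allow executions to exceed request when unused CPU is available"
    allow_memory_burst: bool = False  # "Allow executions memory limit to exceed request"


def cpu_quantity(cores: float) -> str:
    # Express cores as Kubernetes millicores, e.g. 0.5 -> "500m".
    return f"{round(cores * 1000)}m"


def memory_quantity(gib: float) -> str:
    # Express GiB as Kubernetes mebibytes, e.g. 7.5 -> "7680Mi".
    return f"{round(gib * 1024)}Mi"


def container_resources(tier: HardwareTier) -> dict:
    """Build a Kubernetes-style resources block, applying the default-limit rule."""
    # When the matching option is not selected, the limit falls back to the request.
    cpu_limit = tier.cores_limit if tier.allow_cpu_burst else tier.cores_requested
    mem_limit = tier.memory_limit_gib if tier.allow_memory_burst else tier.memory_requested_gib
    # Kubernetes rejects a container whose request exceeds its limit.
    if cpu_limit < tier.cores_requested or mem_limit < tier.memory_requested_gib:
        raise ValueError("limits must be greater than or equal to requests")
    return {
        "requests": {"cpu": cpu_quantity(tier.cores_requested),
                     "memory": memory_quantity(tier.memory_requested_gib)},
        "limits": {"cpu": cpu_quantity(cpu_limit),
                   "memory": memory_quantity(mem_limit)},
    }


tier = HardwareTier(cores_requested=2, cores_limit=4,
                    memory_requested_gib=7.5, memory_limit_gib=15)
# Neither option is selected, so the limits equal the requests.
print(container_resources(tier))
# {'requests': {'cpu': '2000m', 'memory': '7680Mi'}, 'limits': {'cpu': '2000m', 'memory': '7680Mi'}}
```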

- Number of GPUs: The number of requested GPUs.

The request values (CPU cores, memory, and GPUs) are thresholds that determine whether a node has the capacity to host an execution pod. These requested resources are effectively reserved for the pod. The limit values control how much of each resource a pod can use beyond the amount it requested: if the node has additional headroom, the pod can use resources up to its limit.

However, suppose resources are in contention and a pod uses more than it requested, causing excess demand on the node. In that case, Kubernetes might evict the pod from the node, which terminates the associated Domino run. For this reason, Domino recommends that you set requests and limits to the same values.
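The eviction behavior follows the kubelet's node-pressure ranking. According to the Kubernetes documentation, the kubelet considers three factors in order: whether a pod's usage exceeds its request, the pod's priority, and its usage relative to the request. The sketch below approximates that ranking for memory only. `PodUsage` and `eviction_order` are hypothetical names. The sketch ignores eviction thresholds and the kernel OOM killer, which acts separately when a container exceeds its own memory limit.

```python
from dataclasses import dataclass


@dataclass
class PodUsage:
    """Hypothetical snapshot of one pod on a node under memory pressure."""
    name: str
    memory_request_gib: float
    memory_usage_gib: float
    priority: int = 0


def eviction_order(pods: list[PodUsage]) -> list[str]:
    """Rank pods approximately as the kubelet does under memory pressure (most likely first)."""
    def key(p: PodUsage):
        exceeds = p.memory_usage_gib > p.memory_request_gib
        over = p.memory_usage_gib - p.memory_request_gib
        # 1) pods using more than they requested come first,
        # 2) then pods with lower priority,
        # 3) then pods furthest above their request.
        return (not exceeds, p.priority, -over)
    return [p.name for p in sorted(pods, key=key)]


pods = [
    PodUsage("run-a", memory_request_gib=8, memory_usage_gib=8),   # within its request
    PodUsage("run-b", memory_request_gib=8, memory_usage_gib=12),  # 4 GiB over
    PodUsage("run-c", memory_request_gib=8, memory_usage_gib=10),  # 2 GiB over
]
print(eviction_order(pods))  # ['run-b', 'run-c', 'run-a']
```

A run whose requests equal its limits cannot use more memory than it requested. It therefore stays out of the first eviction group.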

Ensure that the CPU, memory, and GPU requests in your hardware tier do not exceed the resources available on a single node in the target node pool, after accounting for overhead. Otherwise, an execution that uses the hardware tier never starts. If you need more resources than existing nodes provide, you might have to add a node pool with different specifications. This might mean adding individual nodes to a static cluster or configuring new autoscaling components that provision nodes with the required specifications and labels.
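To check a tier before saving it, compare its requests with the resources a node in the target pool can offer once overhead is subtracted. The following minimal sketch makes stated assumptions. `NodeShape`, `Overhead`, and `tier_fits` are hypothetical names, and the overhead figures in the example are illustrative rather than measured. On a real cluster, start from the Allocatable values that `kubectl describe node` reports. Then also subtract the requests of DaemonSet pods that run on every node.

```python
from dataclasses import dataclass


@dataclass
class NodeShape:
    """Hypothetical node type in a node pool."""
    cpu_cores: float
    memory_gib: float
    gpus: int = 0


@dataclass
class Overhead:
    """Hypothetical per-node overhead: reserved resources plus DaemonSet pod requests."""
    cpu_cores: float
    memory_gib: float


def tier_fits(cores: float, memory_gib: float, gpus: int,
              node: NodeShape, overhead: Overhead) -> bool:
    """Return True if a tier's requests fit on an otherwise empty node of this shape."""
    return (cores <= node.cpu_cores - overhead.cpu_cores
            and memory_gib <= node.memory_gib - overhead.memory_gib
            and gpus <= node.gpus)


node = NodeShape(cpu_cores=8, memory_gib=32)
overhead = Overhead(cpu_cores=0.5, memory_gib=2.5)  # illustrative values
print(tier_fits(8, 30, 0, node, overhead))  # False: 8 cores requested, 7.5 available
print(tier_fits(7, 28, 0, node, overhead))  # True
```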

- Cents Per Minute Per Run: The cost, in cents, of running each machine in the hardware tier for one minute. When users select a hardware tier, they see the cost in dollars: Domino converts the value from cents to dollars and rounds it to four decimal places.
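The cents-to-dollars conversion can be reproduced with Python's `decimal` module, which avoids binary floating-point rounding surprises. This sketch assumes round-half-up rounding to four decimal places, because the rounding mode Domino actually uses is not stated here. `cents_to_dollars` is a hypothetical name.

```python
from decimal import Decimal, ROUND_HALF_UP


def cents_to_dollars(cents_per_minute: str) -> Decimal:
    """Convert a per-minute price in cents to dollars, rounded to four decimal places.

    ROUND_HALF_UP is an assumption, not a documented Domino behavior.
    """
    dollars = Decimal(cents_per_minute) / 100
    return dollars.quantize(Decimal("0.0001"), rounding=ROUND_HALF_UP)


print(cents_to_dollars("15"))       # 0.1500
print(cents_to_dollars("0.12345"))  # 0.0012
```

Passing the price as a string keeps values such as `0.12345` exact before rounding.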

- Node Pool

-

Specify the underlying machine type on which a Domino execution will run.

Nodes that have the same value for the

dominodatalab.com/node-poolKubernetes node label form a Node Pool. Executions with a matching value in the Node Pool field will then run on these nodes. Configure spot instances has information on creating node pools with spot instances.As an example, in the previous screenshot, the

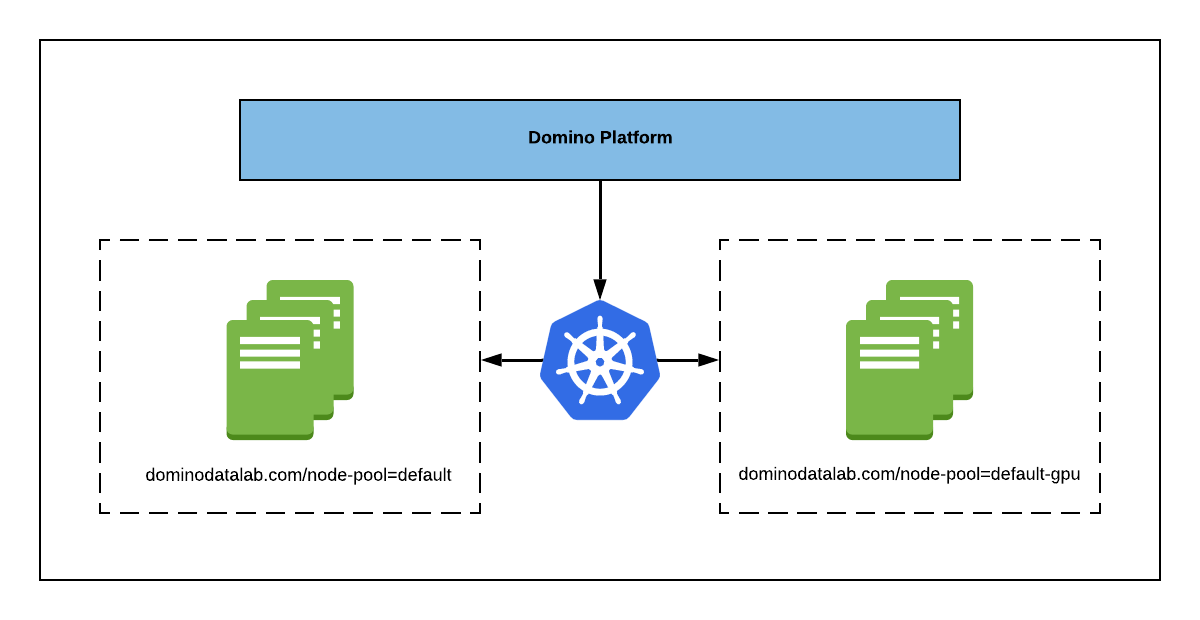

large-k8shardware tier is configured to use thedefaultnode pool.The following diagram shows a cluster configured with two node pools for Domino, one named

defaultand one nameddefault-gpu. You can label node pools with the same schemedominodatalab.com/node-pool=<node-pool-name>to make additional node pools available to Domino. The arrows in the diagram represent Domino requesting that a node with a given label be assigned to an execution. Kubernetes will then assign the execution to a node in the specified pool that has sufficient resources.

By default, Domino creates a node pool with the label

dominodatalab.com/node-pool=defaultand all compute nodes Domino creates in cloud environments are assumed to be in this pool. In cloud environments with automatic node scaling, you configure scaling components like AWS Auto Scaling Groups or Azure Scale Sets with these labels to create elastic node pools.
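Node pool membership is a plain label match. The following sketch groups nodes by the `dominodatalab.com/node-pool` label and lists the nodes that a tier's Node Pool value would match, similar to what a Kubernetes `nodeSelector` expresses. The source does not say whether Domino uses a node selector or node affinity internally. The node names and the `node_pools` and `matching_nodes` helpers are hypothetical. The sketch does not model the resource check that Kubernetes performs afterward.

```python
from collections import defaultdict

POOL_LABEL = "dominodatalab.com/node-pool"


def node_pools(nodes: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Group node names by their node-pool label; unlabeled nodes belong to no pool."""
    pools: dict[str, list[str]] = defaultdict(list)
    for name, labels in nodes.items():
        pool = labels.get(POOL_LABEL)
        if pool is not None:
            pools[pool].append(name)
    return dict(pools)


def matching_nodes(nodes: dict[str, dict[str, str]], tier_node_pool: str) -> list[str]:
    """List nodes whose labels contain the selector derived from a tier's Node Pool value."""
    selector = {POOL_LABEL: tier_node_pool}
    # A node matches when every selector key/value pair appears in its labels.
    return [name for name, labels in nodes.items() if selector.items() <= labels.items()]


nodes = {
    "node-1": {POOL_LABEL: "default"},
    "node-2": {POOL_LABEL: "default"},
    "node-3": {POOL_LABEL: "default-gpu"},
    "node-4": {},  # no pool label, so it is not part of any Domino node pool
}
print(node_pools(nodes))                     # {'default': ['node-1', 'node-2'], 'default-gpu': ['node-3']}
print(matching_nodes(nodes, "default-gpu"))  # ['node-3']
```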

4. Click Insert.

Note: See Advanced hardware tier settings.

You can define specific hardware tiers for machine learning models deployed as Domino endpoints. This lets you tailor hardware tiers to the unique demands of model deployment. Domino endpoint tiers and regular hardware tiers are not interchangeable: Domino endpoints can use only Domino endpoint tiers, and Domino endpoint tiers cannot be used outside of Domino endpoints.

To learn more, see Scale models with Domino endpoint hardware tiers.